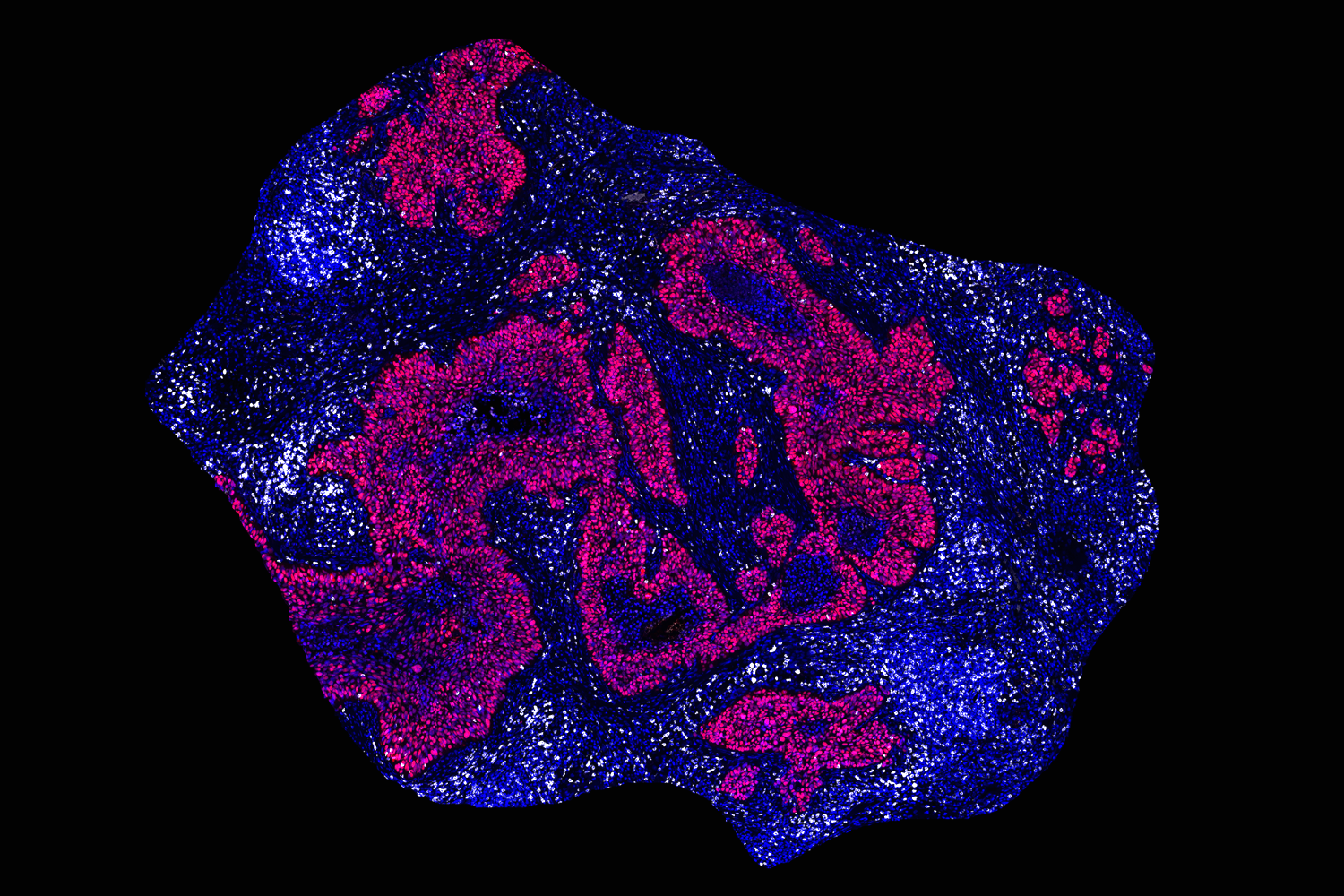

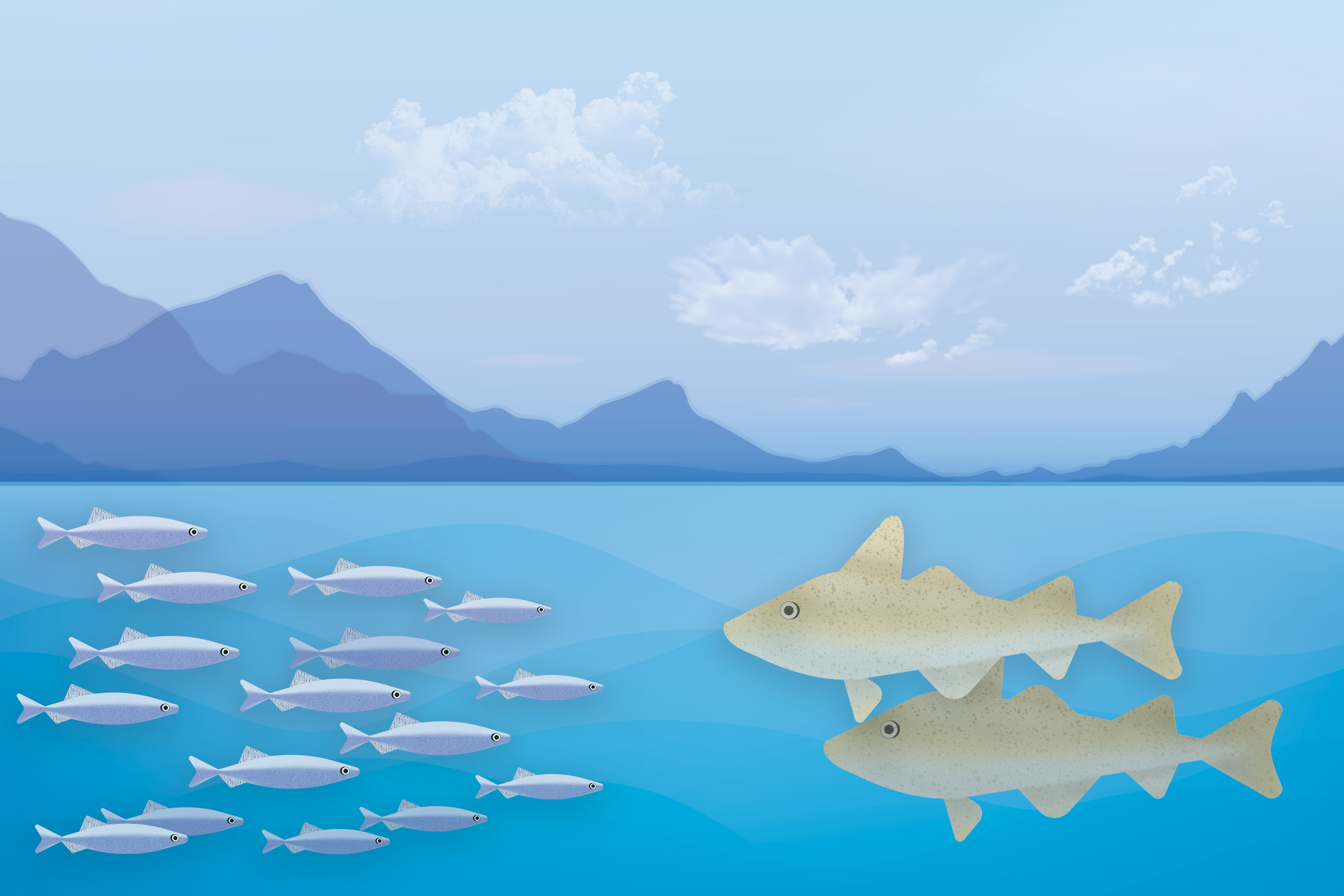

An abundant phytoplankton feeds a global network of marine microbes

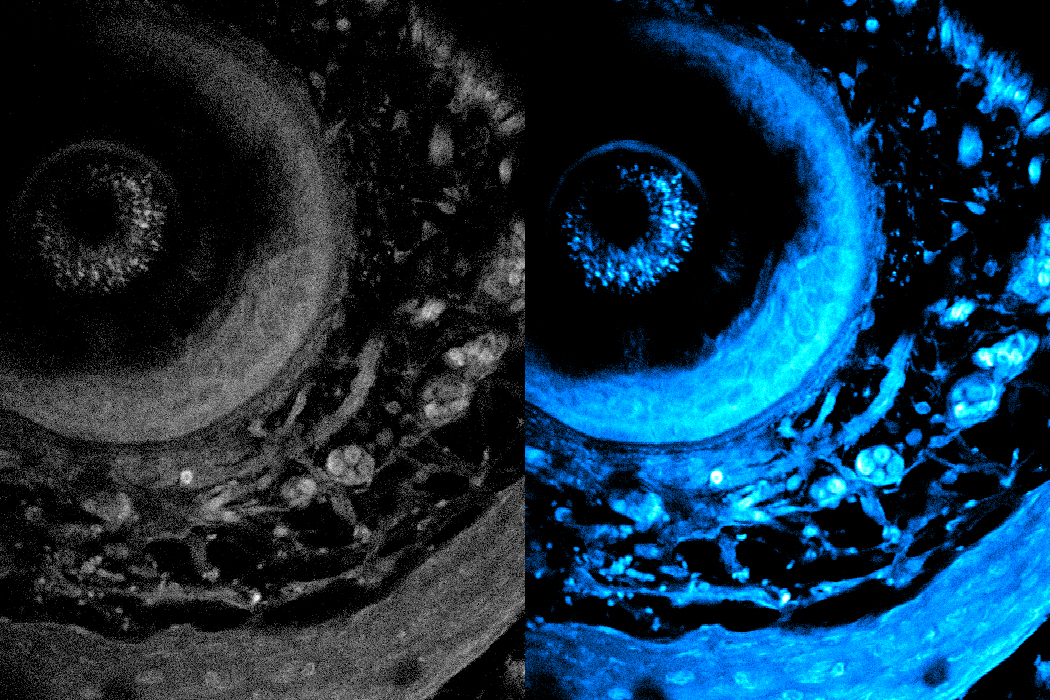

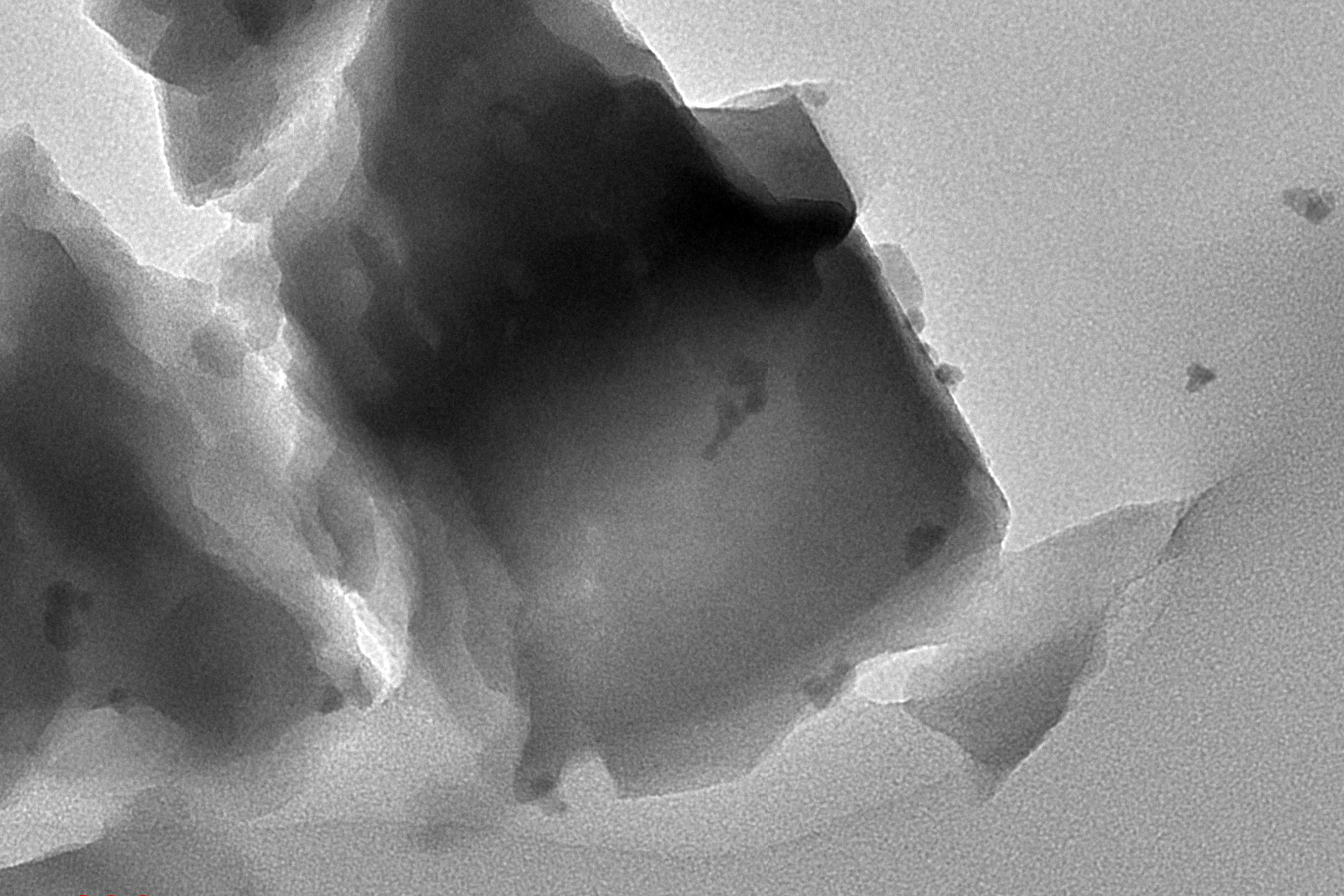

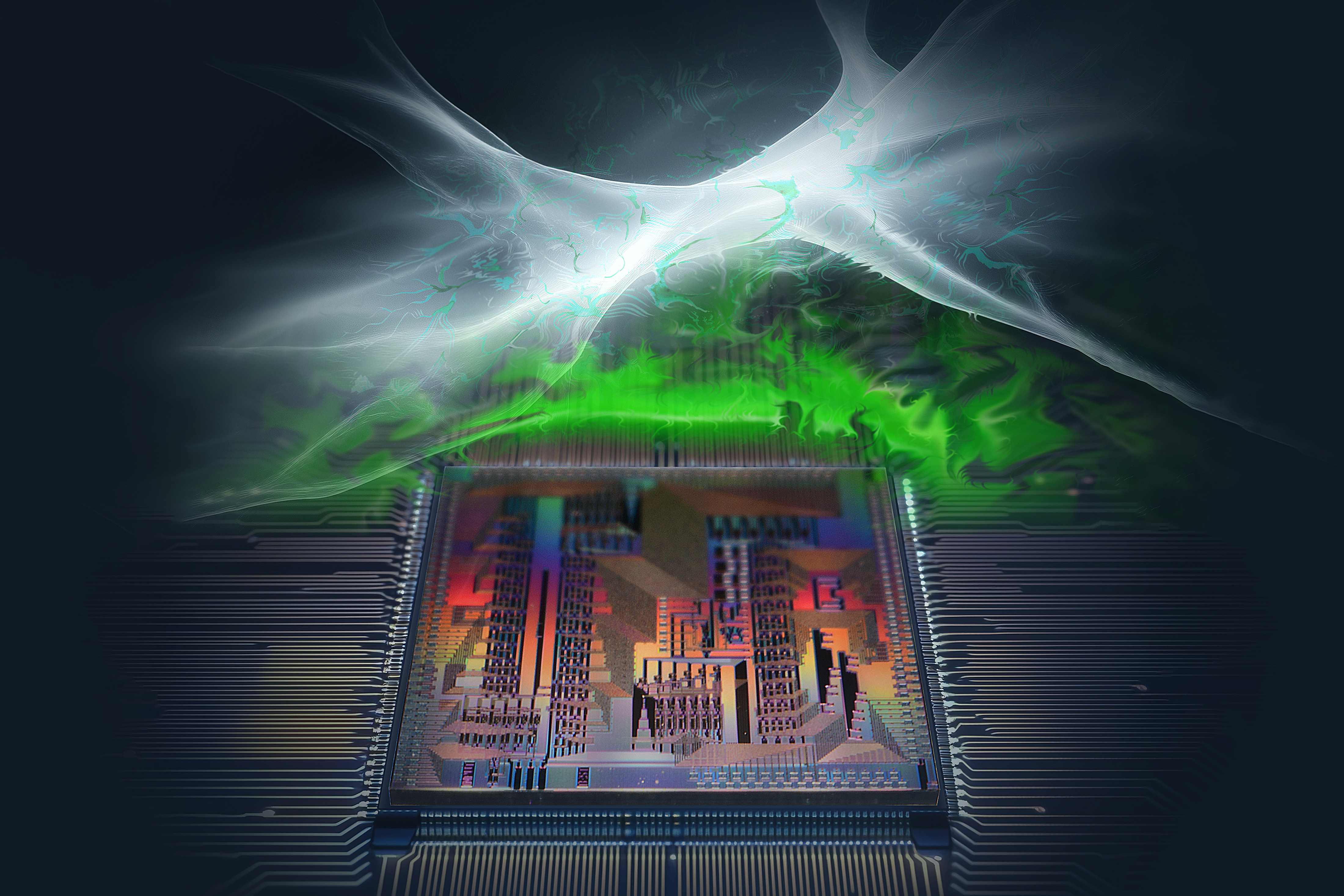

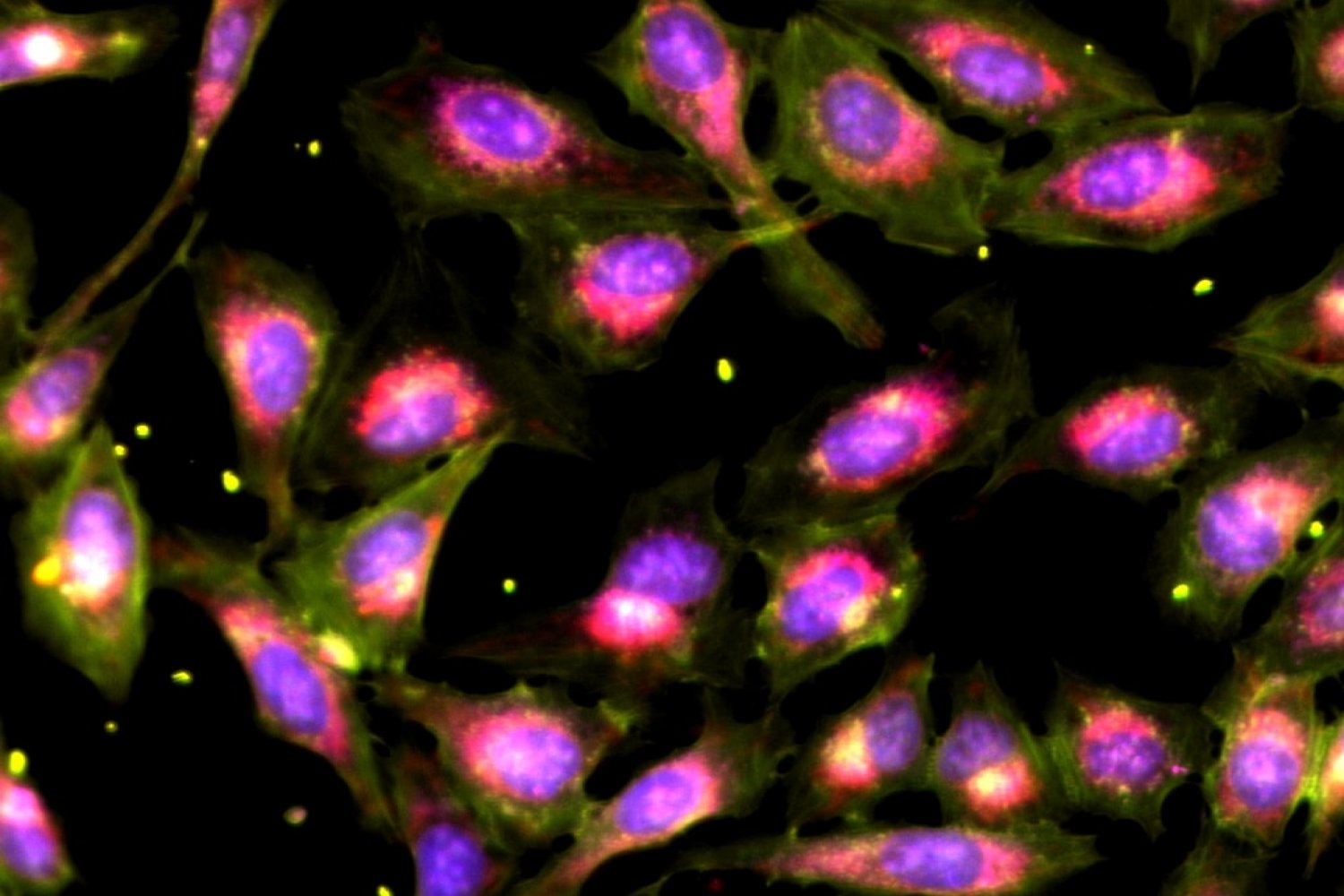

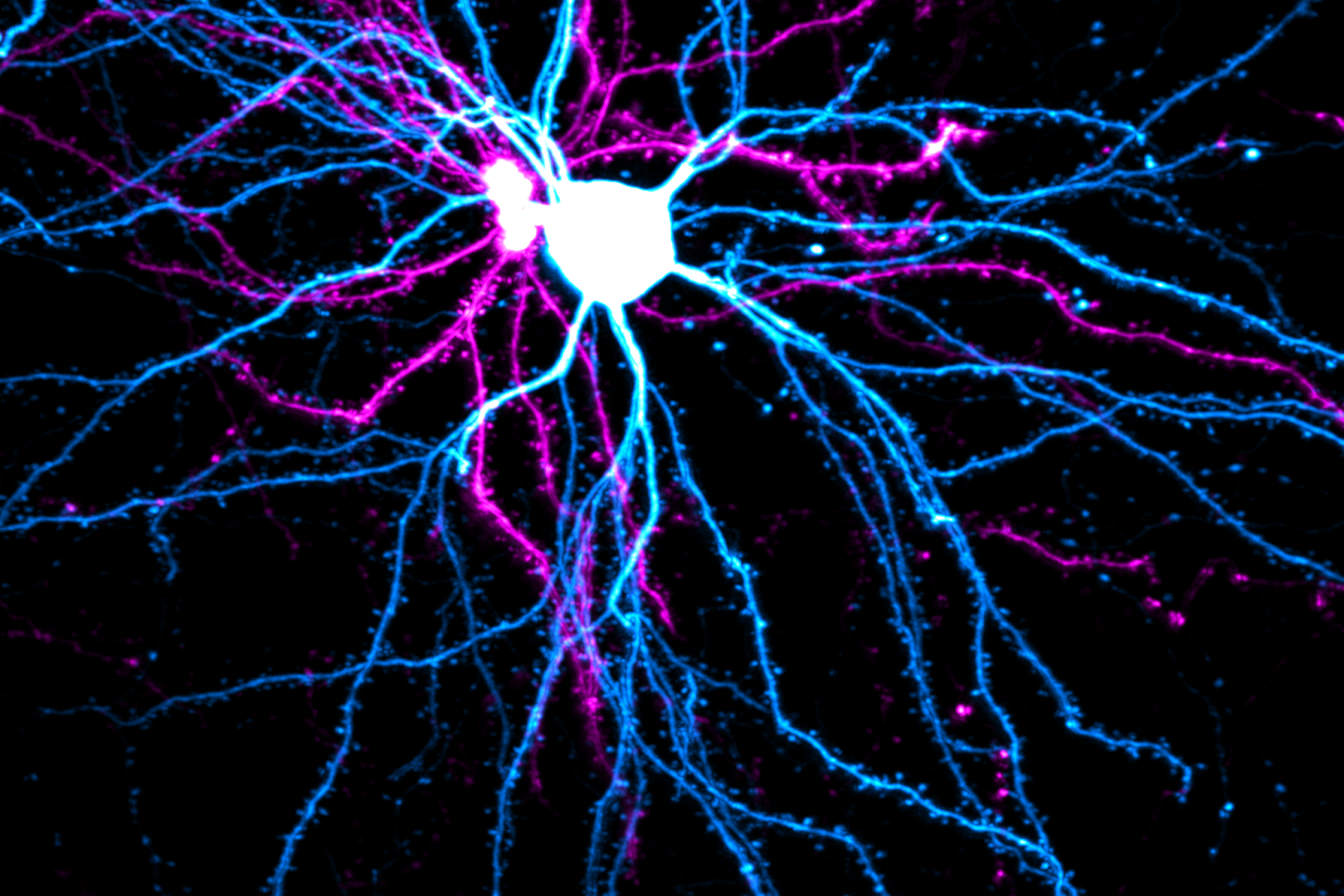

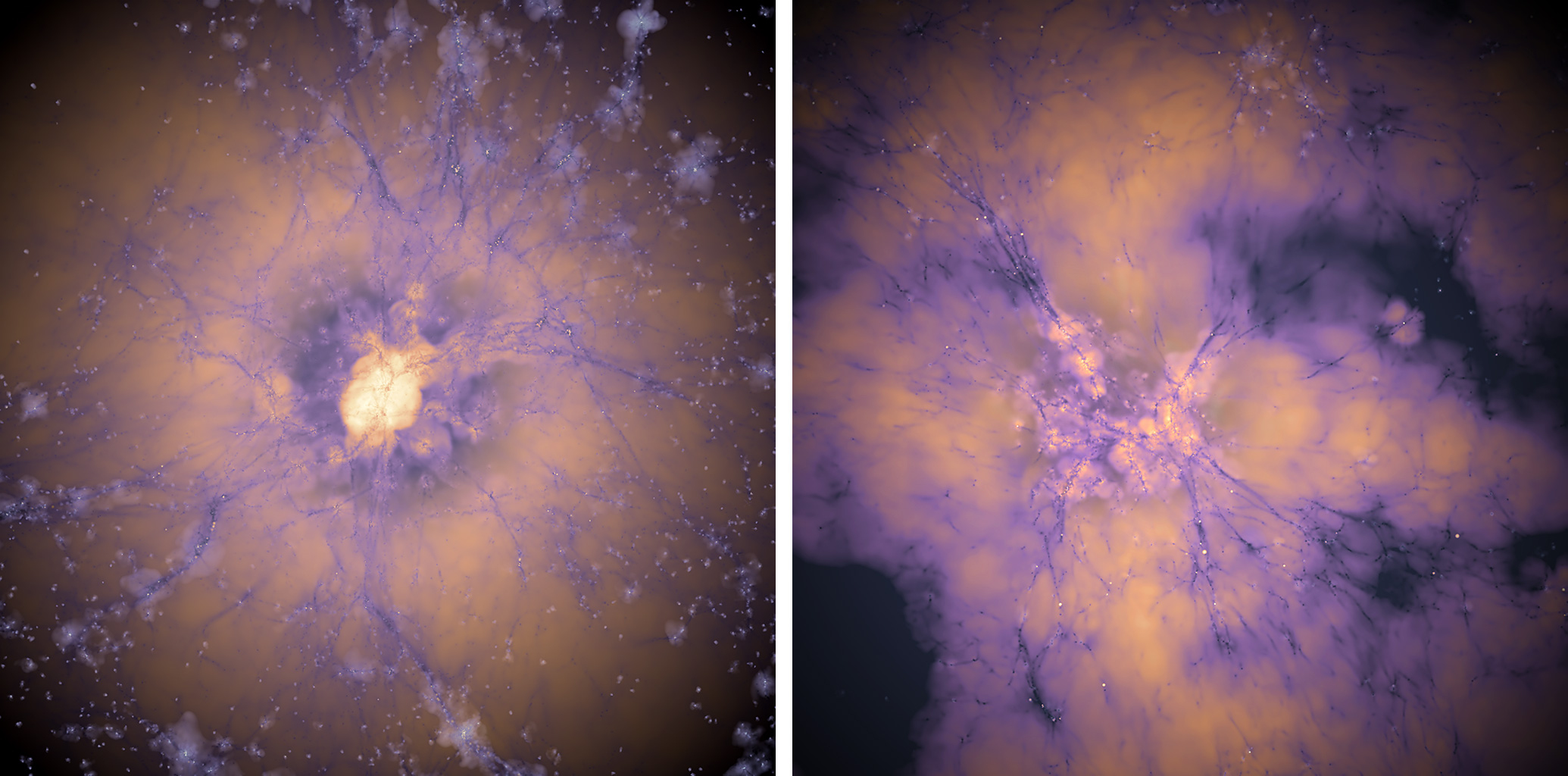

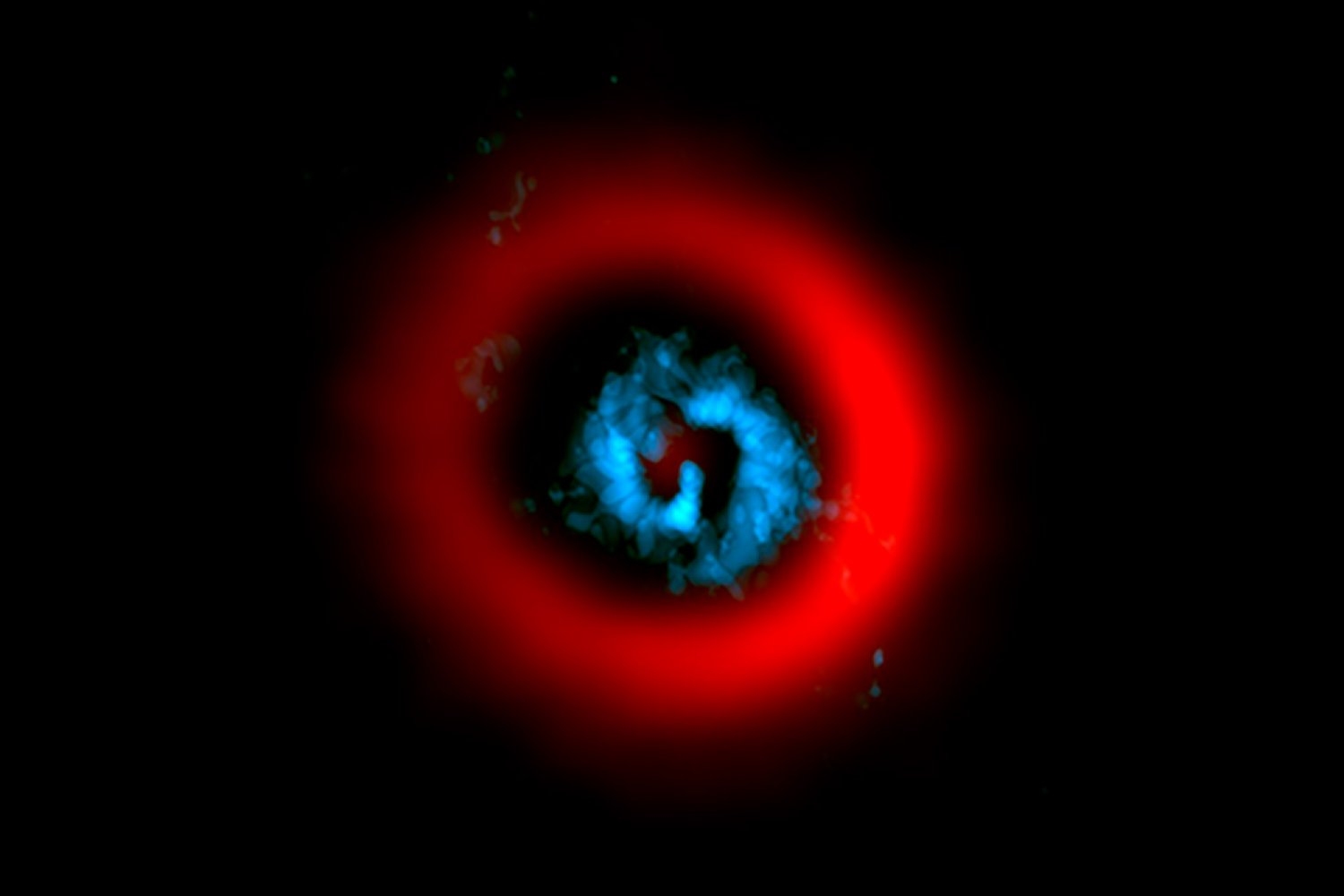

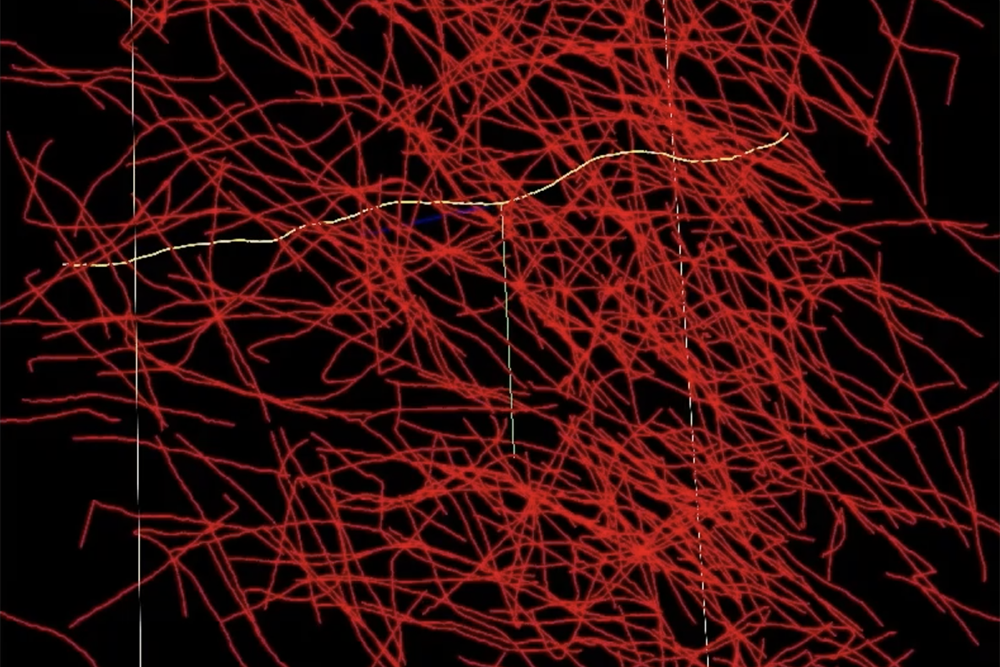

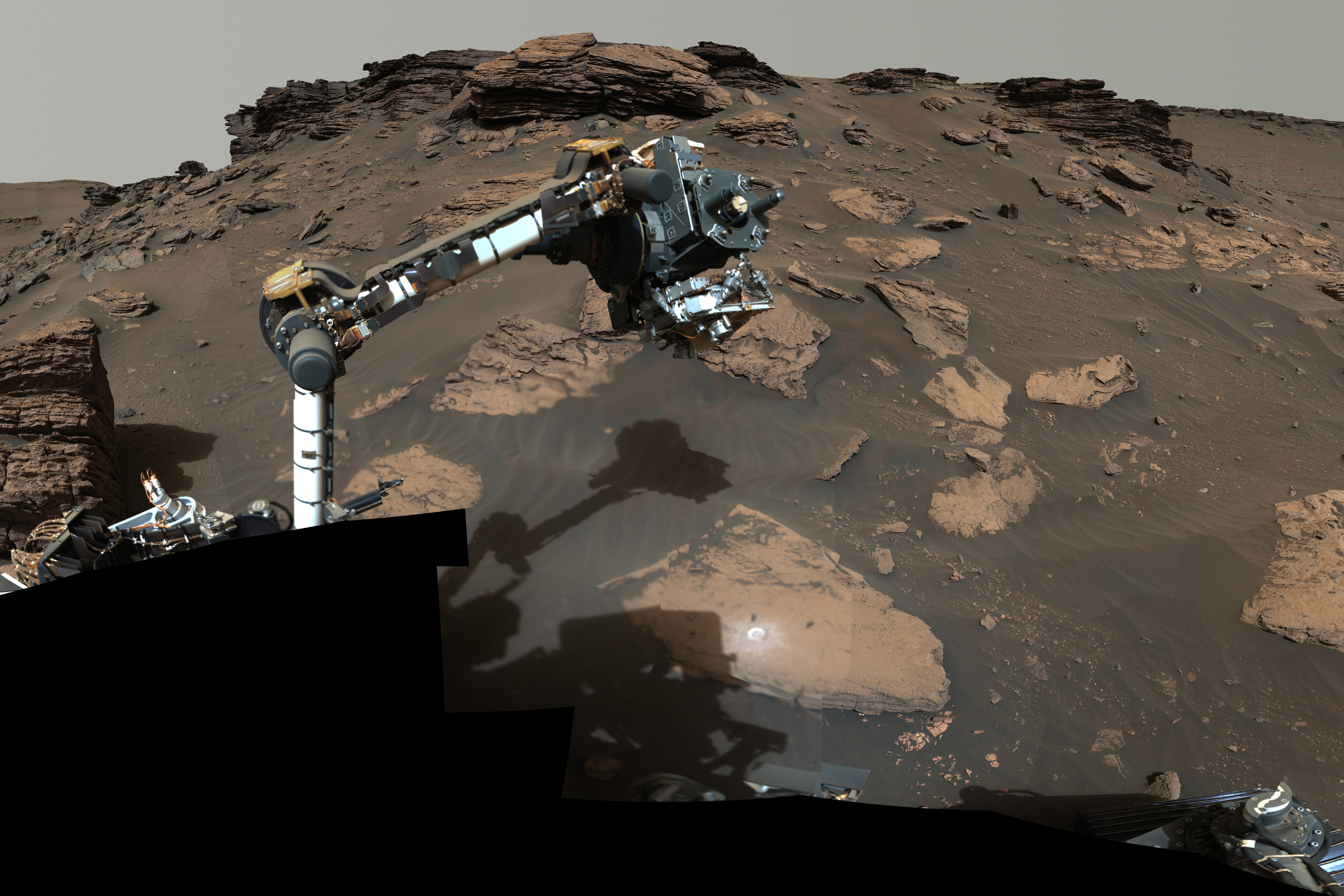

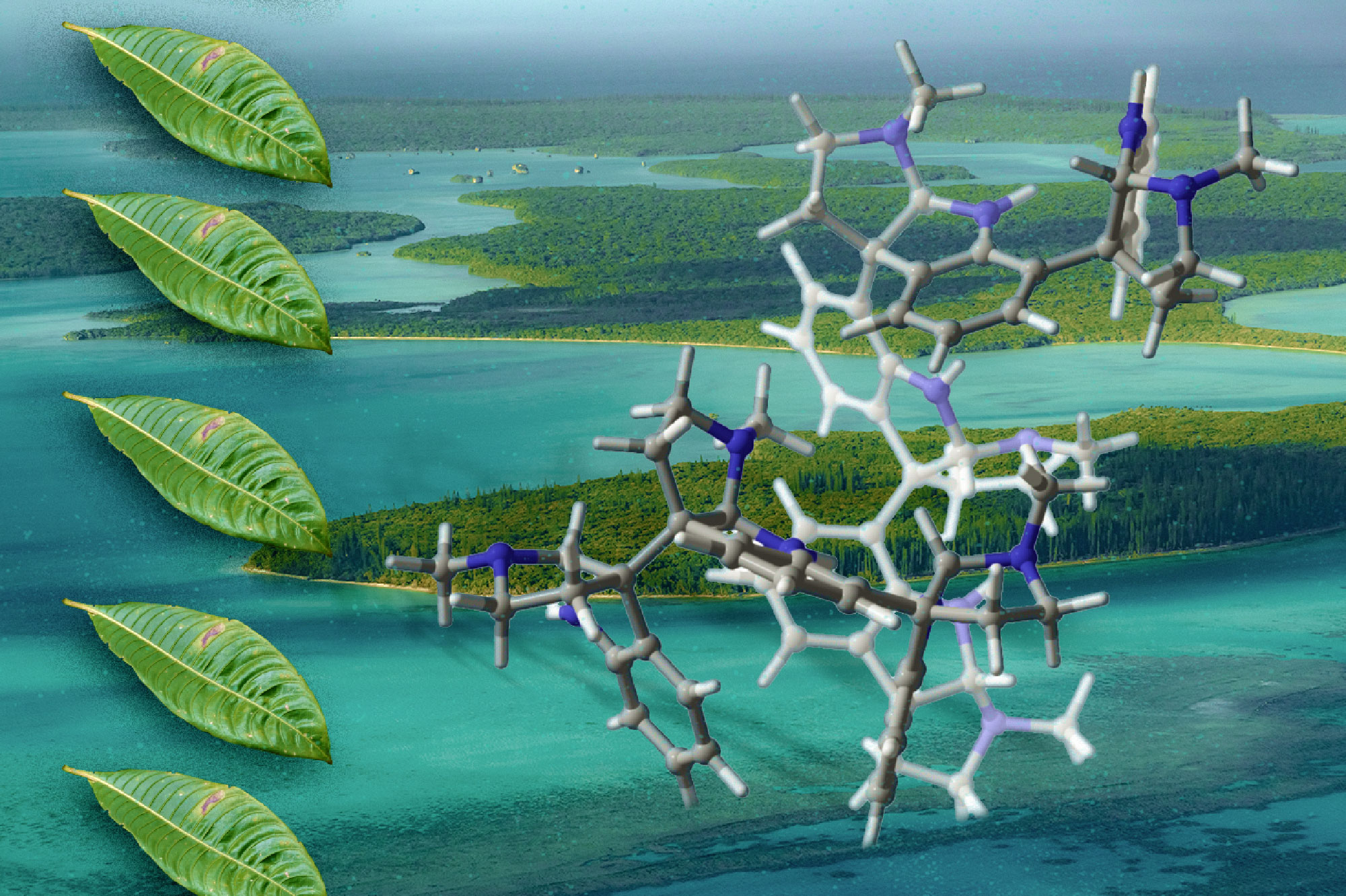

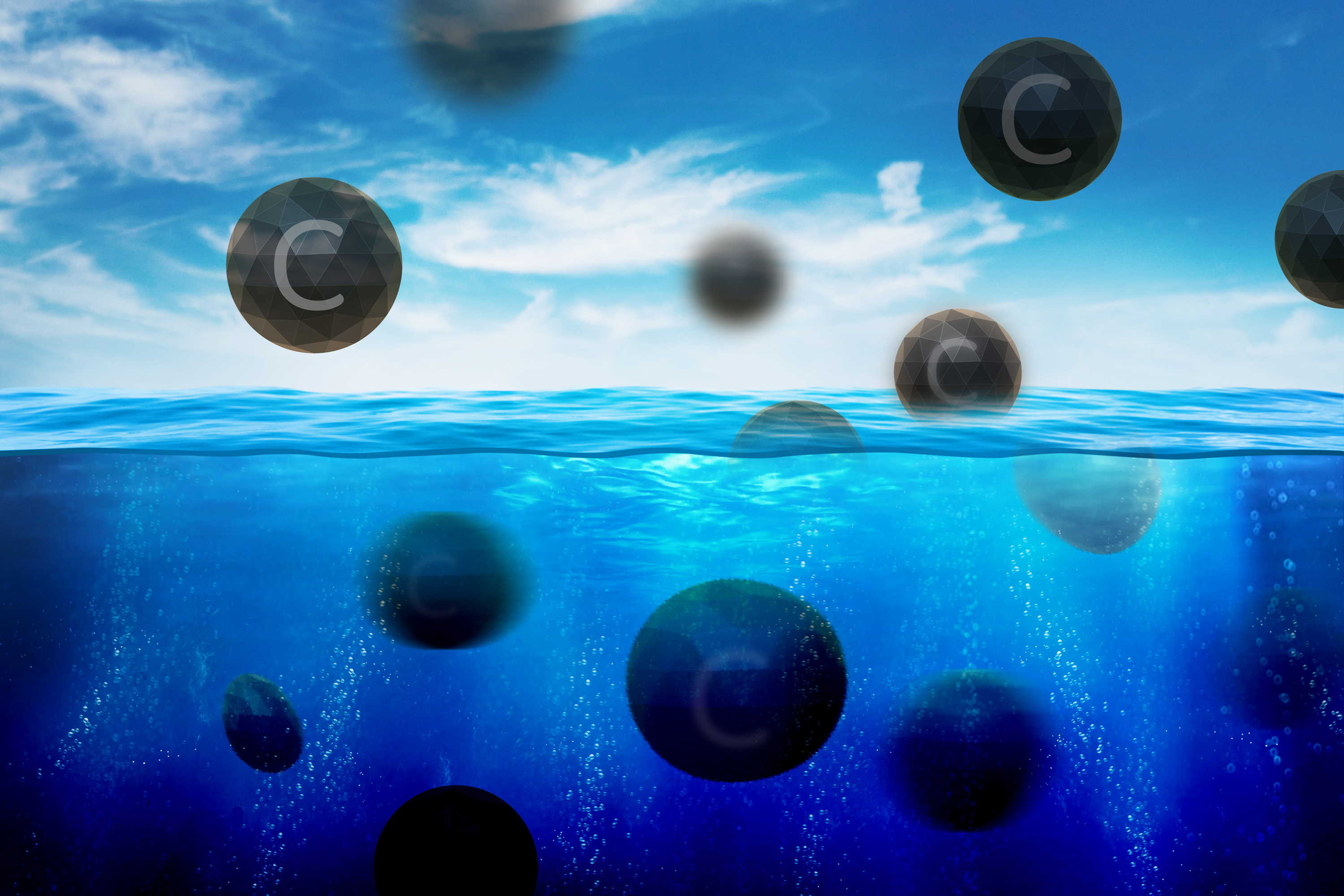

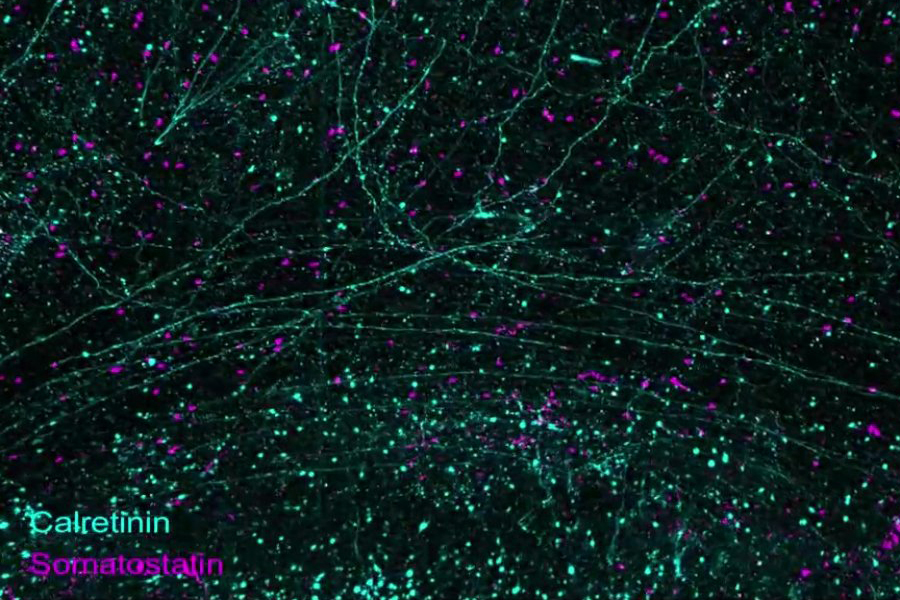

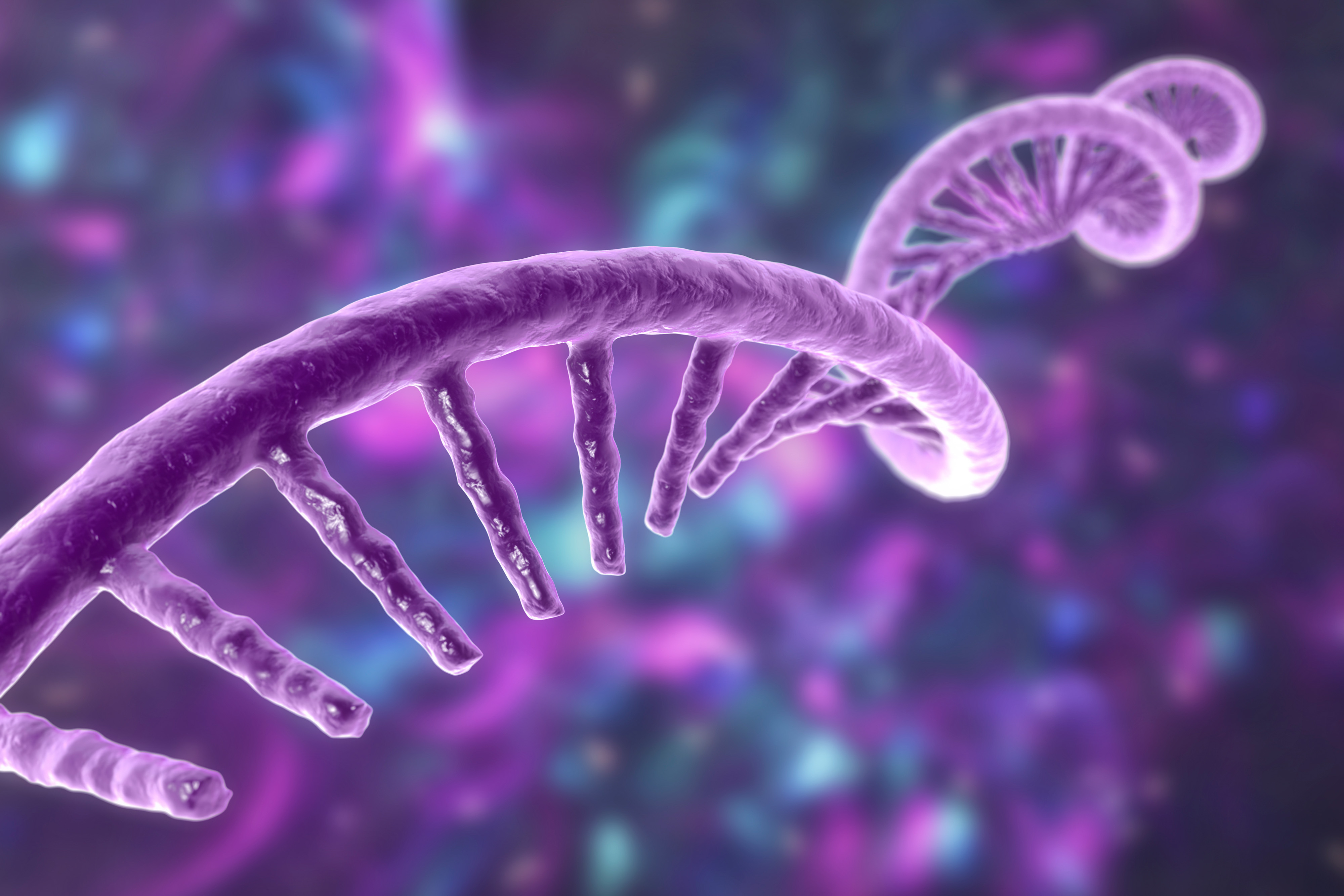

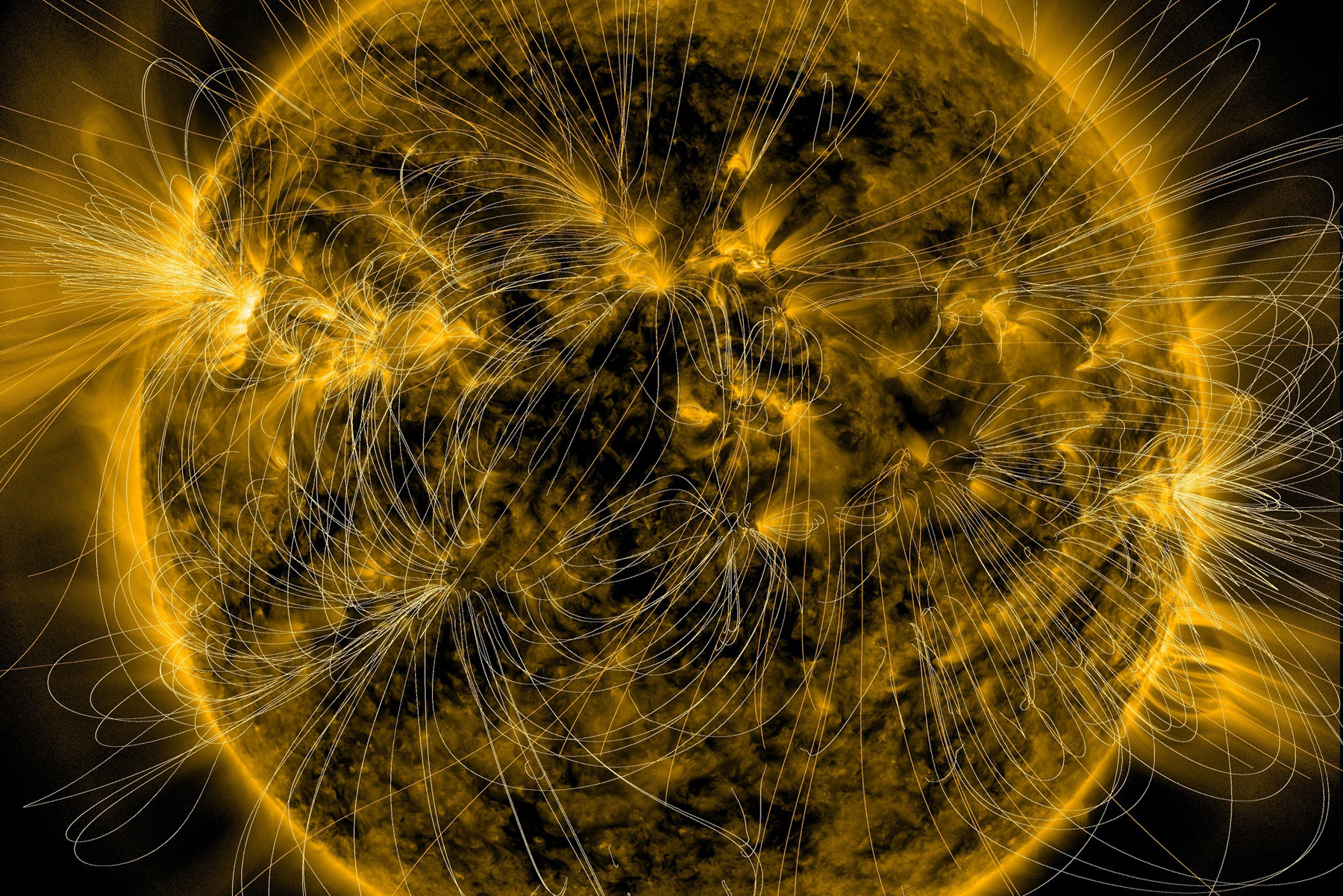

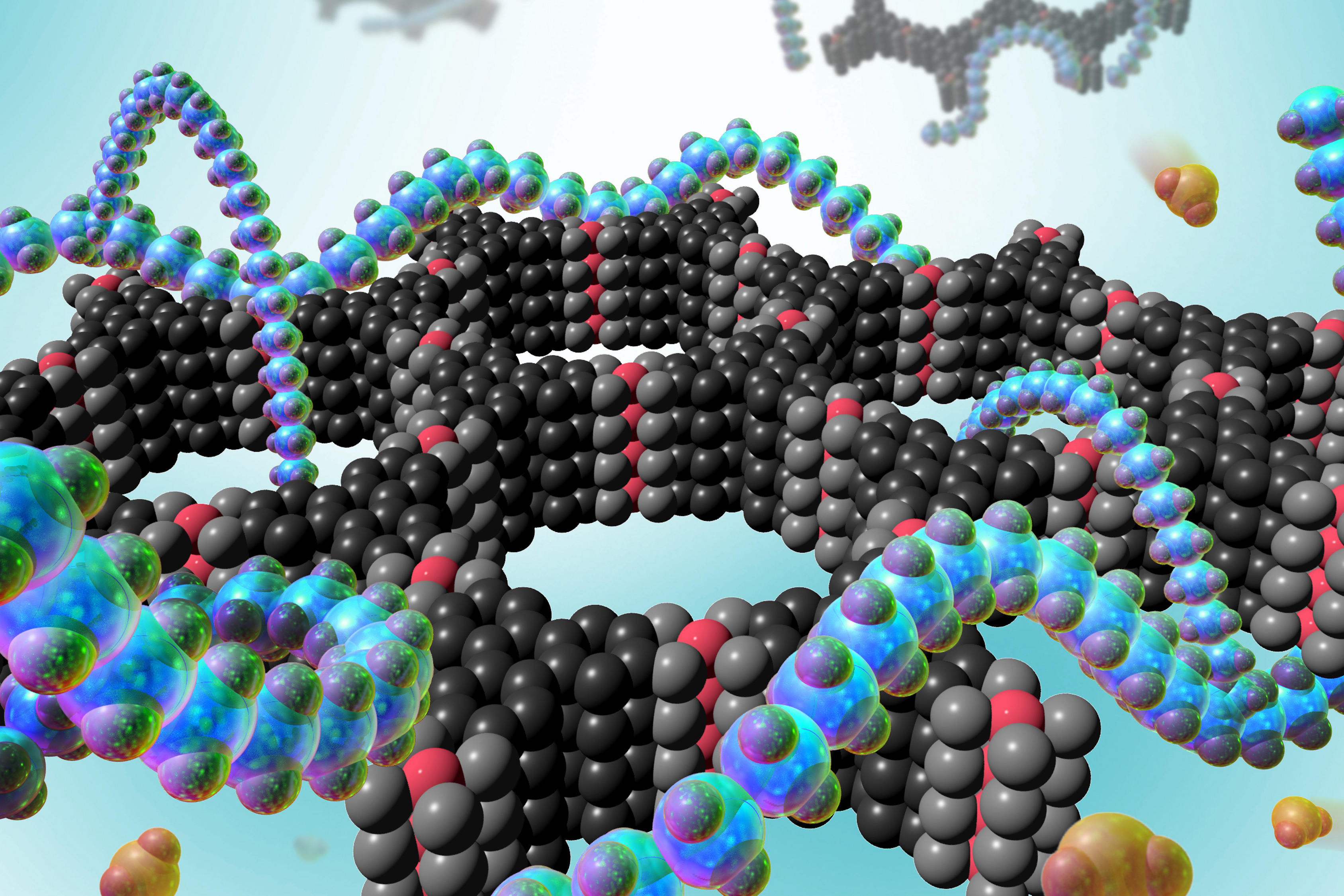

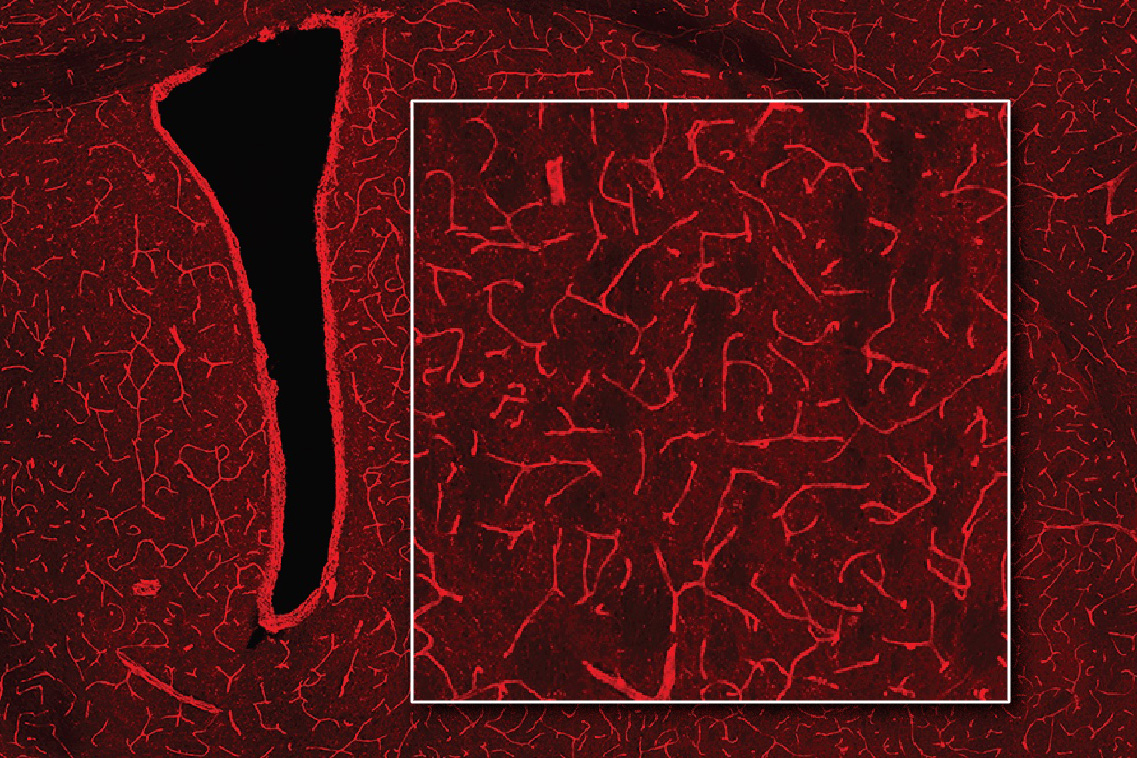

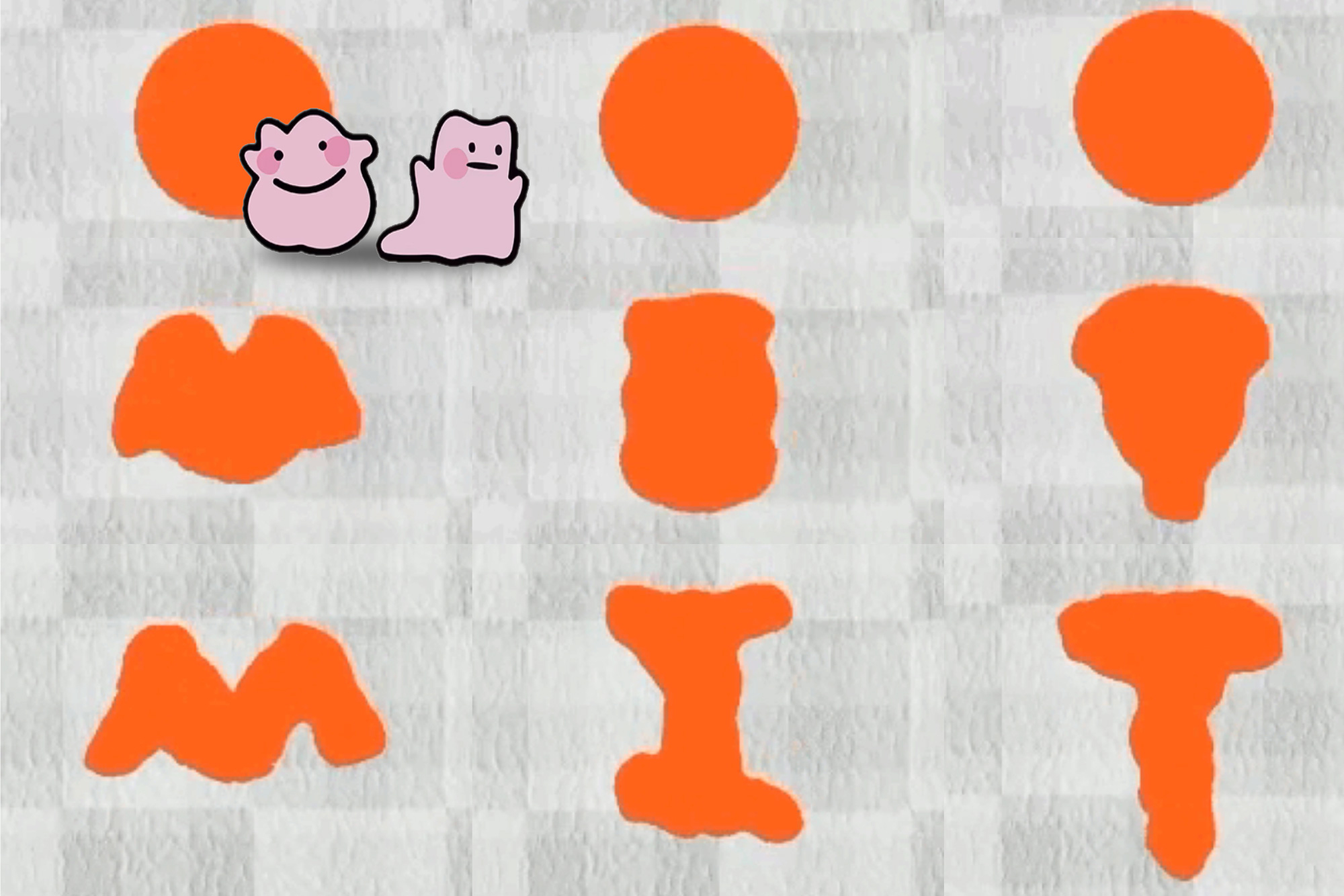

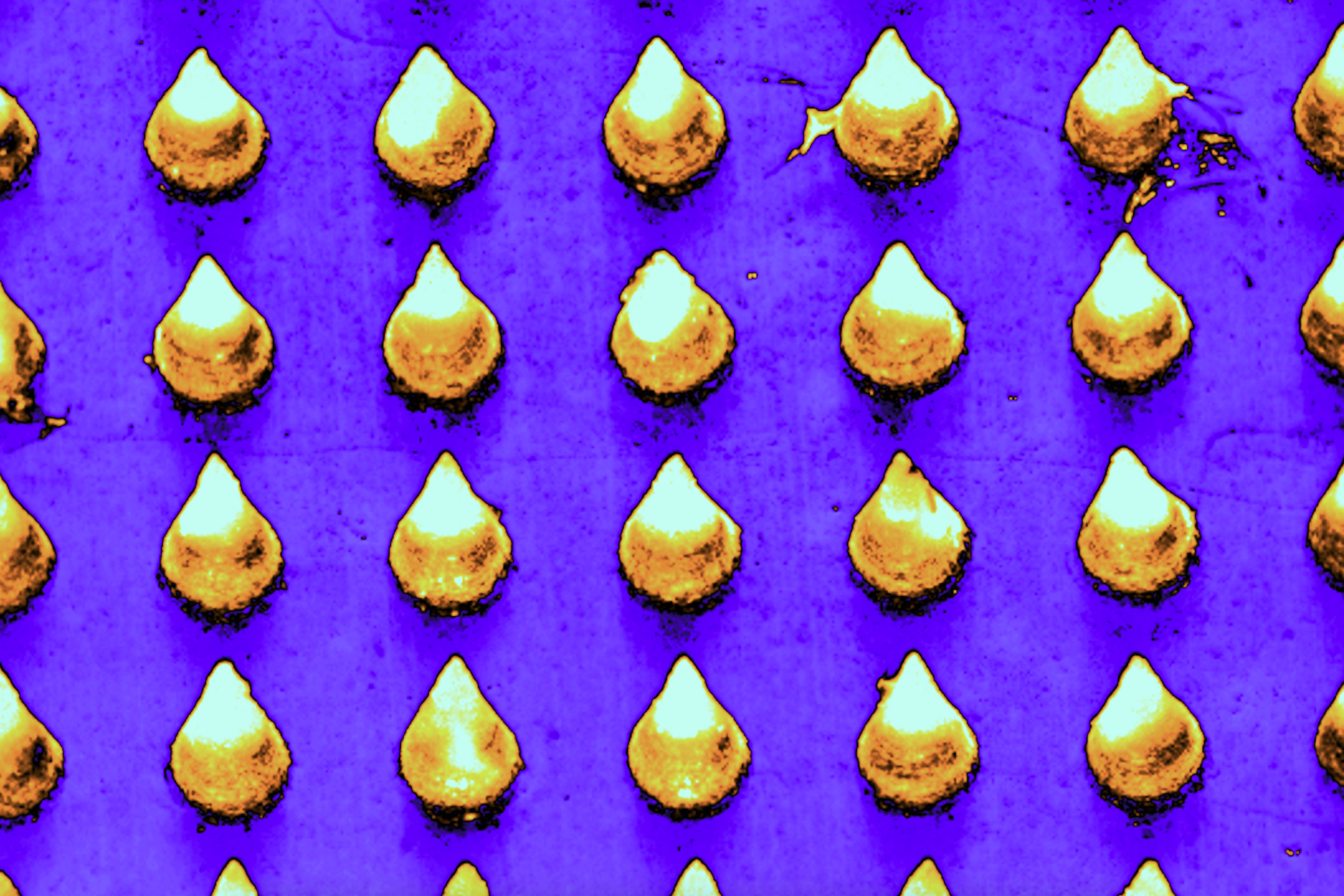

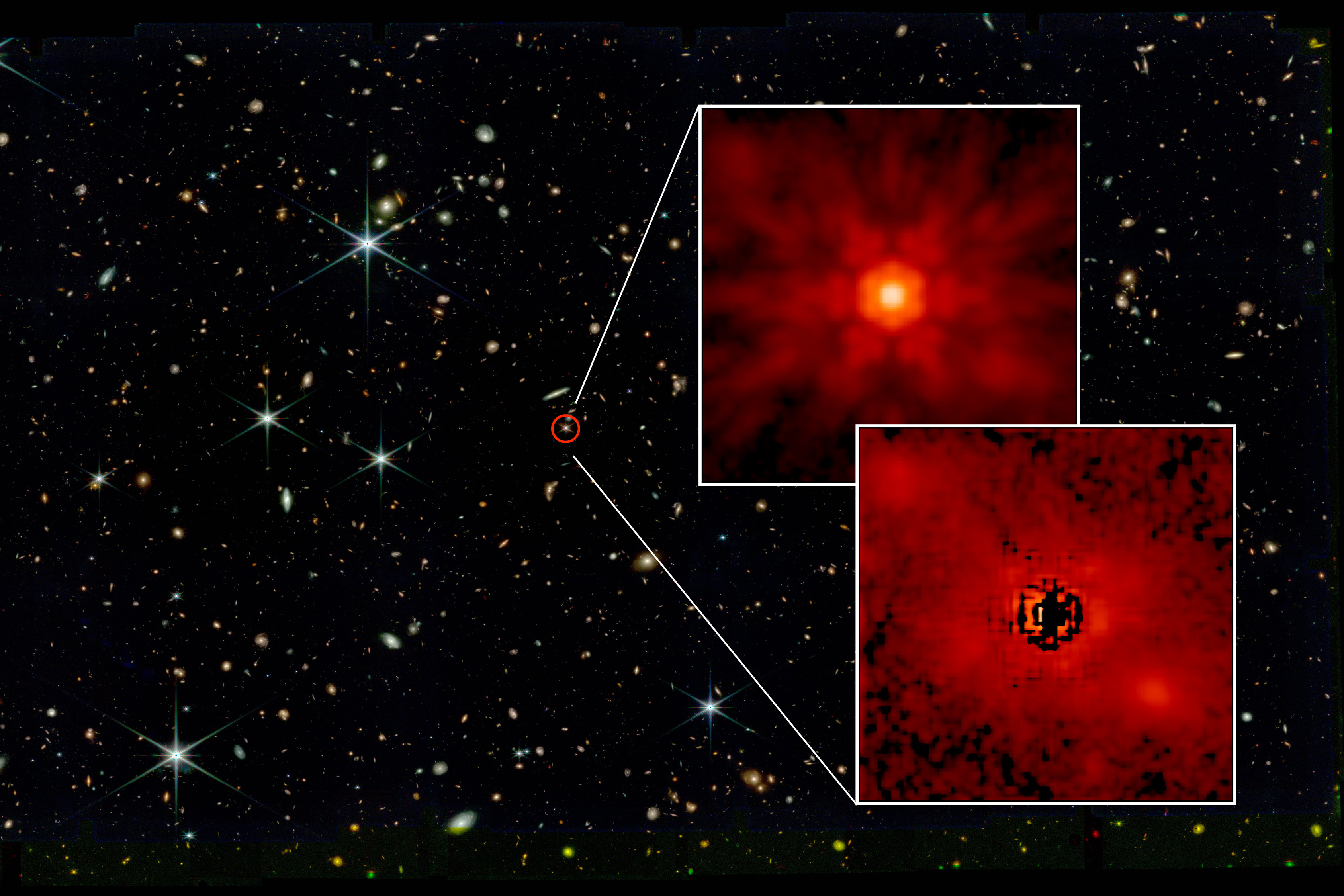

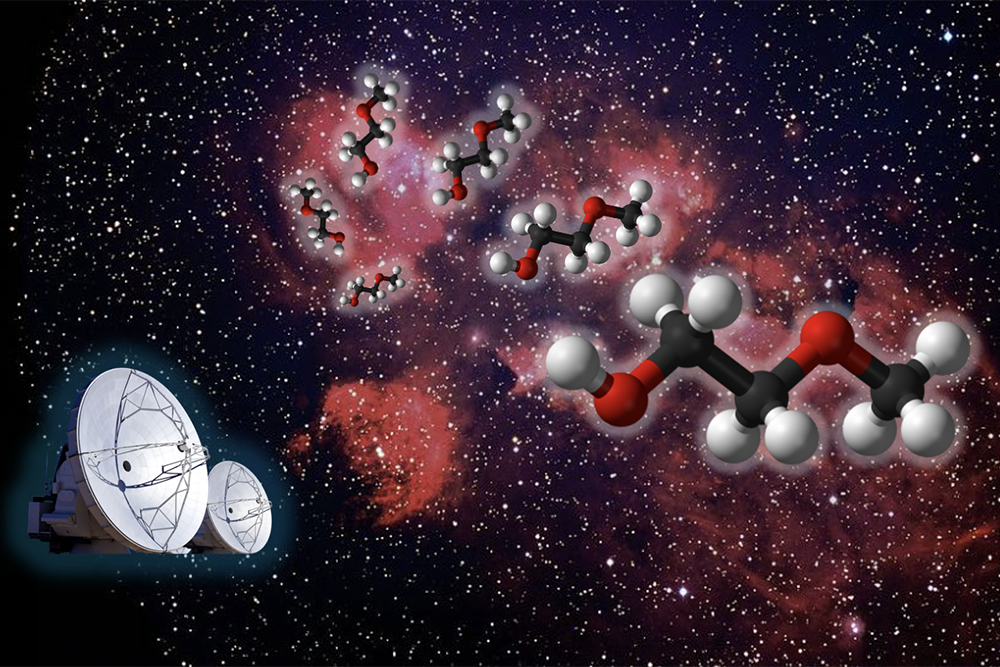

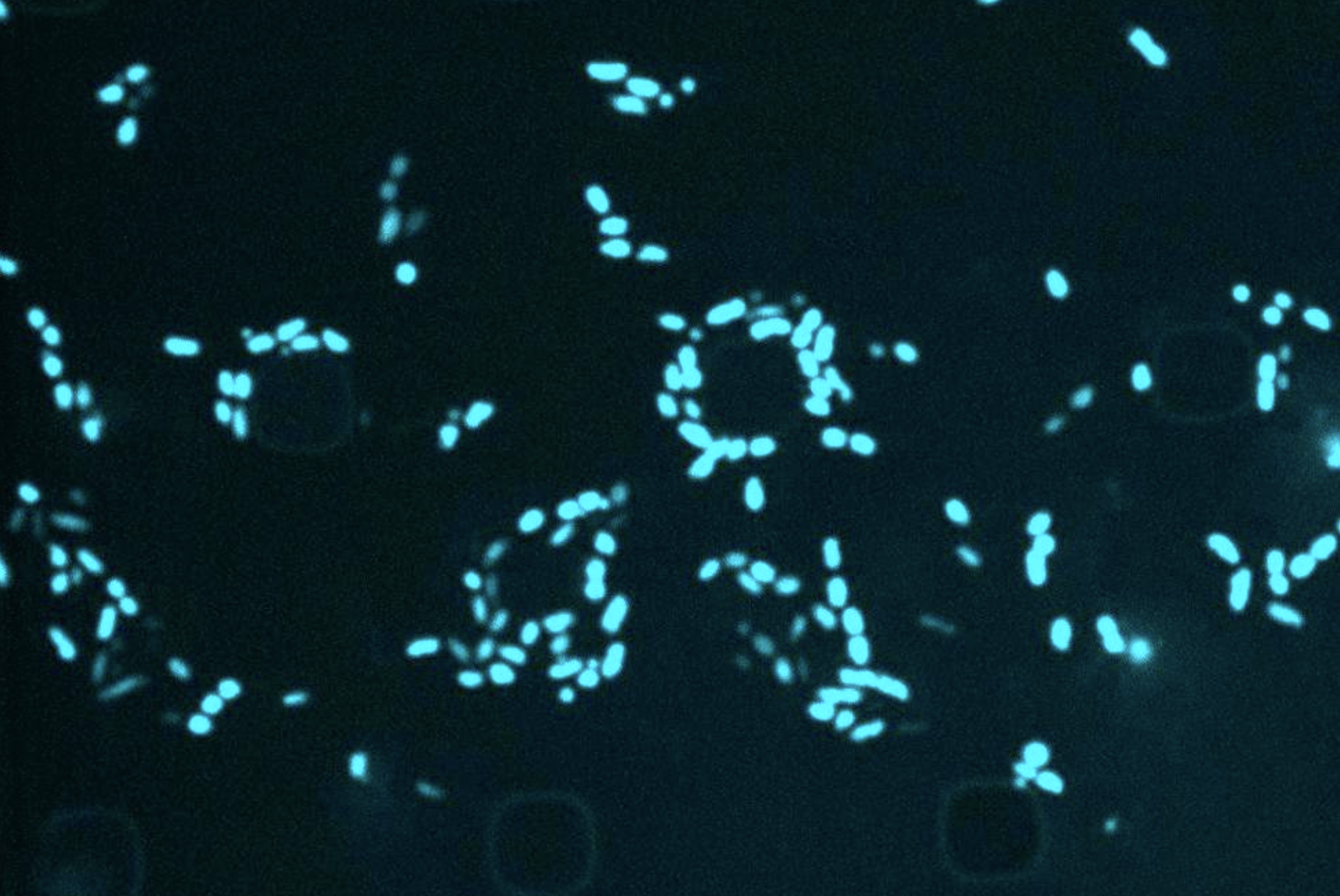

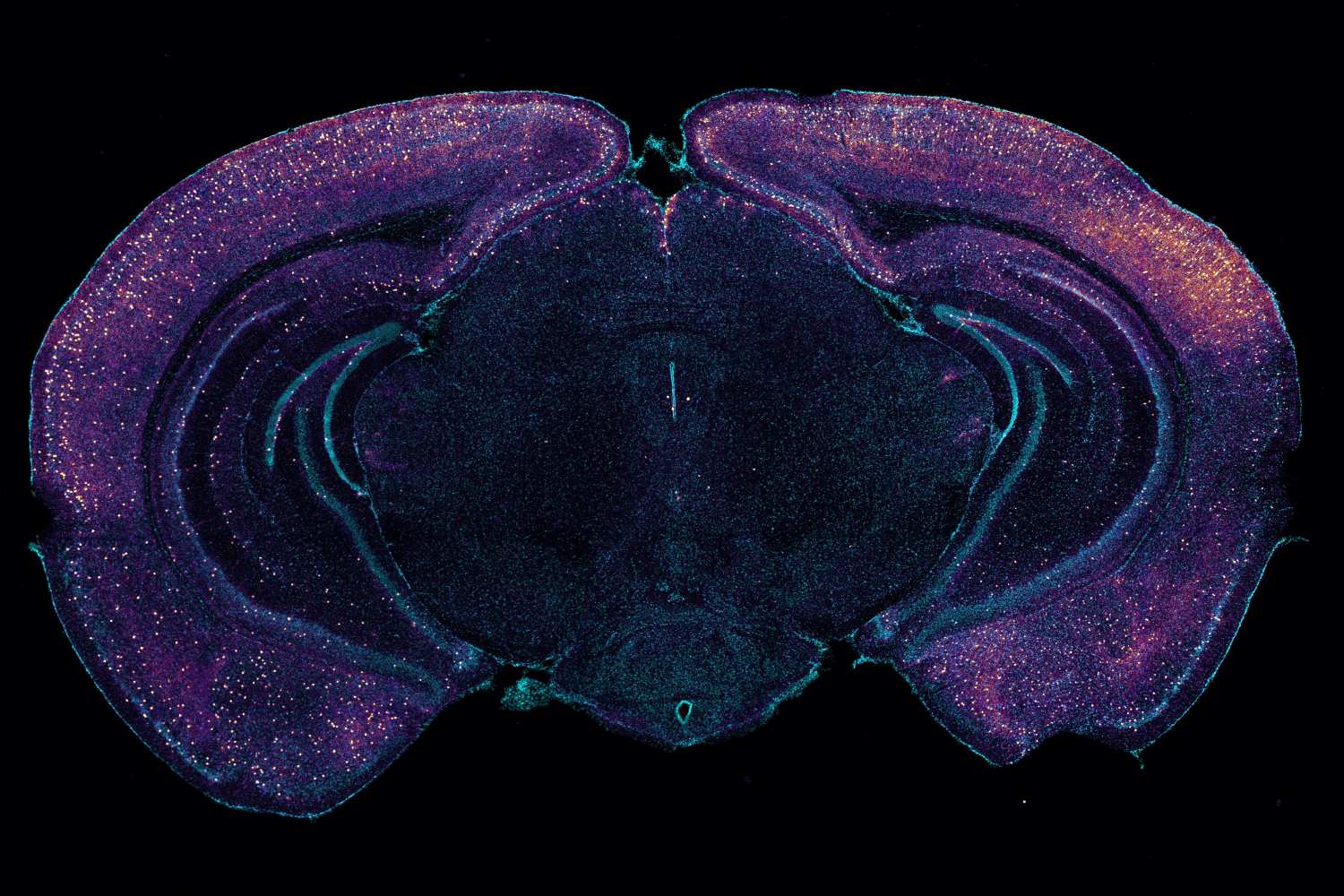

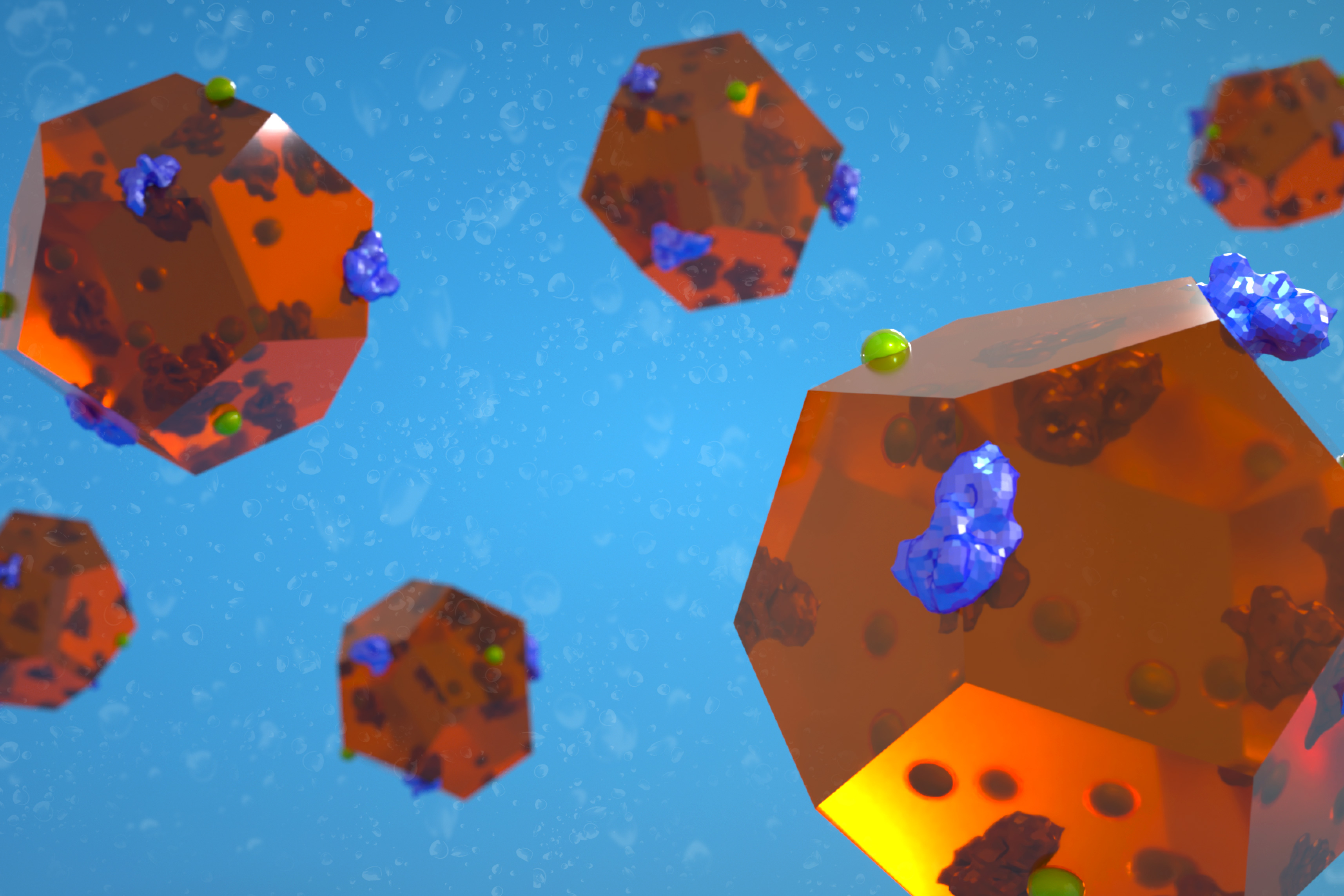

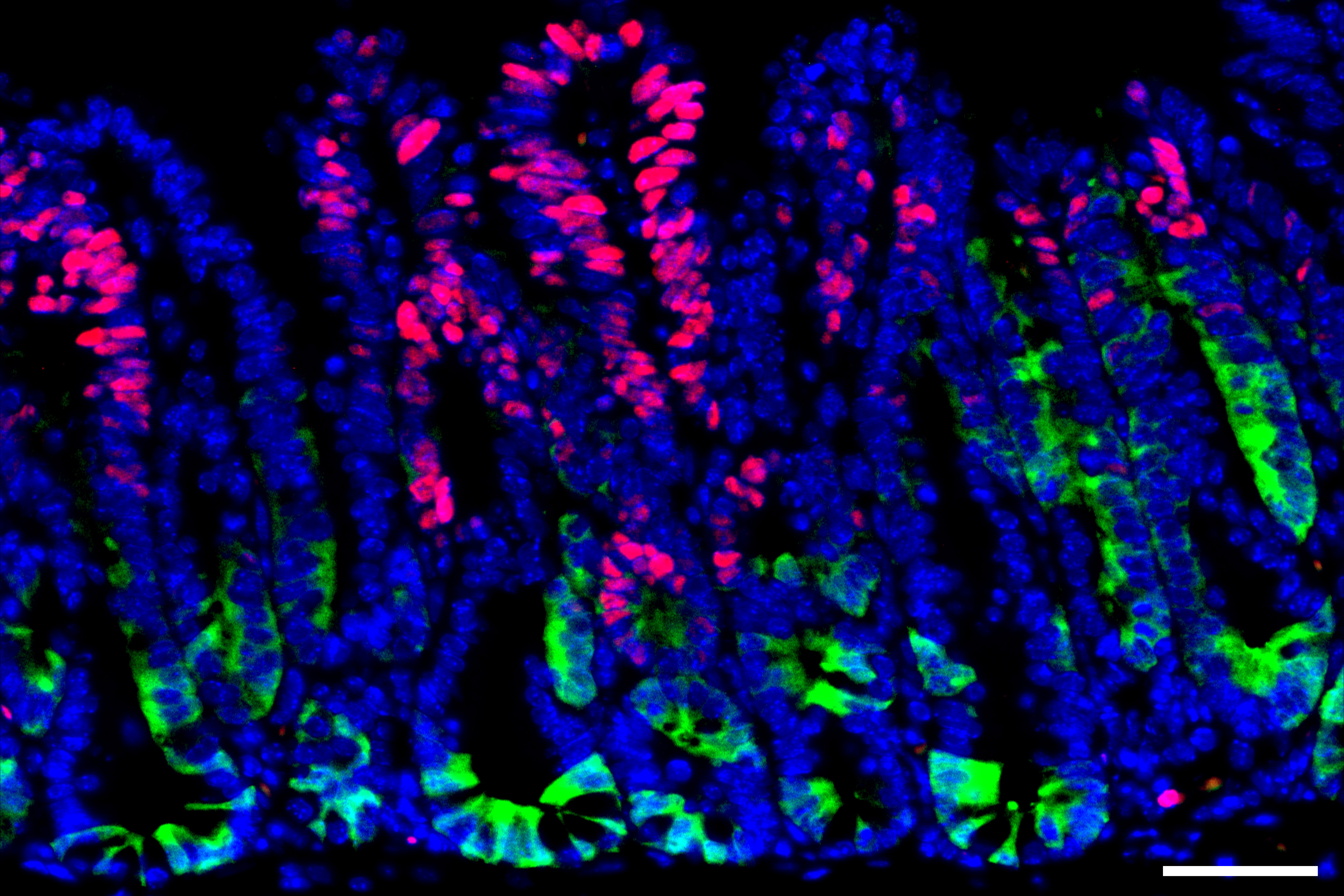

One of the hardest-working organisms in the ocean is the tiny, emerald-tinged Prochlorococcus marinus. These single-celled “picoplankton,” which are smaller than a human red blood cell, can be found in staggering numbers throughout the ocean’s surface waters, making Prochlorococcus the most abundant photosynthesizing organism on the planet. (Collectively, Prochlorococcus fix as much carbon as all the crops on land.) Scientists continue to find new ways that the little green microbe is involved in the ocean’s cycling and storage of carbon.

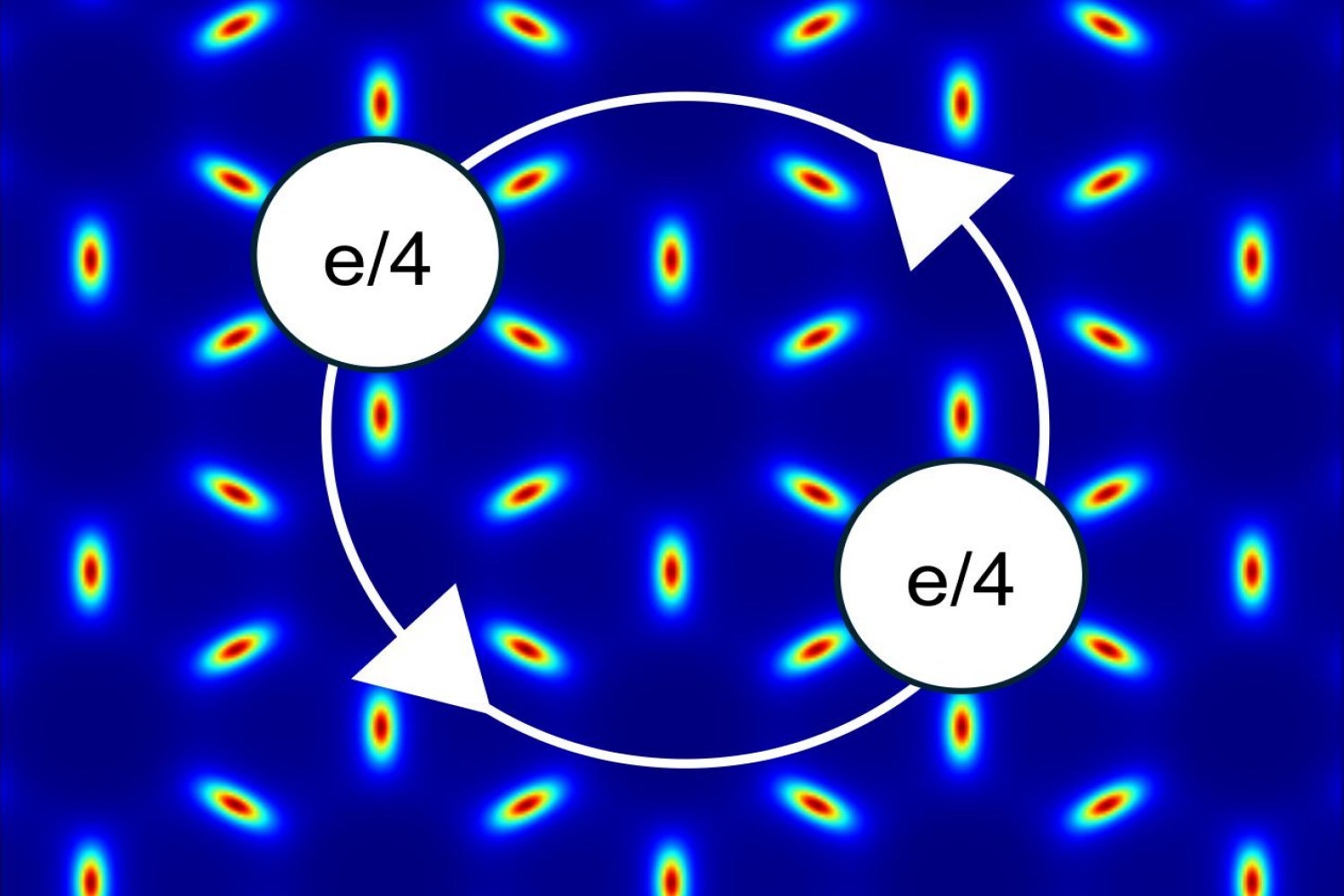

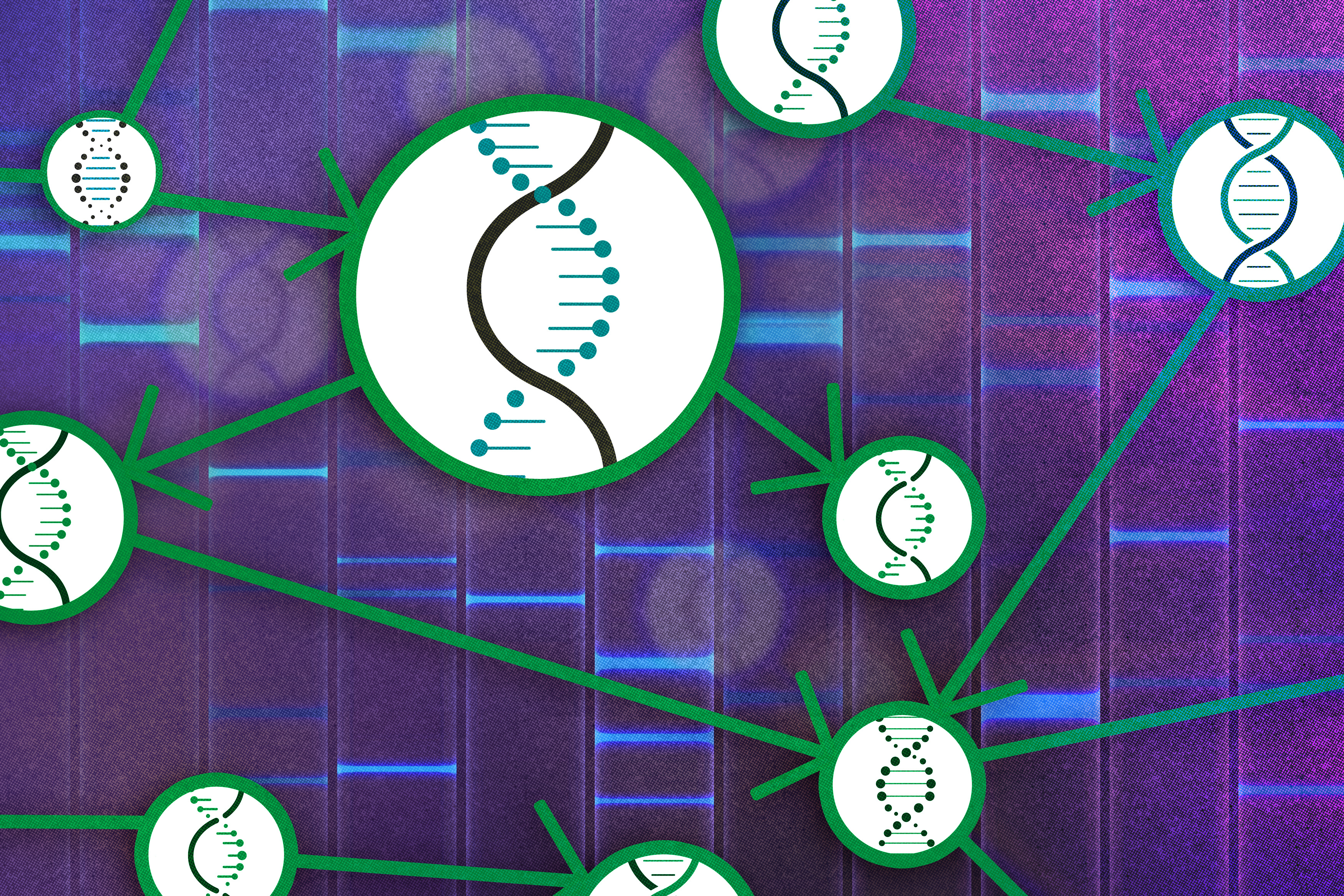

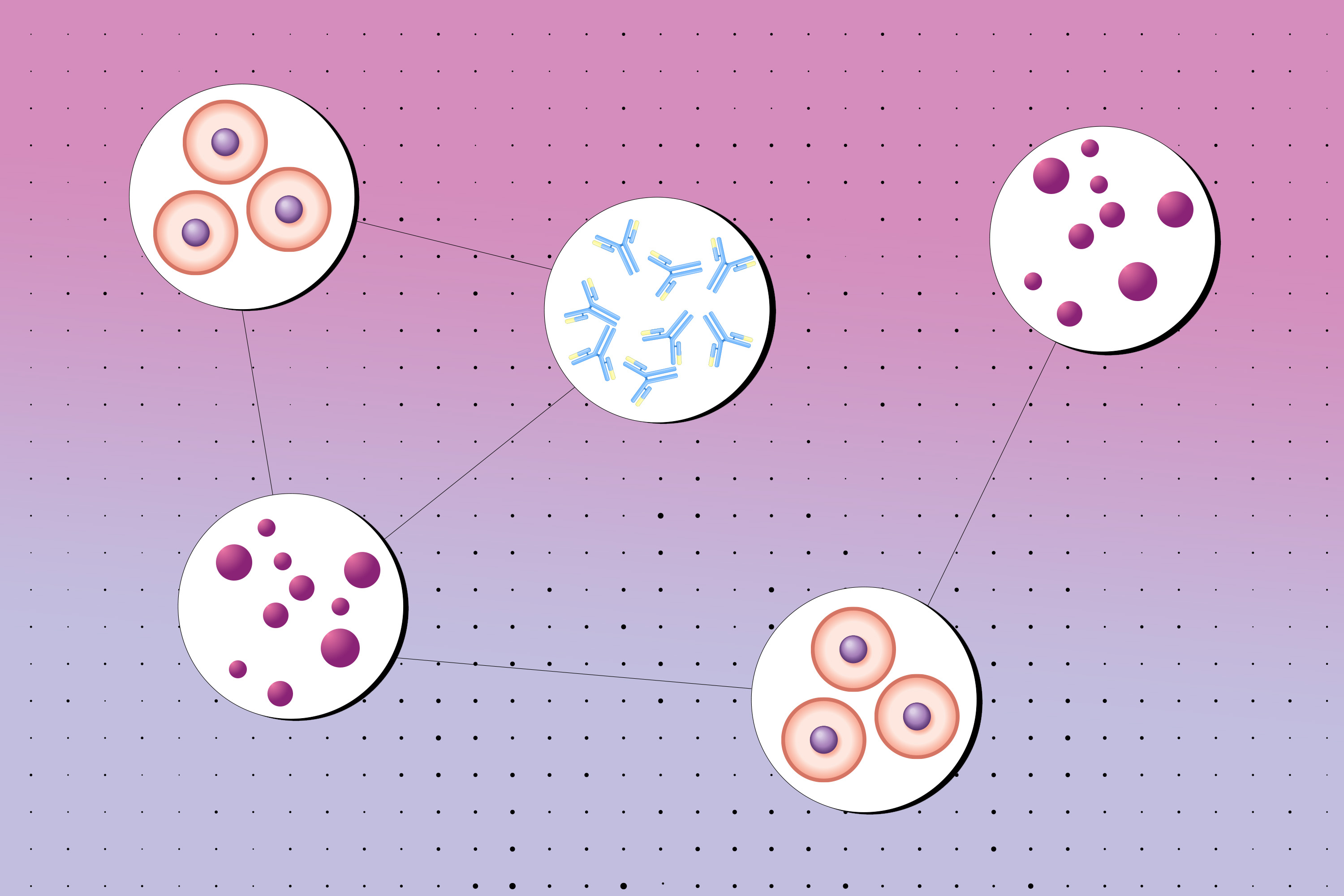

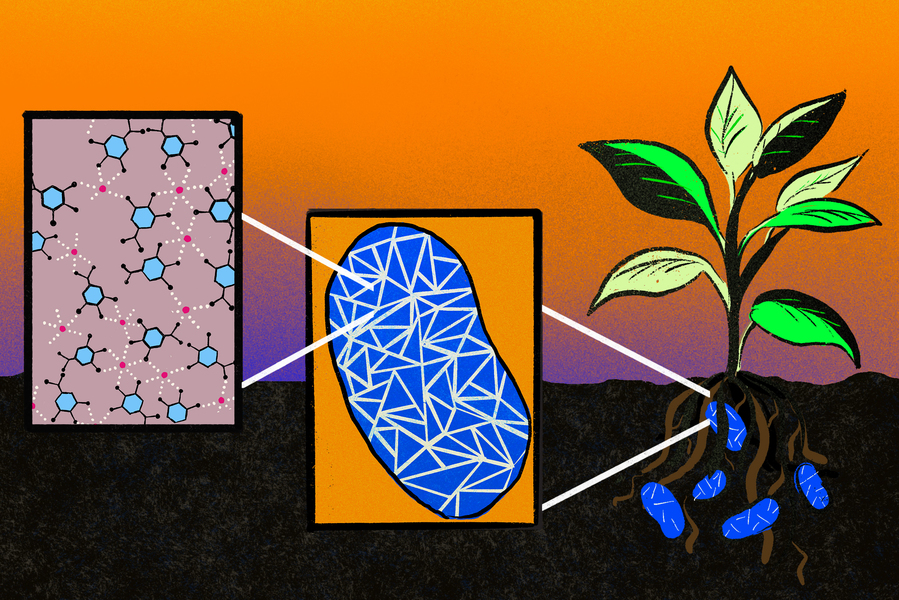

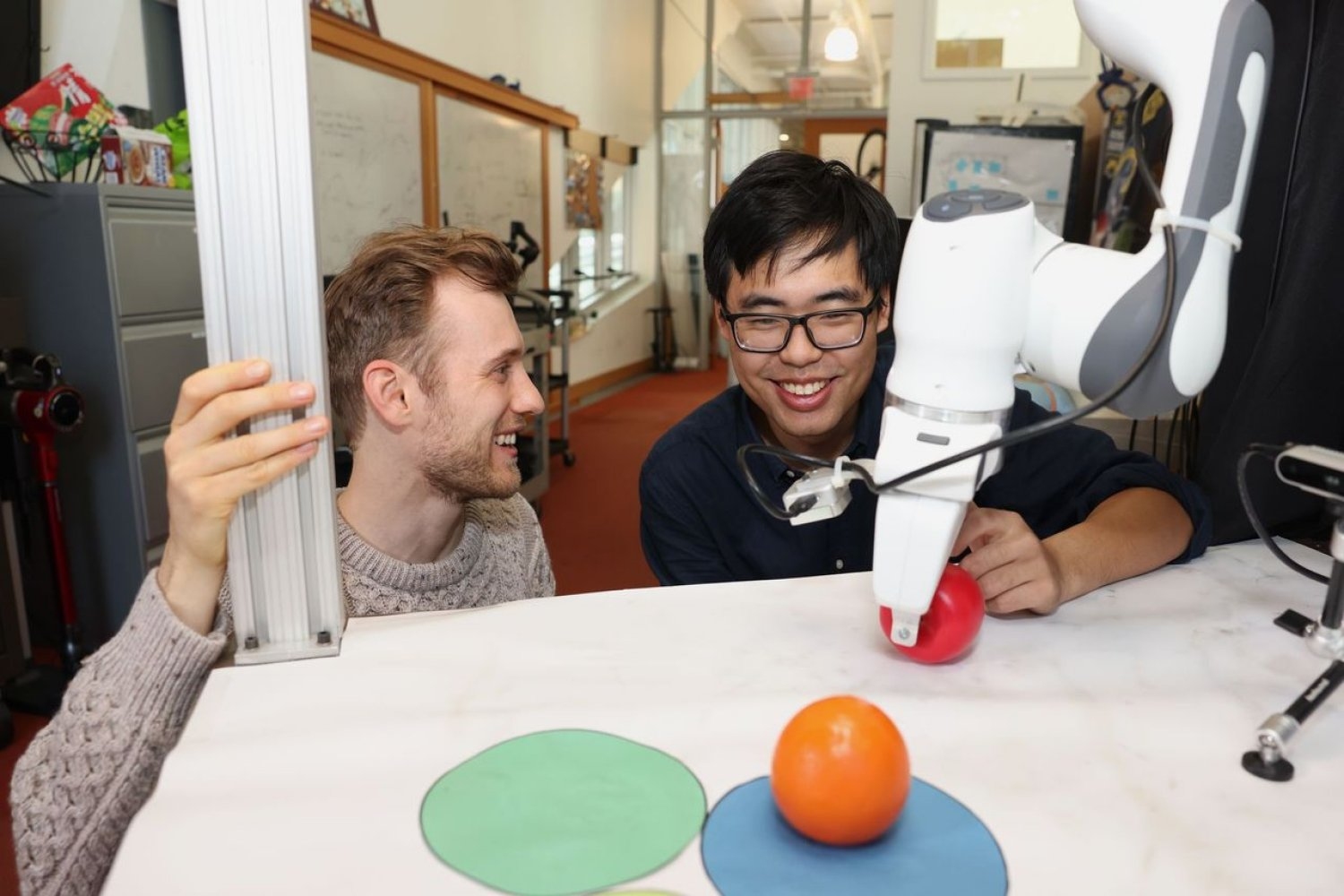

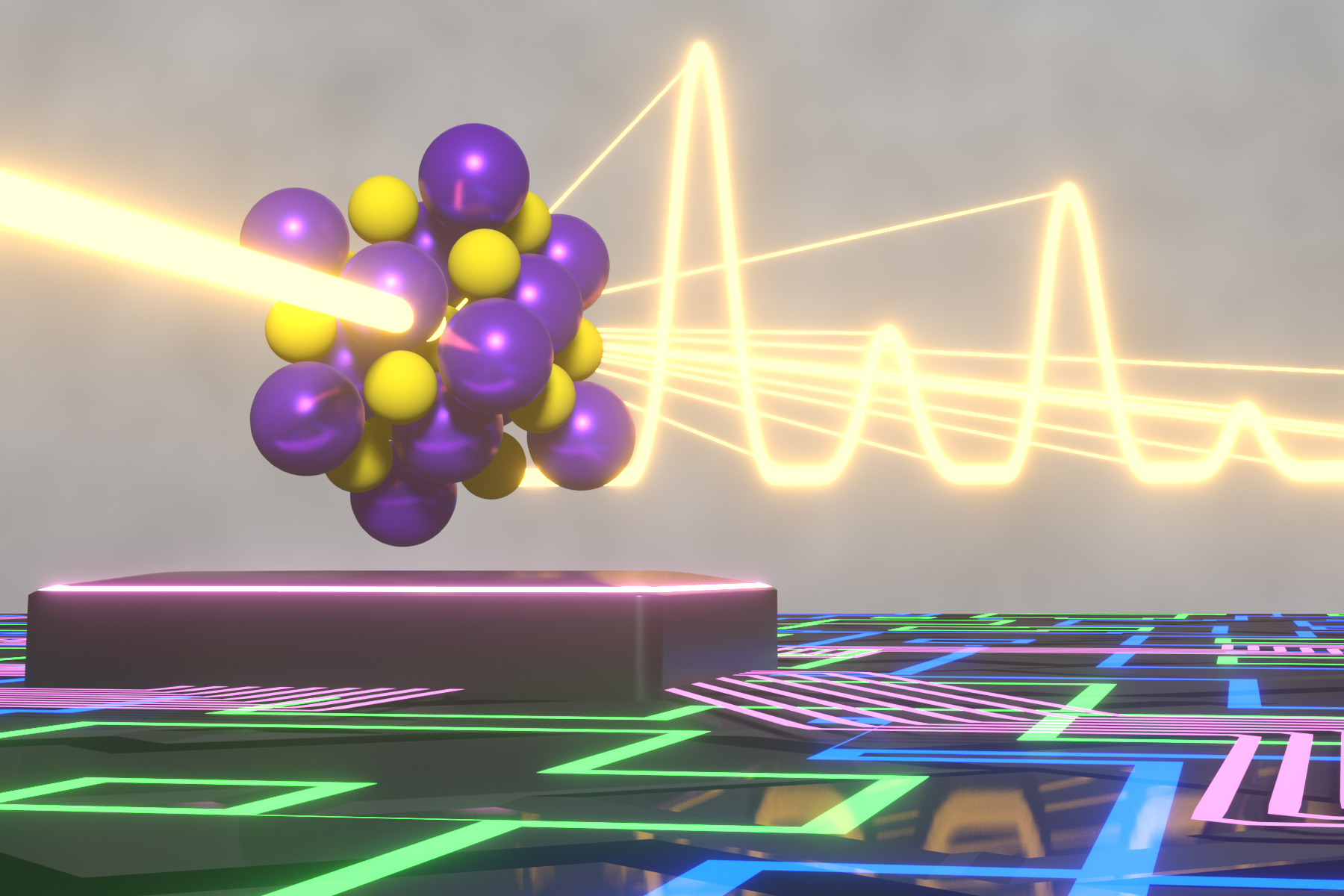

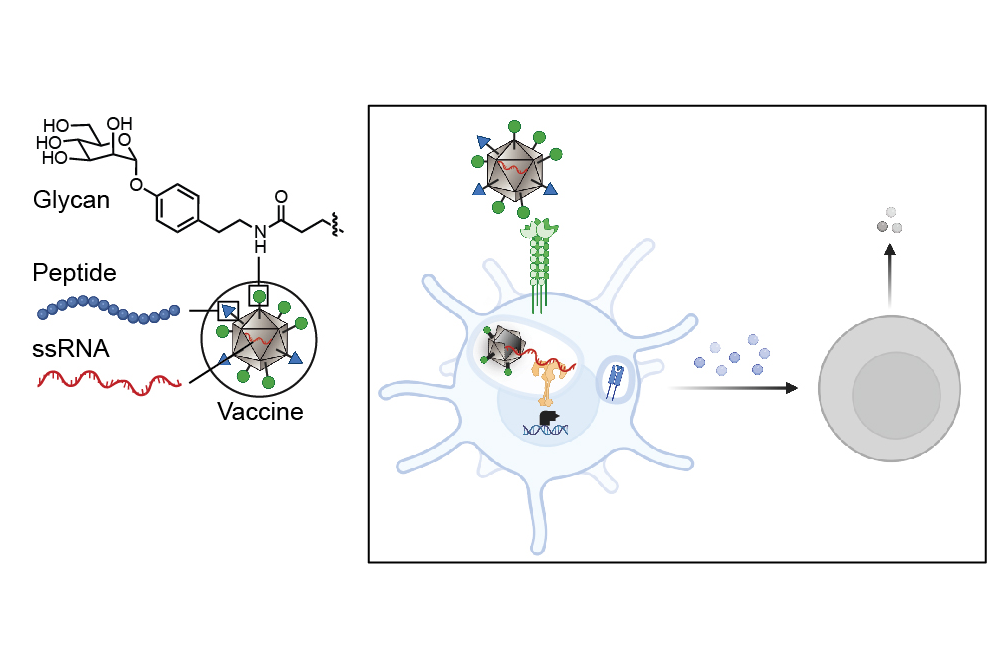

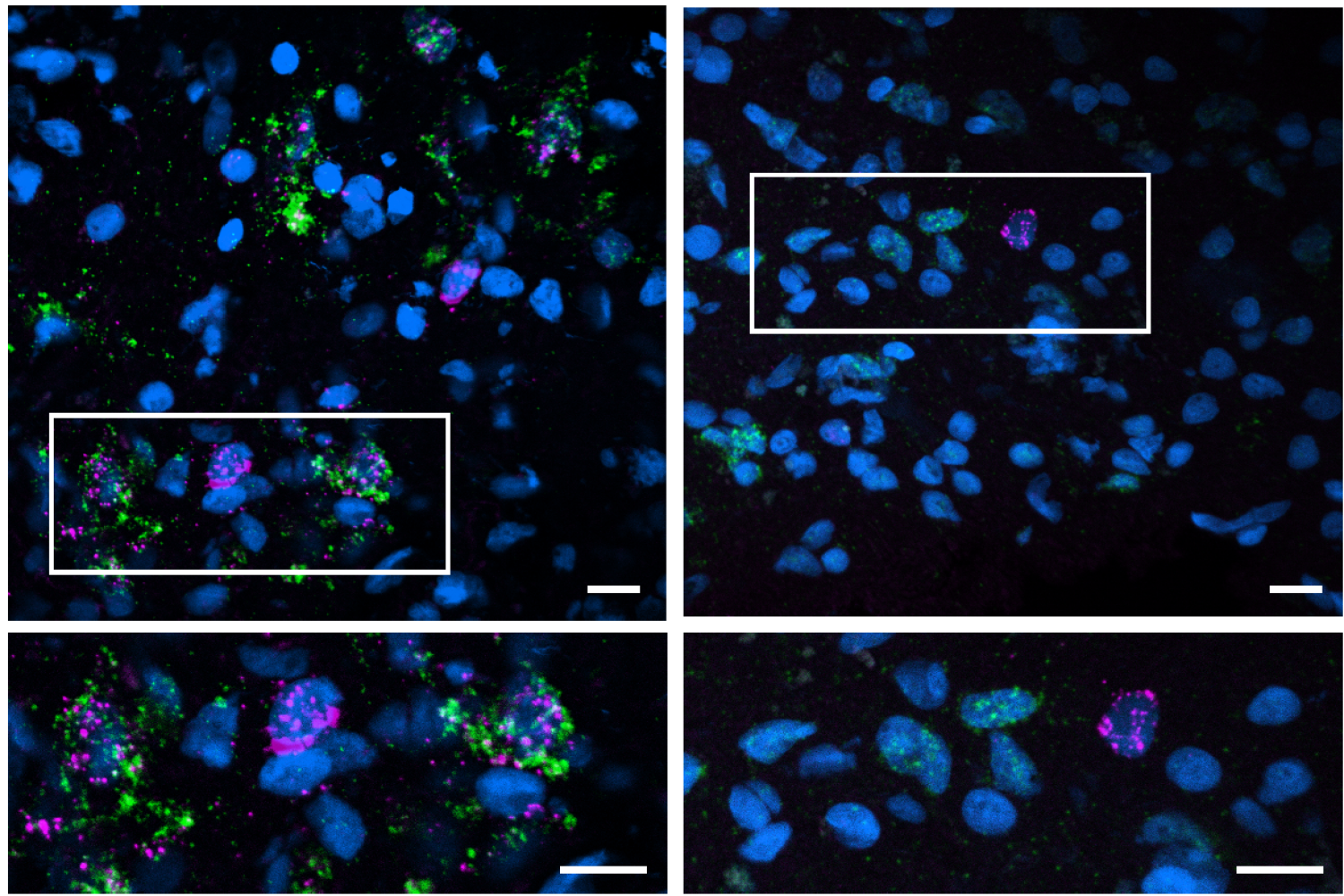

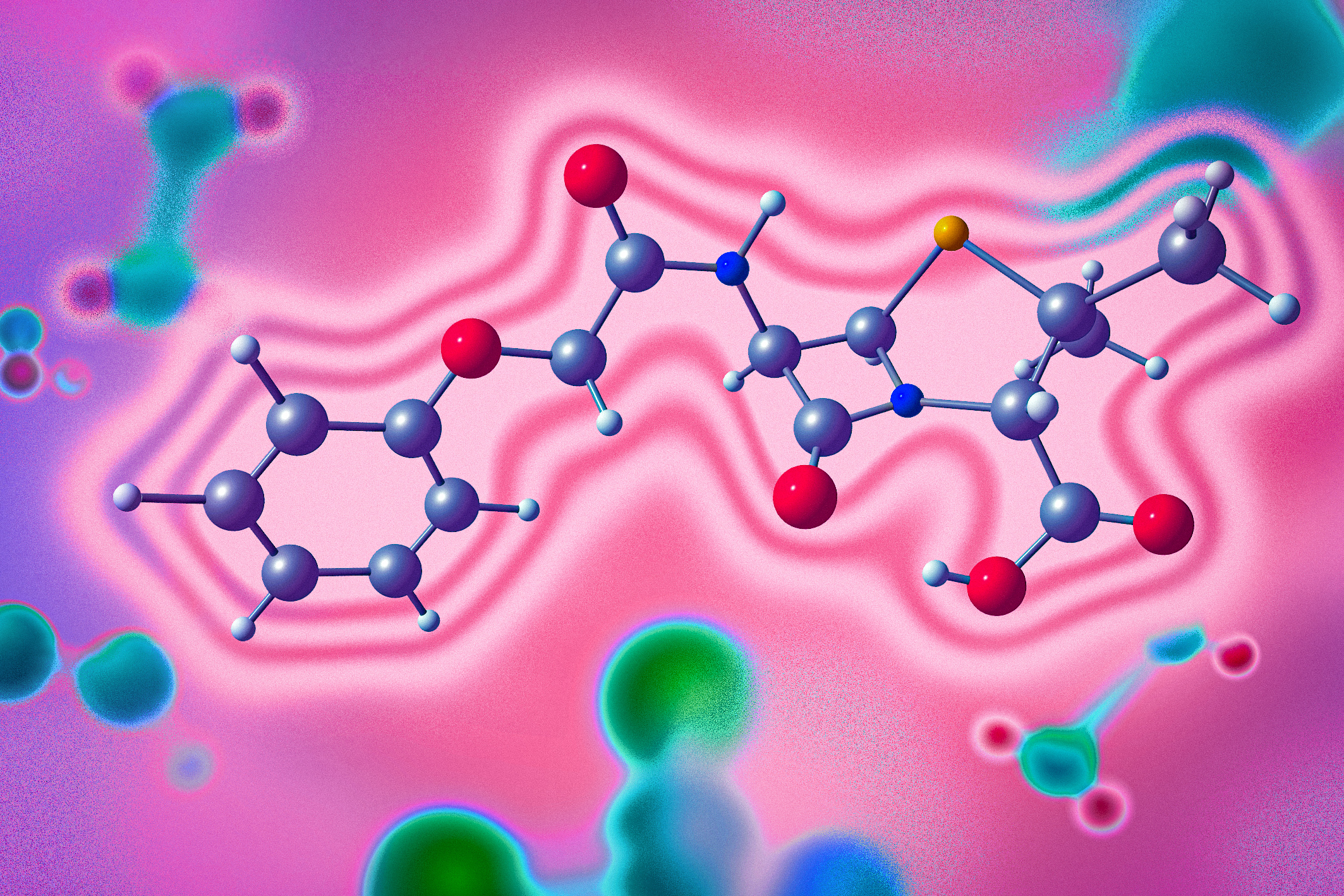

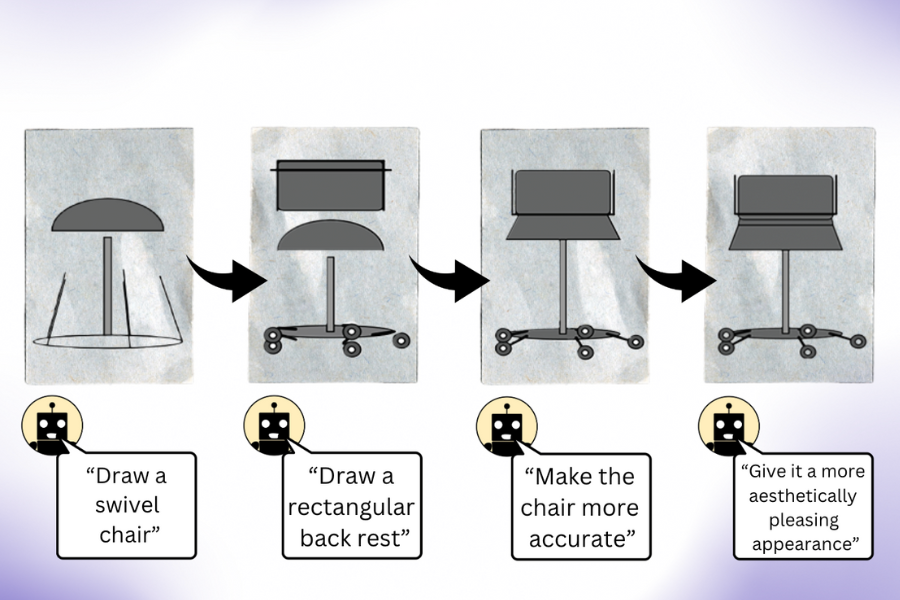

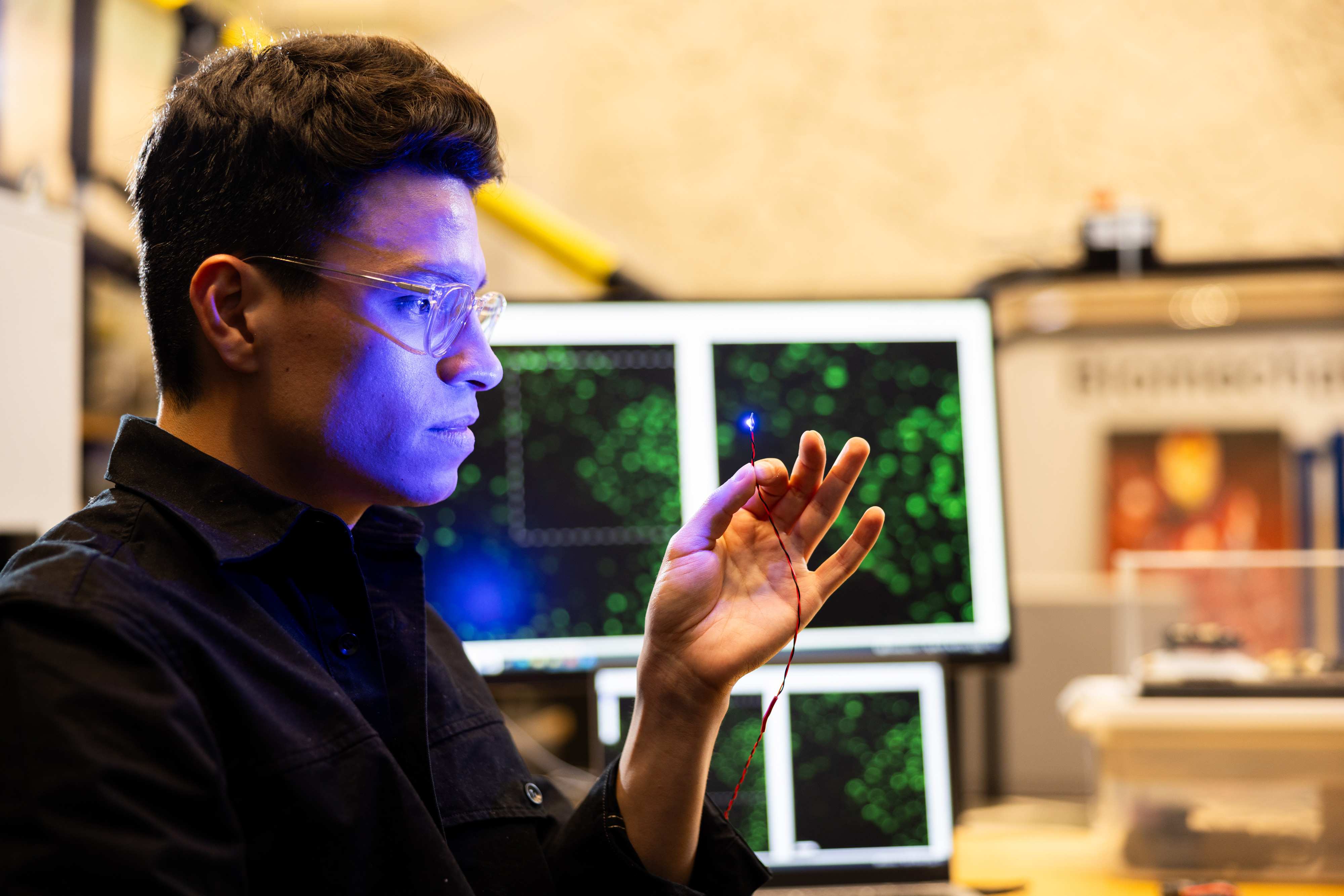

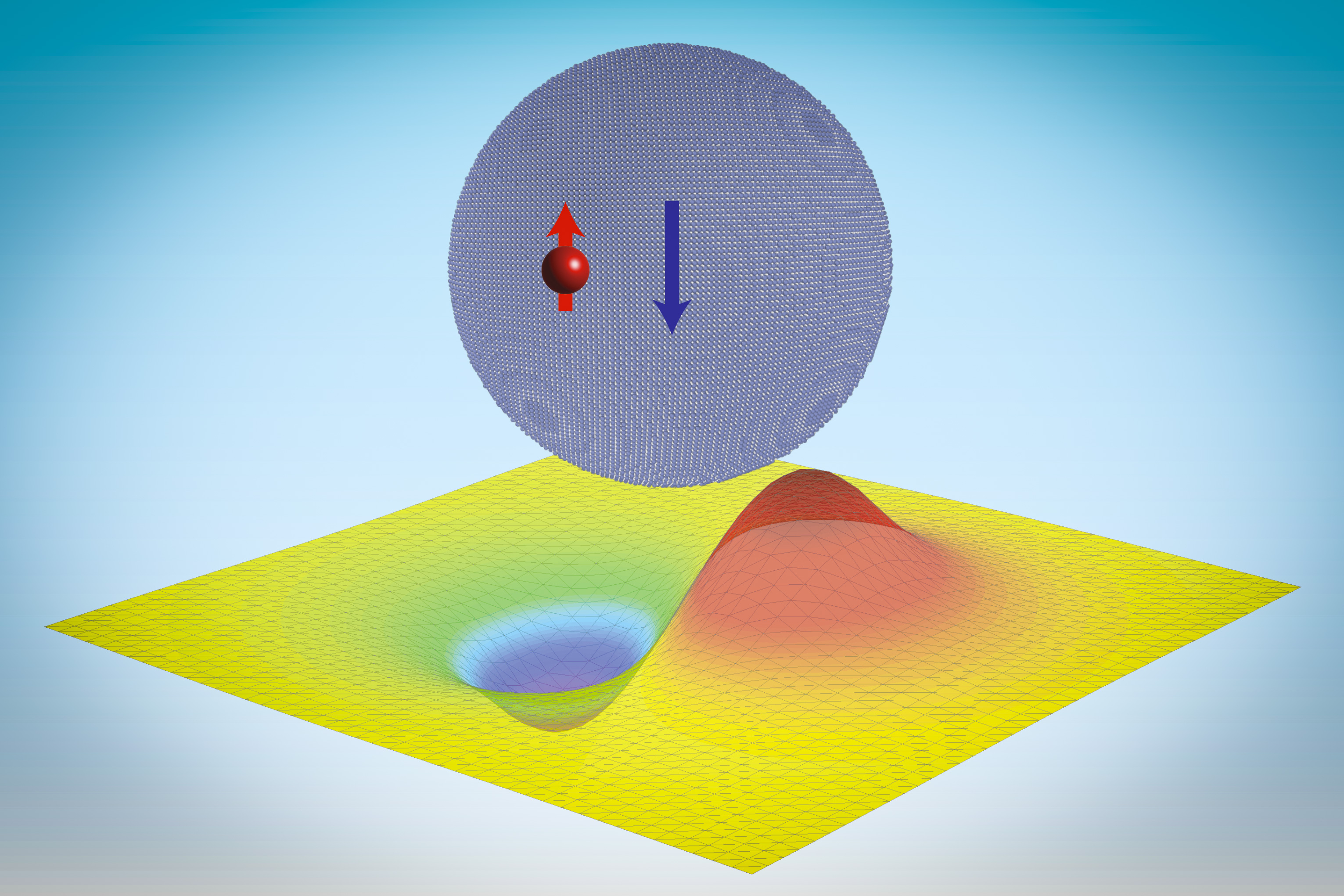

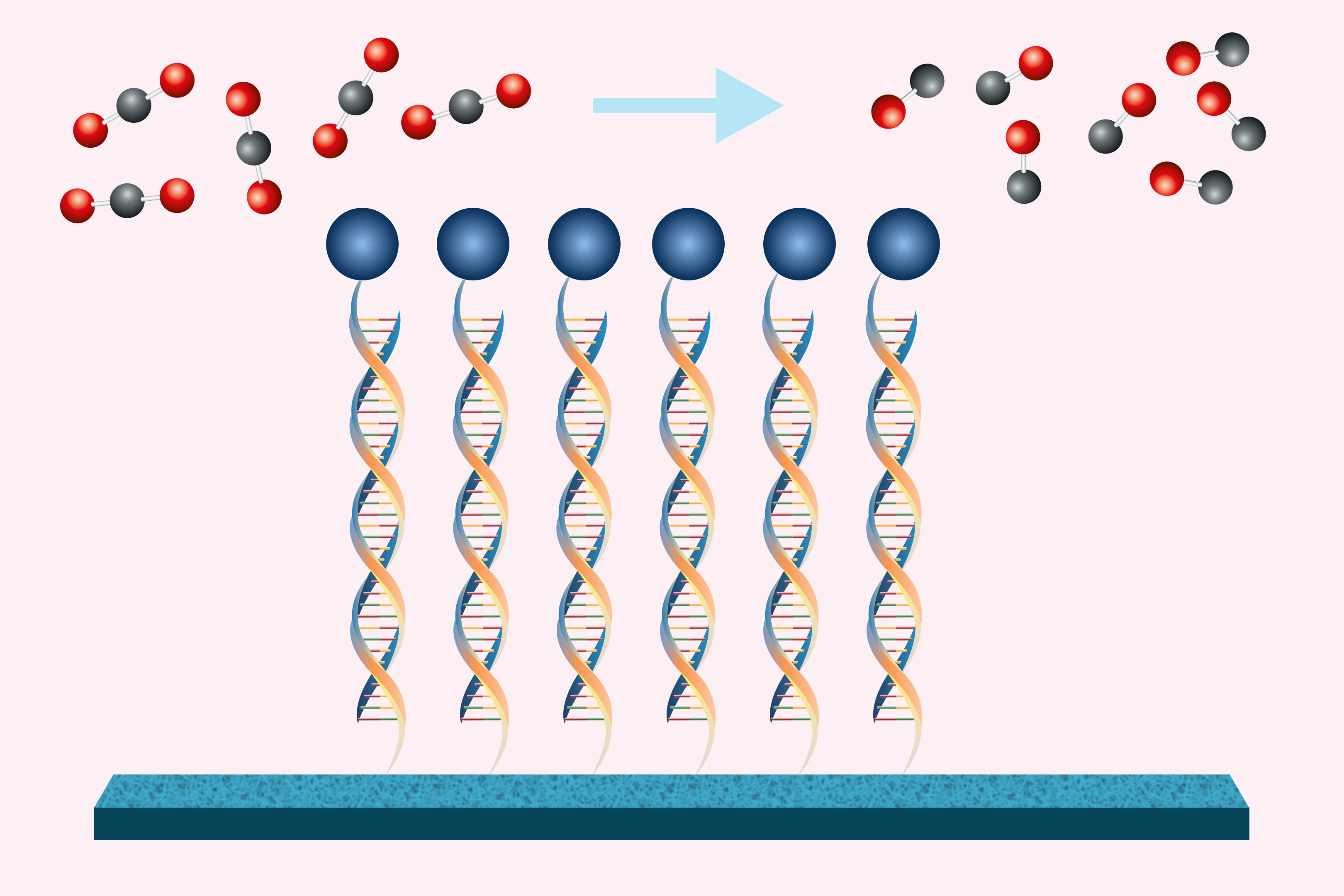

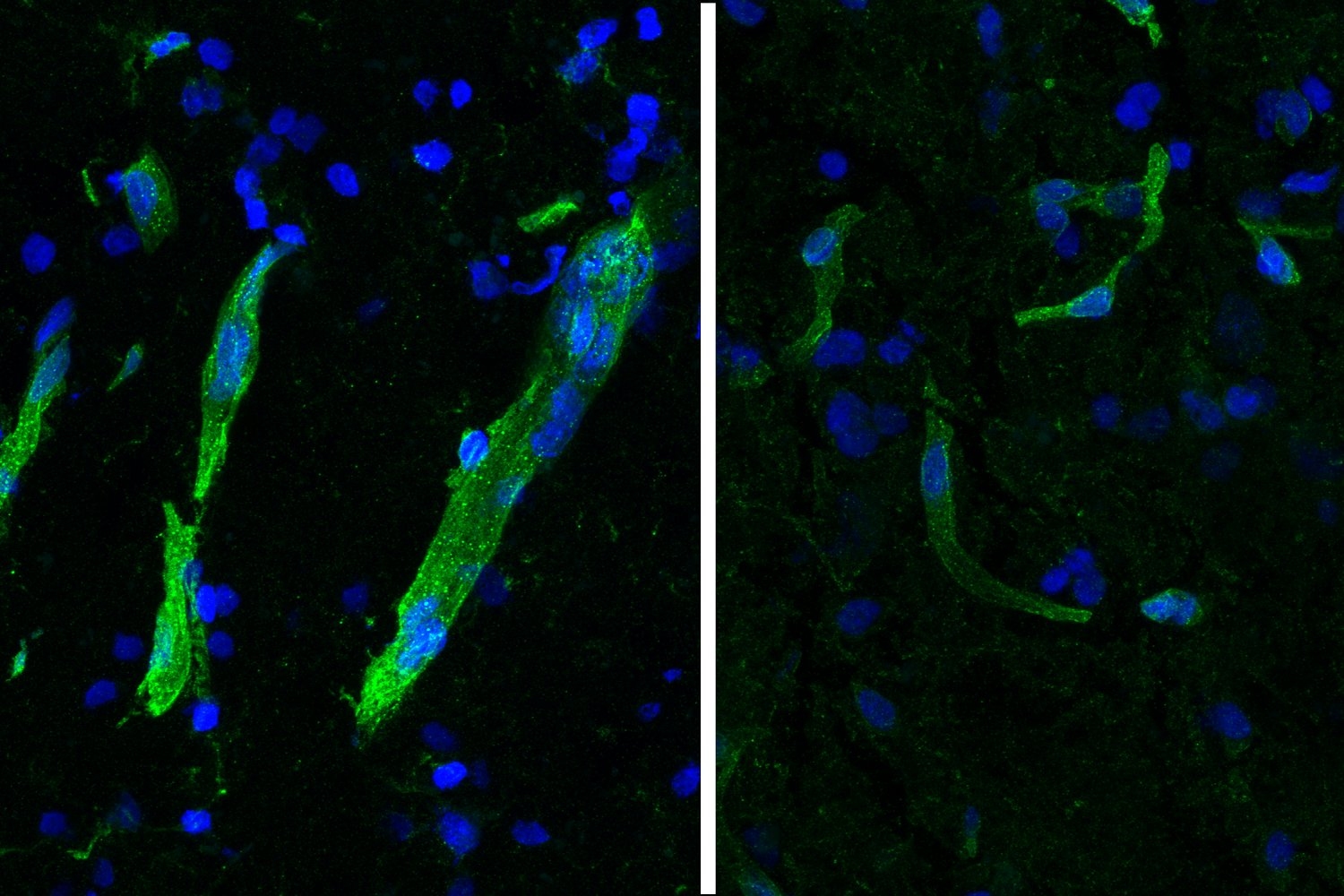

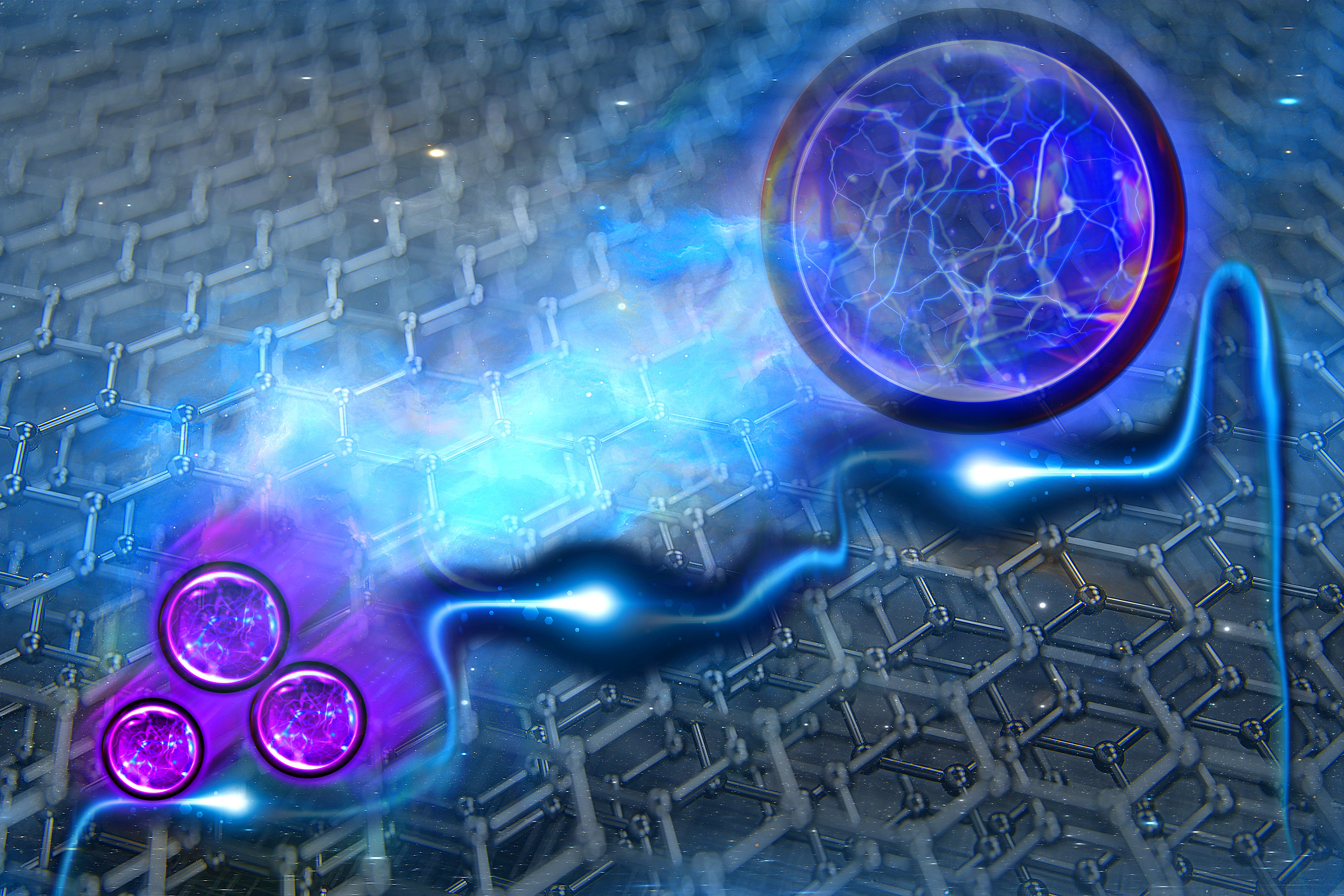

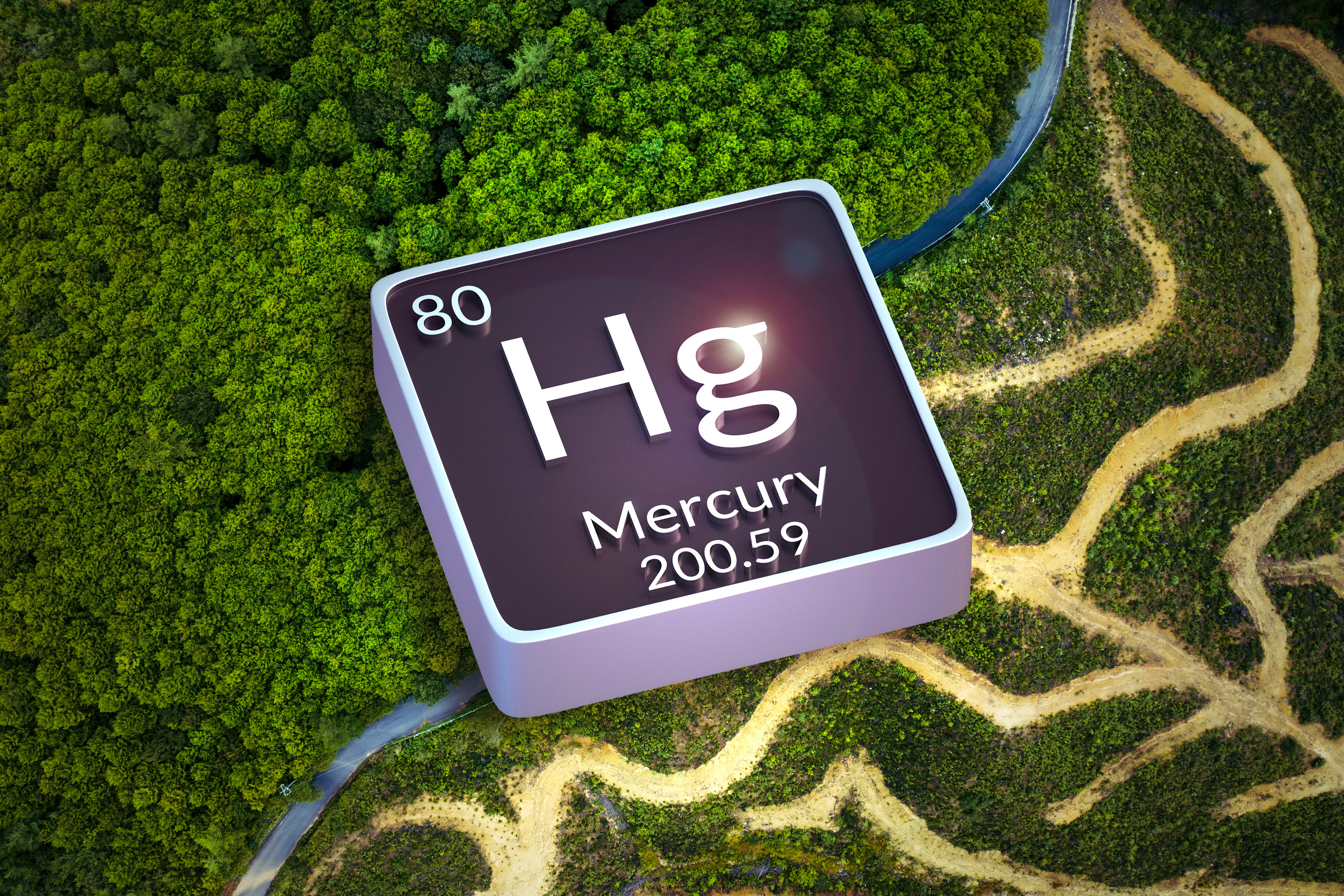

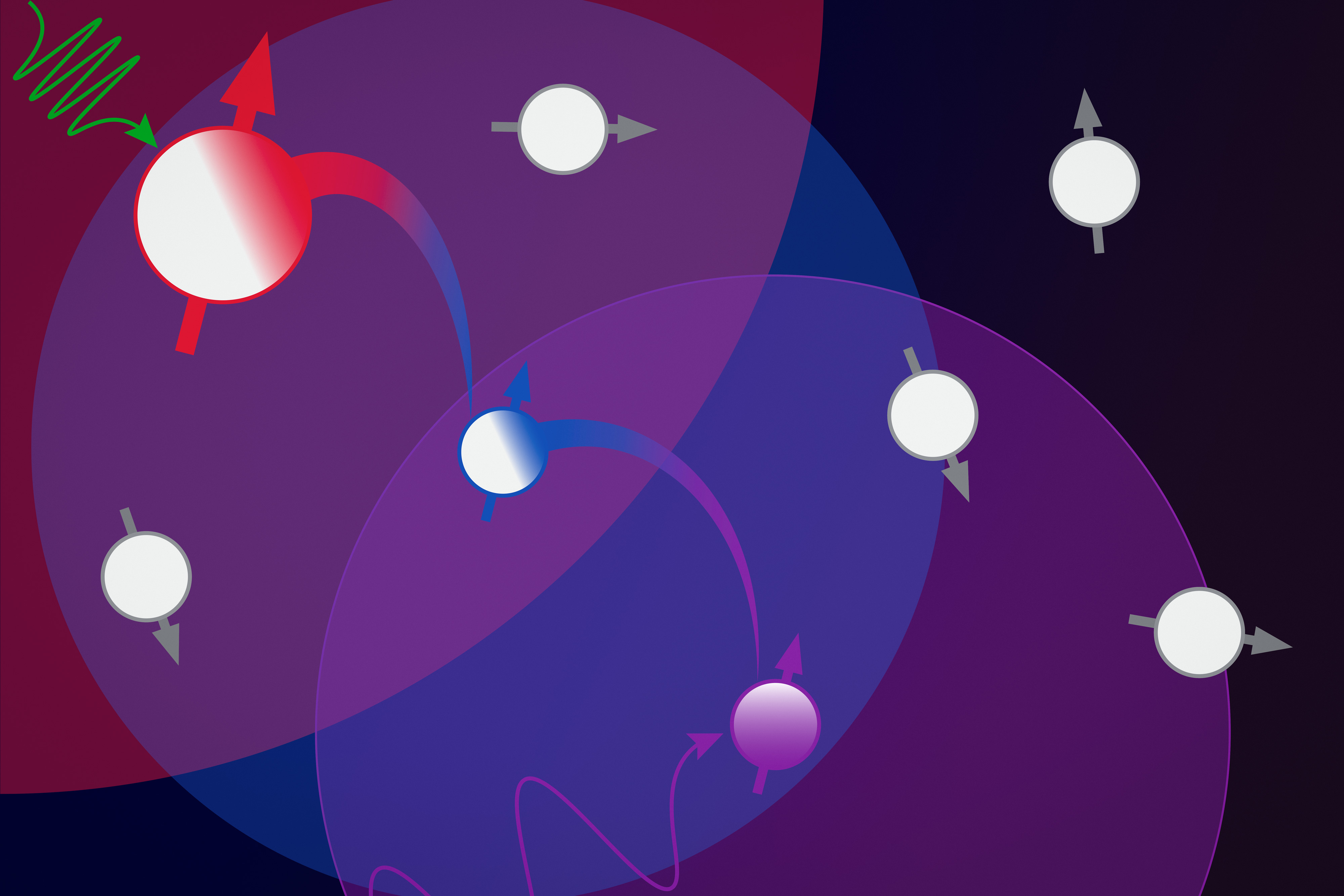

Now, MIT scientists have discovered a new ocean-regulating ability in the small but mighty microbes: cross-feeding of DNA building blocks. In a study appearing today in Science Advances, the team reports that Prochlorococcus shed these extra compounds into their surroundings, where they are then “cross-fed,” or taken up by other ocean organisms as nutrients, as an energy source, or as a means of regulating metabolism. Prochlorococcus’ rejects, then, are other microbes’ resources.

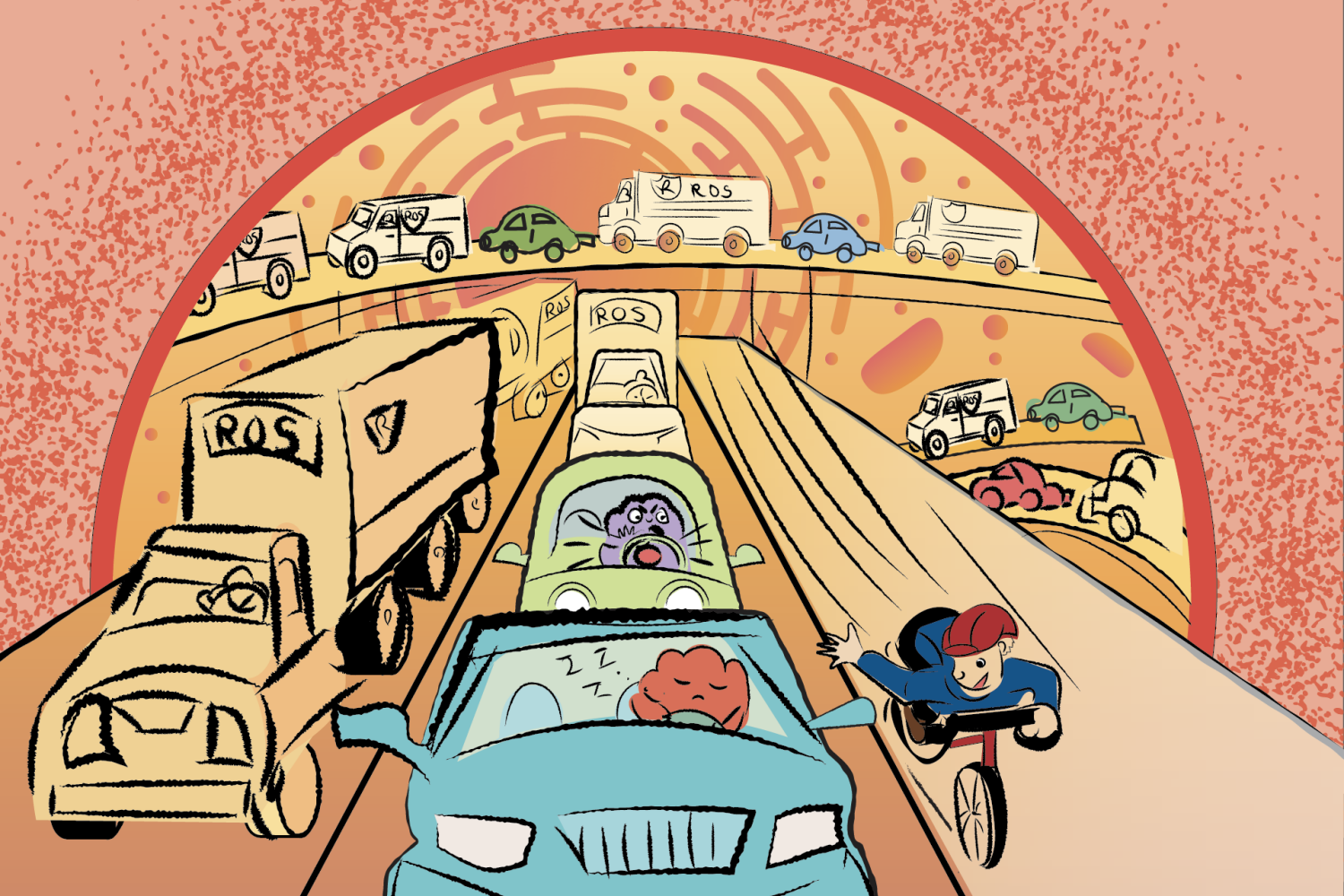

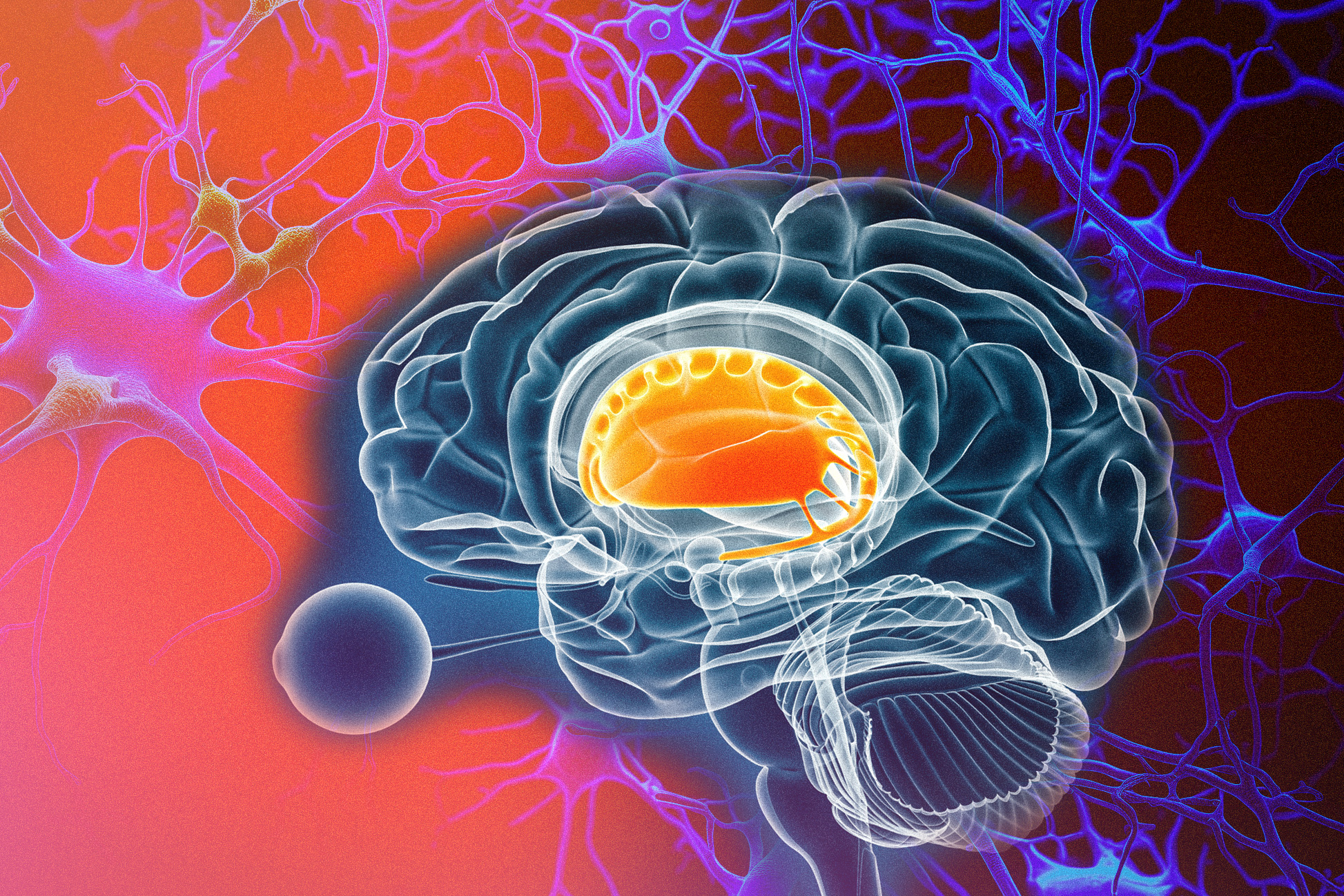

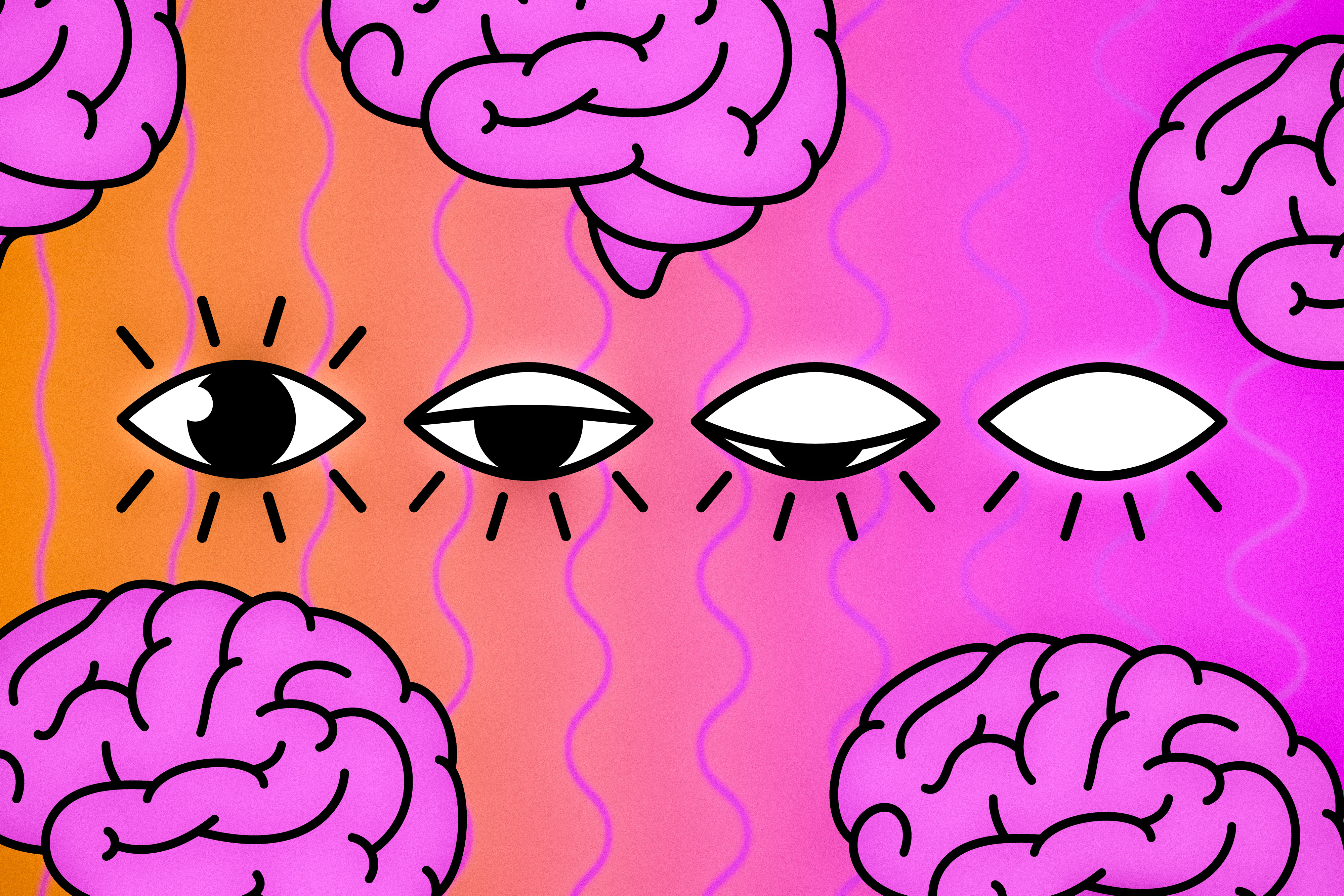

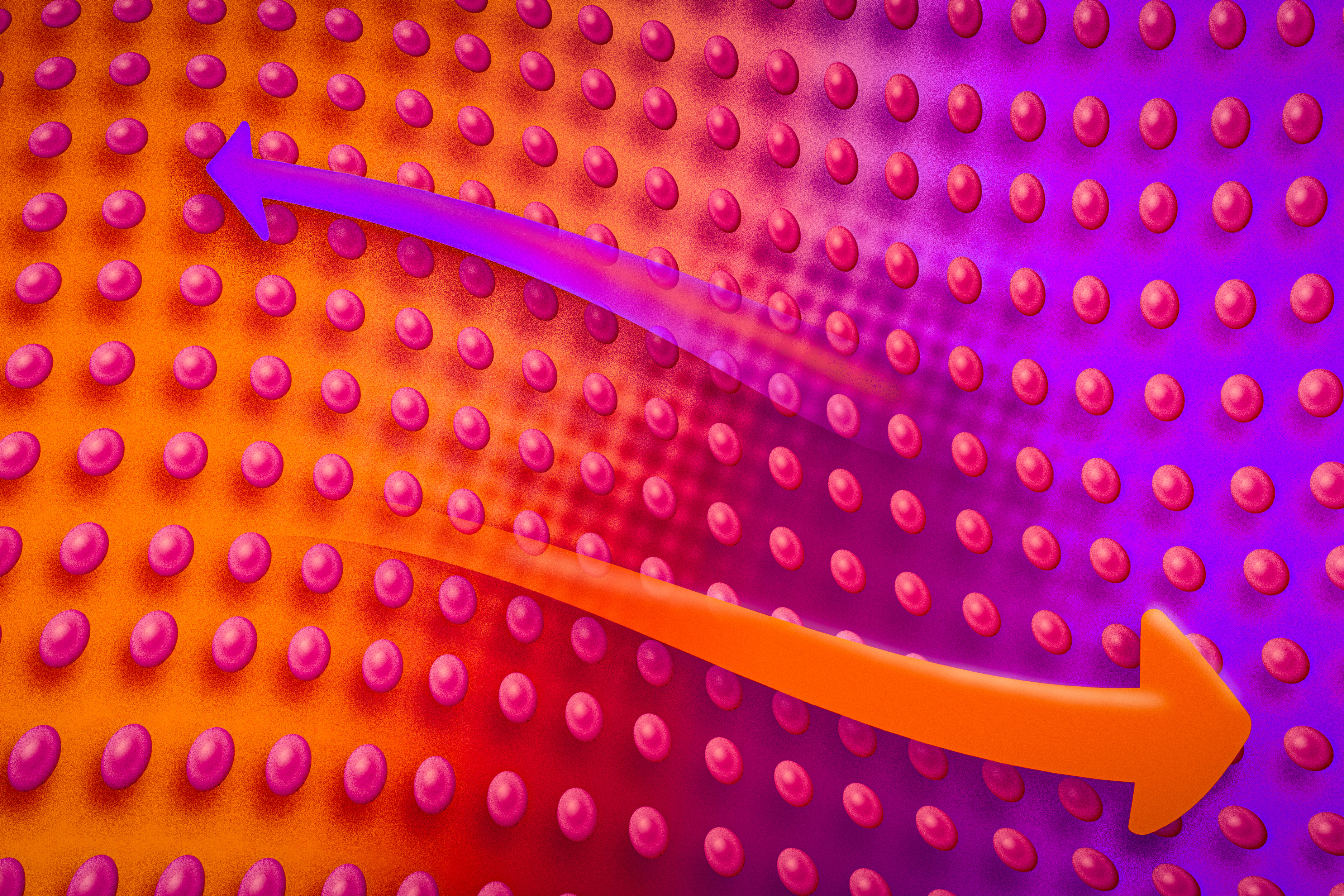

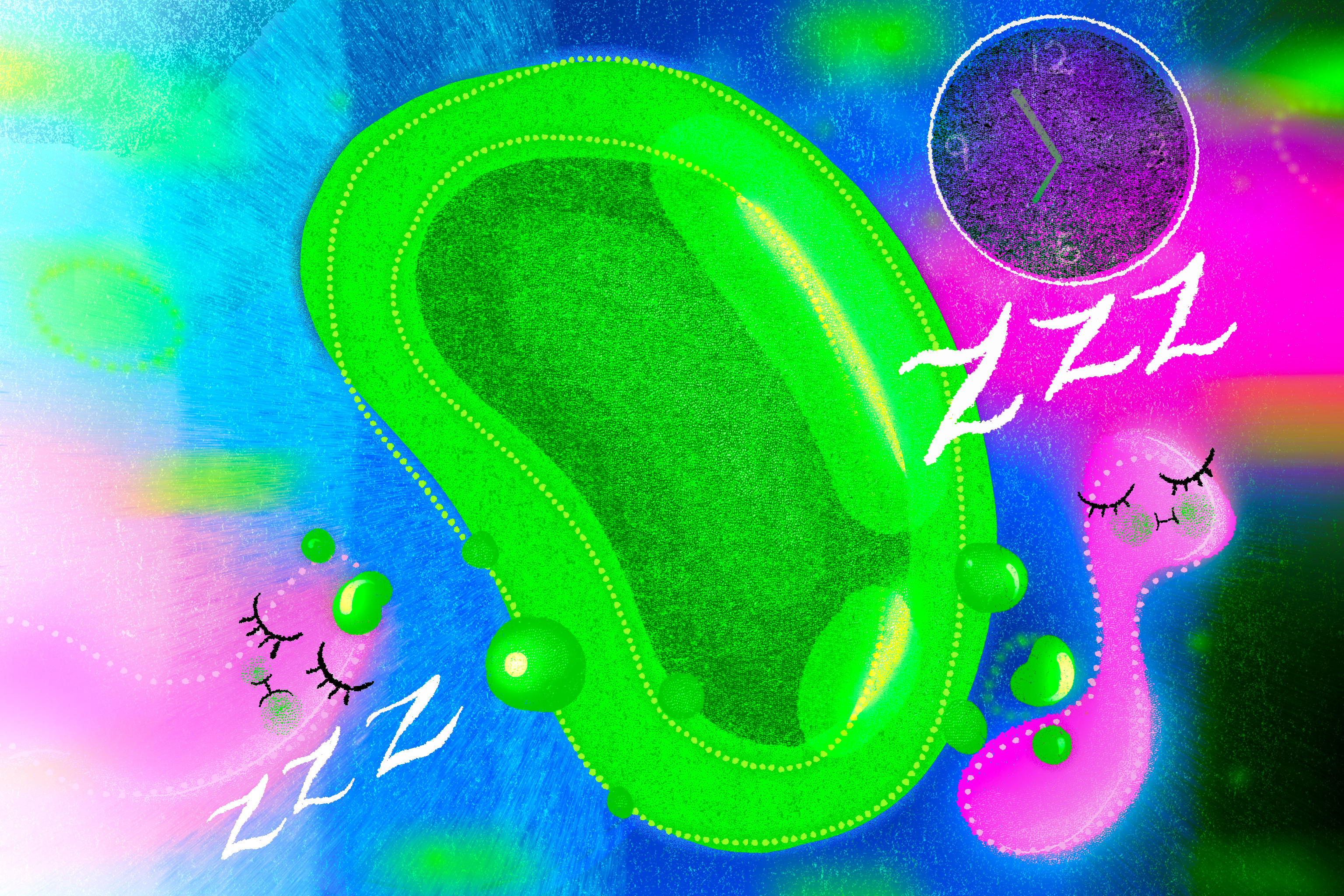

What’s more, this cross-feeding occurs on a regular cycle: Prochlorococcus tend to shed their molecular baggage at night, when enterprising microbes quickly consume the cast-offs. For a microbe called SAR11, the most abundant bacteria in the ocean, the researchers found that the nighttime snack acts as a relaxant of sorts, forcing the bacteria to slow down their metabolism and effectively recharge for the next day.

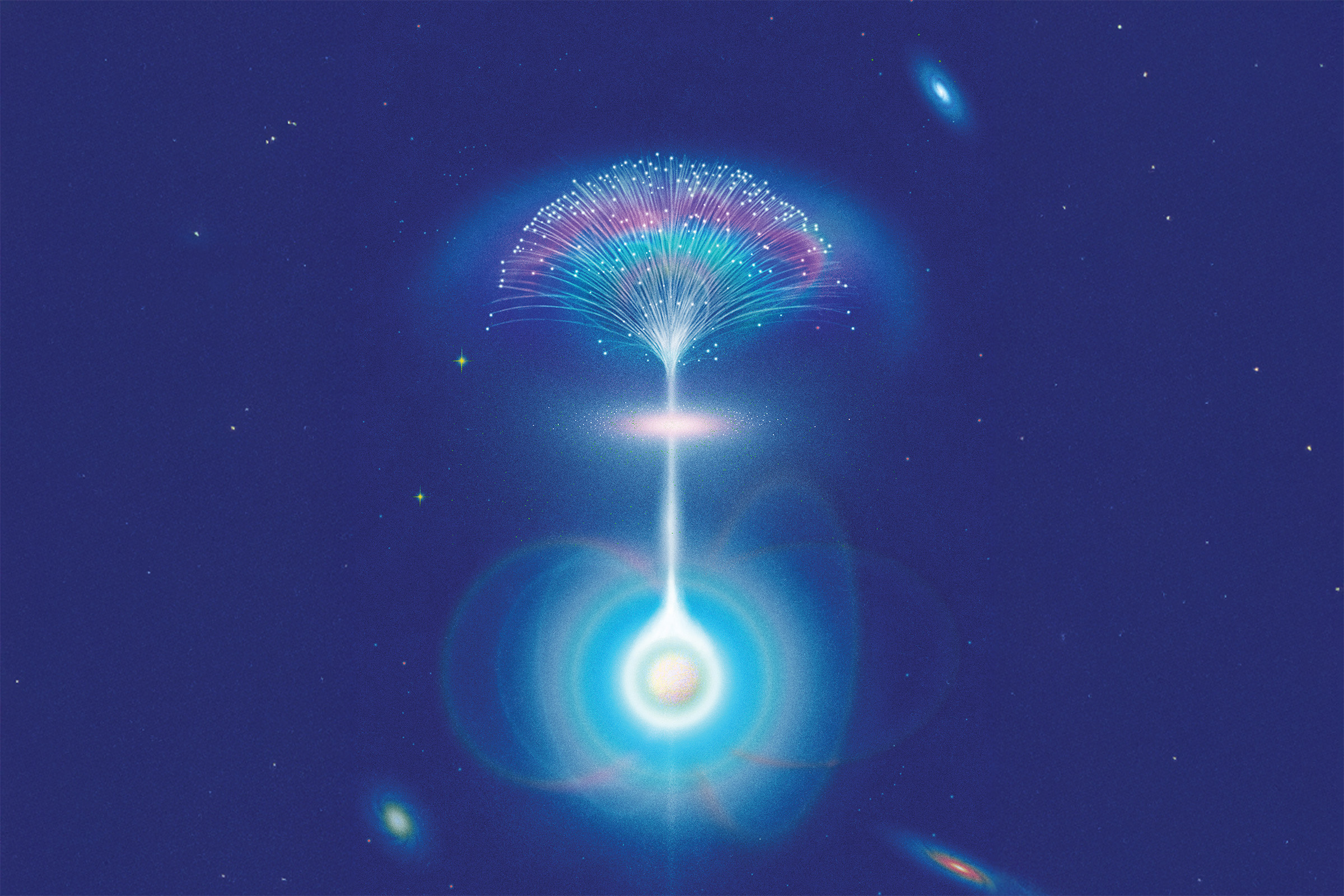

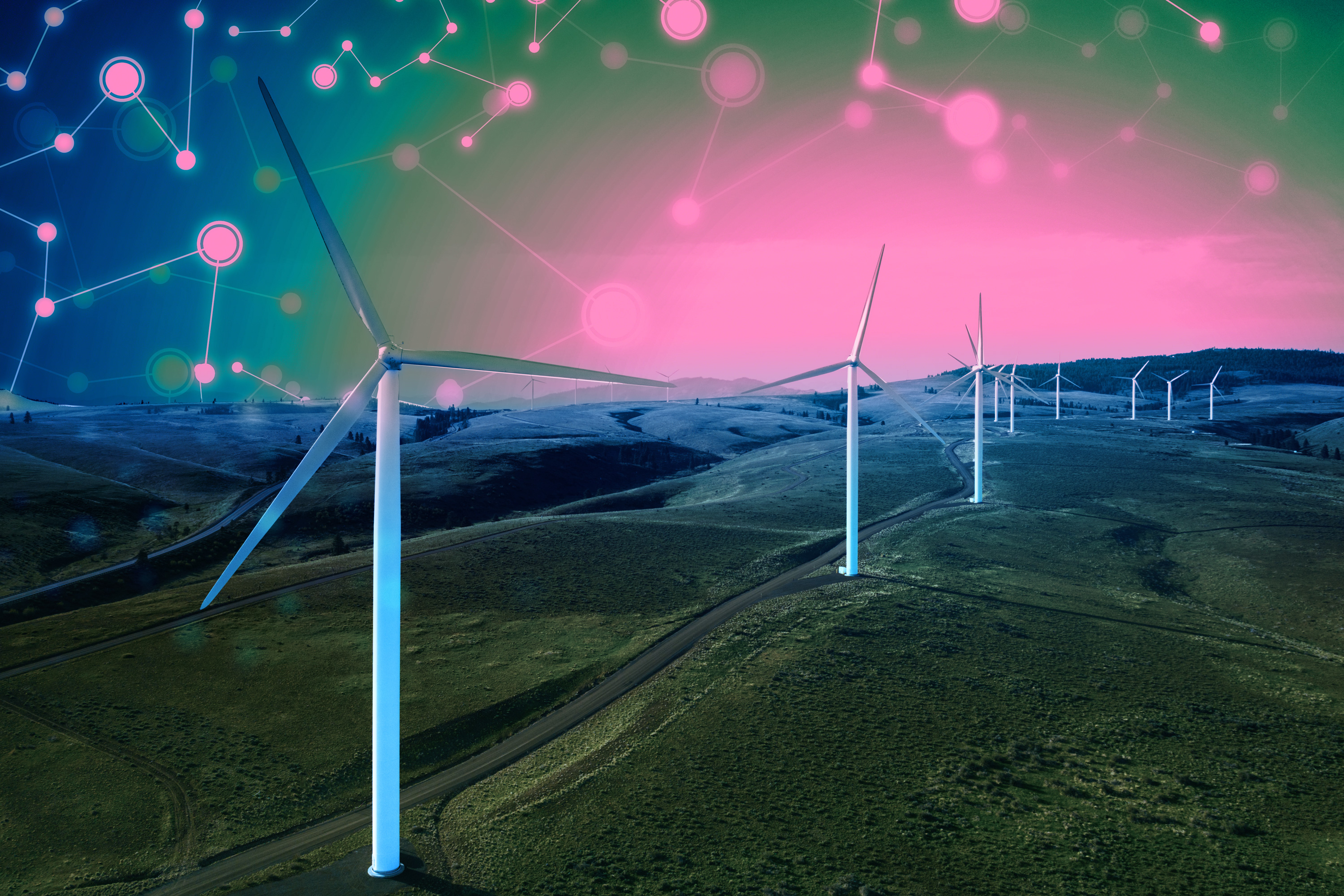

Through this cross-feeding interaction, Prochlorococcus could be helping many microbial communities to grow sustainably, simply by giving away what they don’t need. And they’re doing so in a way that could set the daily rhythms of microbes around the world.

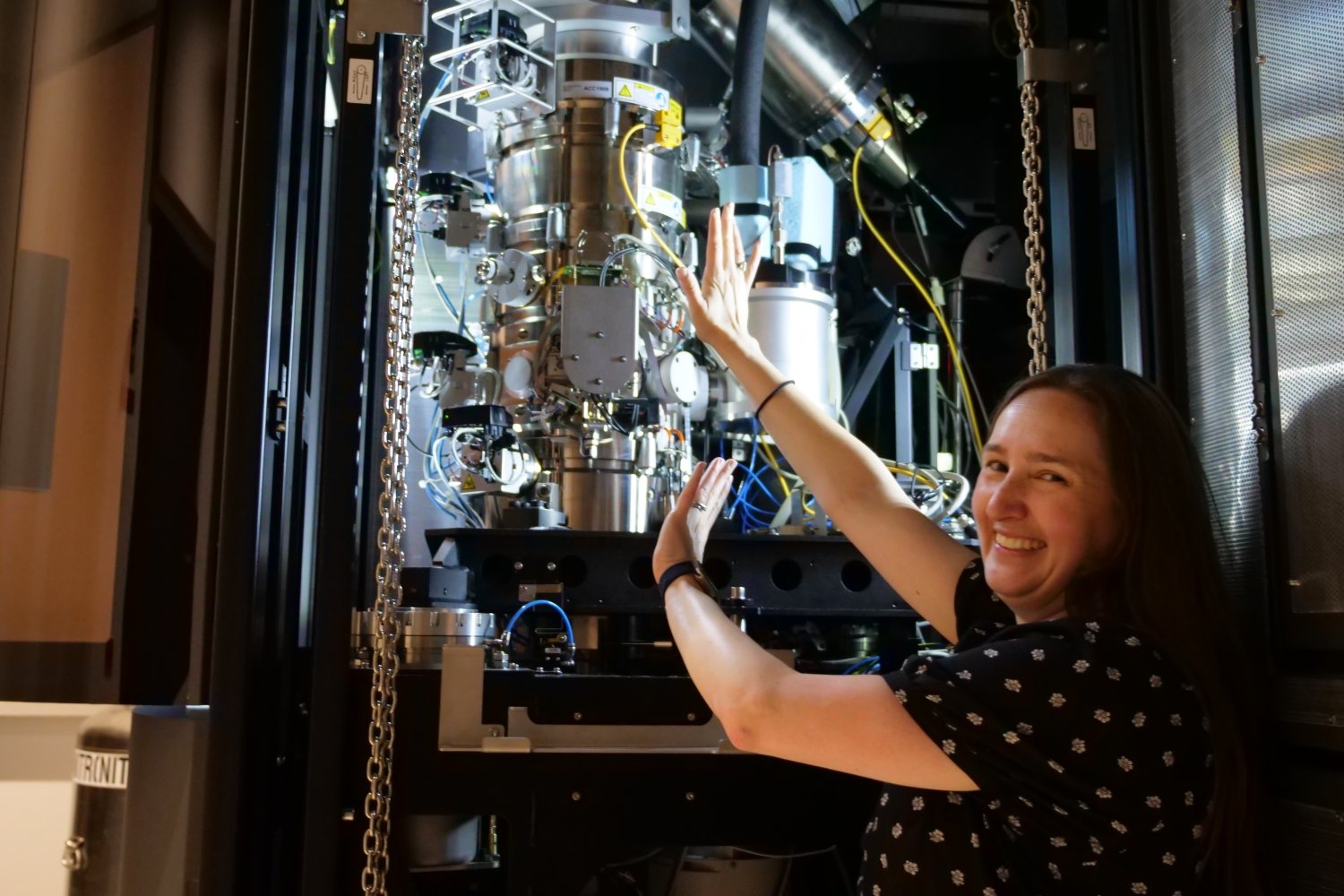

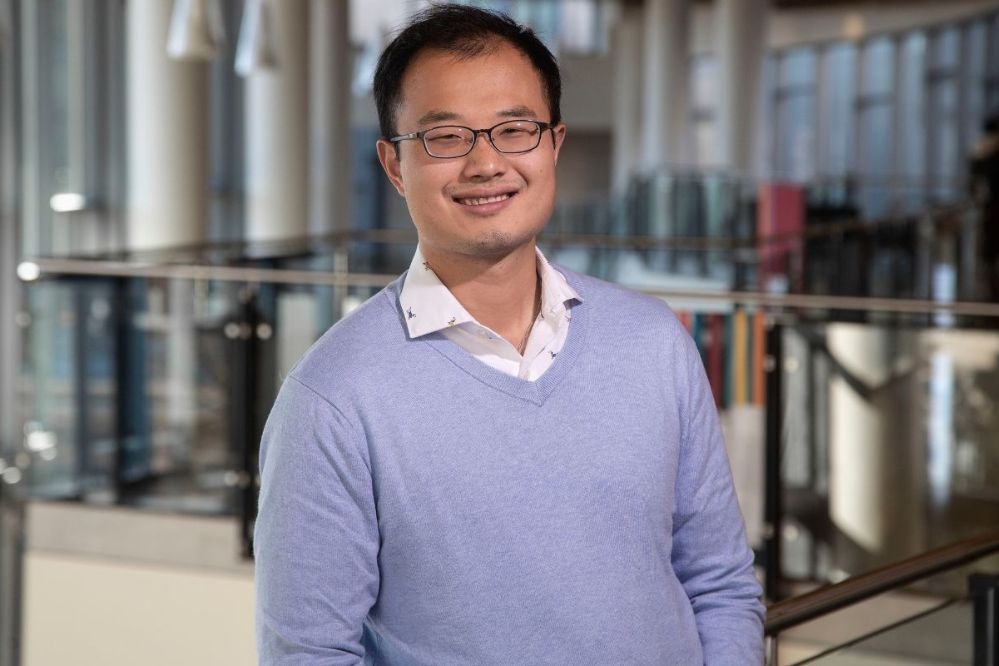

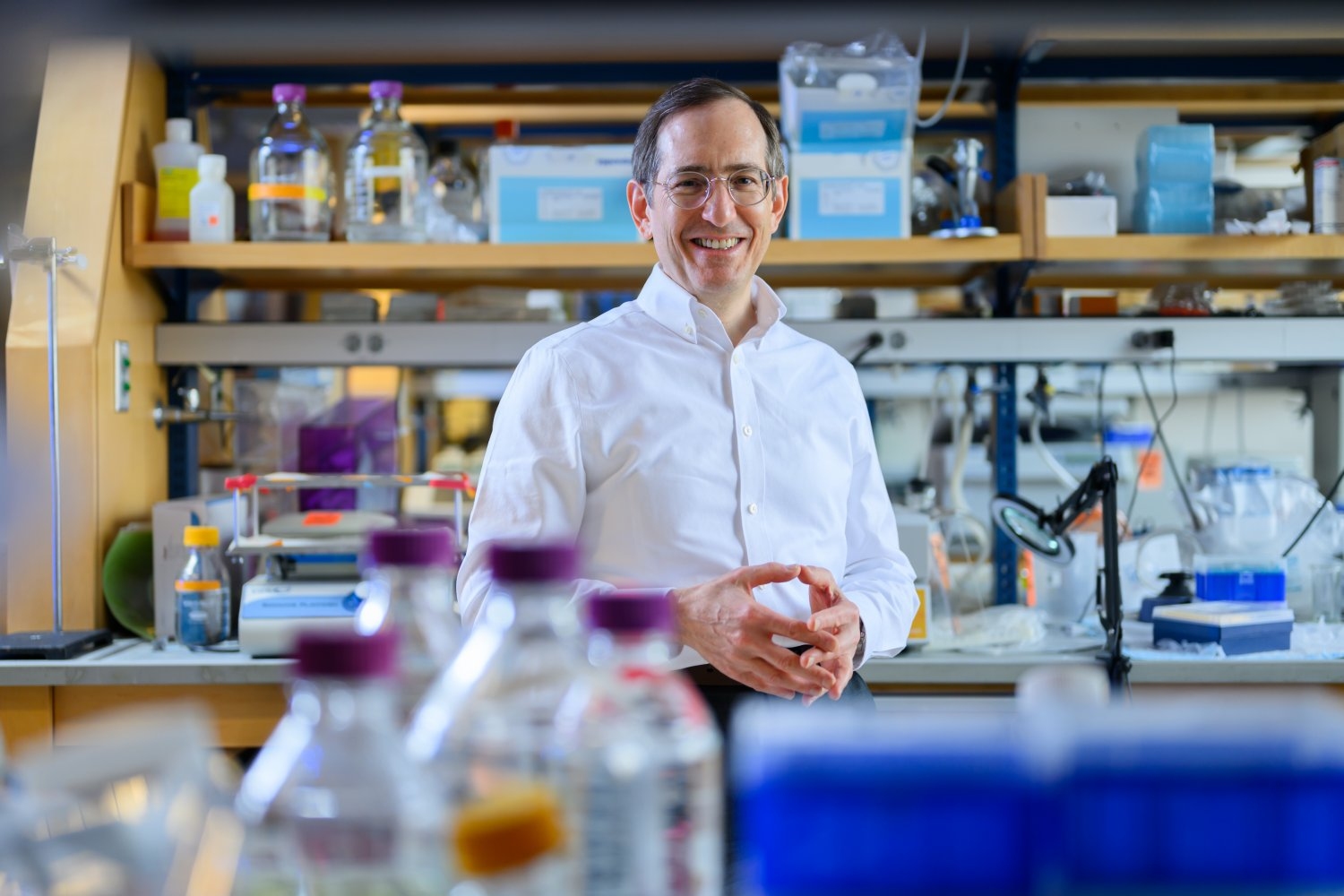

“The relationship between the two most abundant groups of microbes in ocean ecosystems has intrigued oceanographers for years,” says co-author and MIT Institute Professor Sallie “Penny” Chisholm, who played a role in the discovery of Prochlorococcus in 1986. “Now we have a glimpse of the finely tuned choreography that contributes to their growth and stability across vast regions of the oceans.”

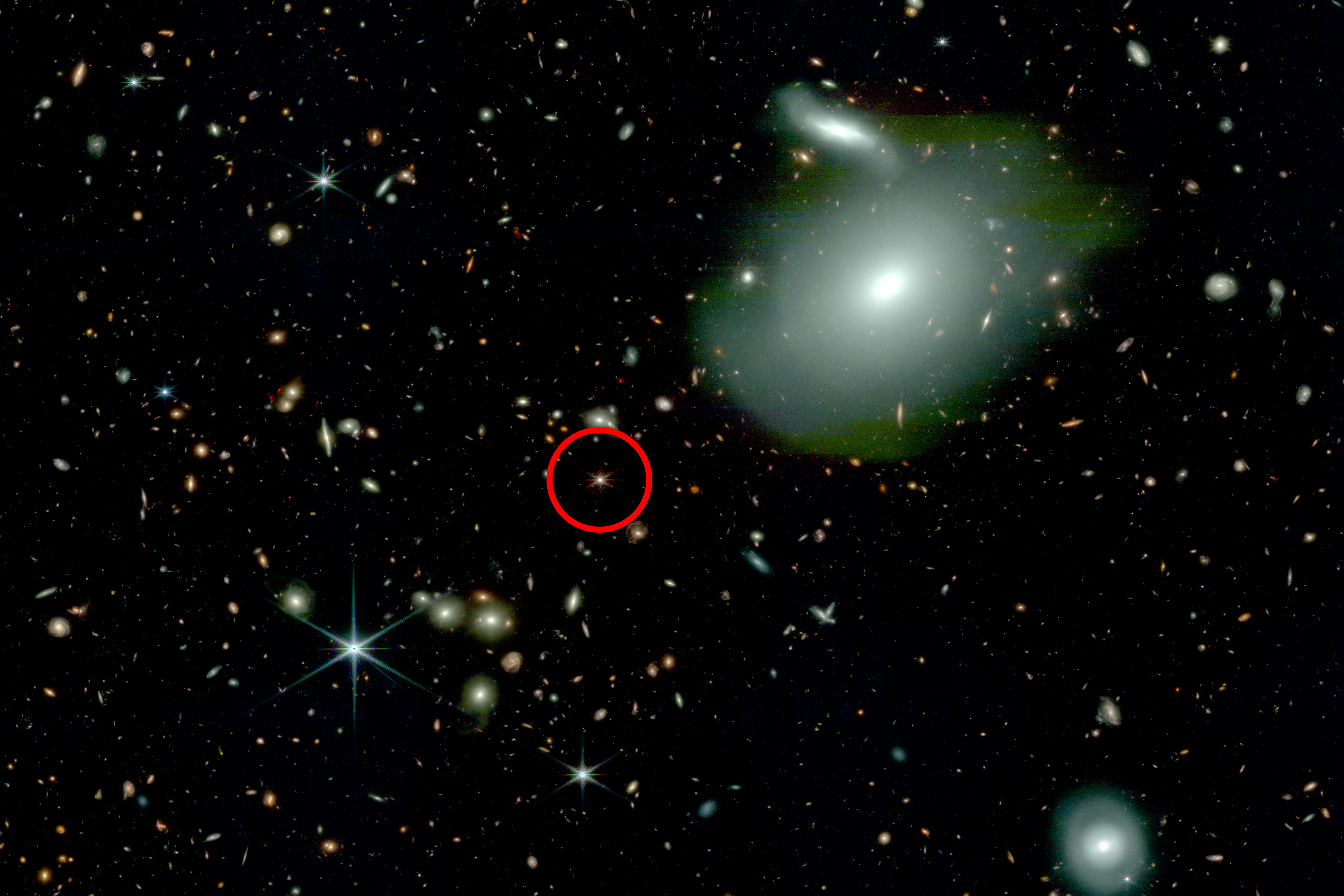

Given that Prochlorococcus and SAR11 suffuse the surface oceans, the team suspects that the exchange of molecules from one to the other could amount to one of the major cross-feeding relationships in the ocean, making it an important regulator of the ocean carbon cycle.

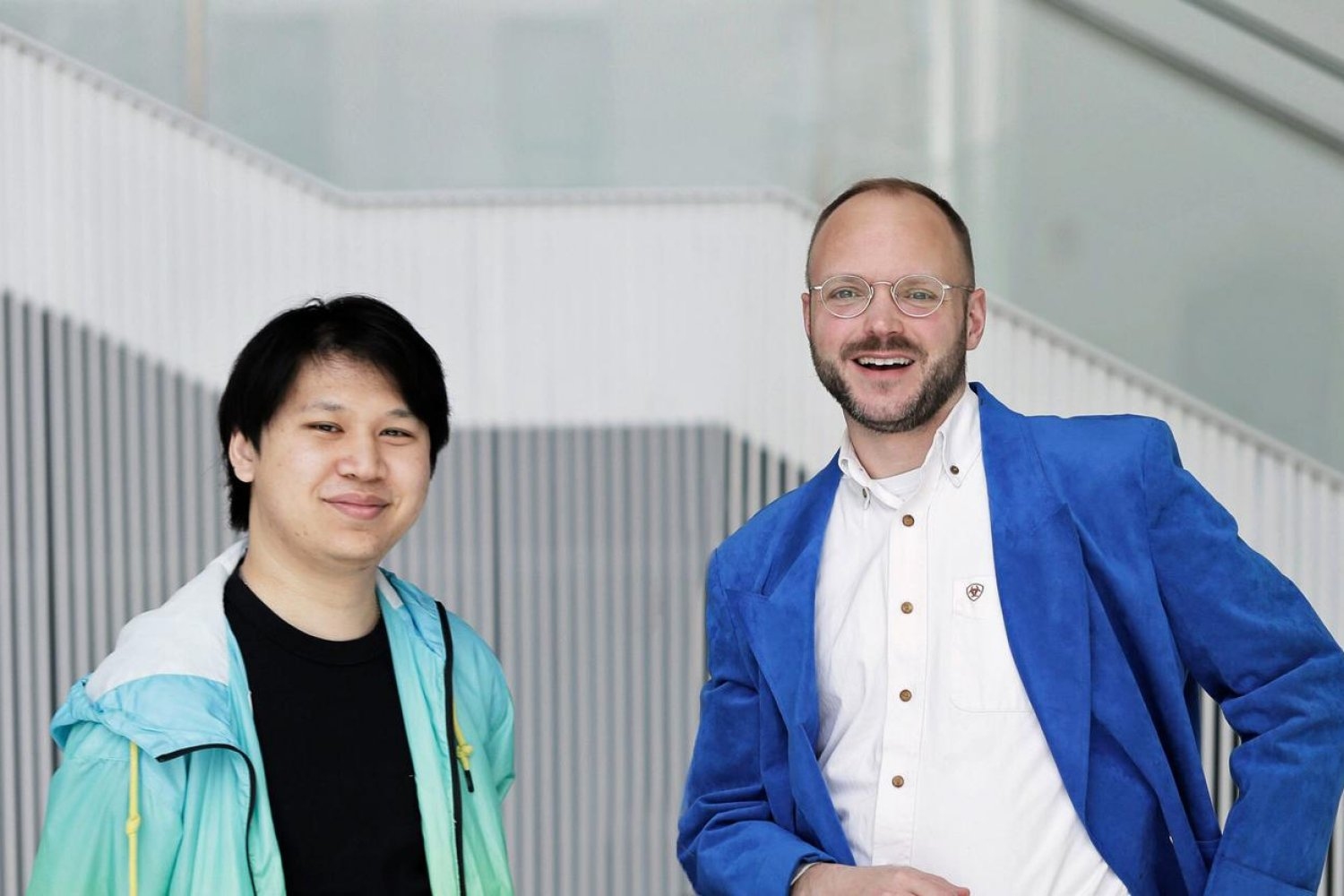

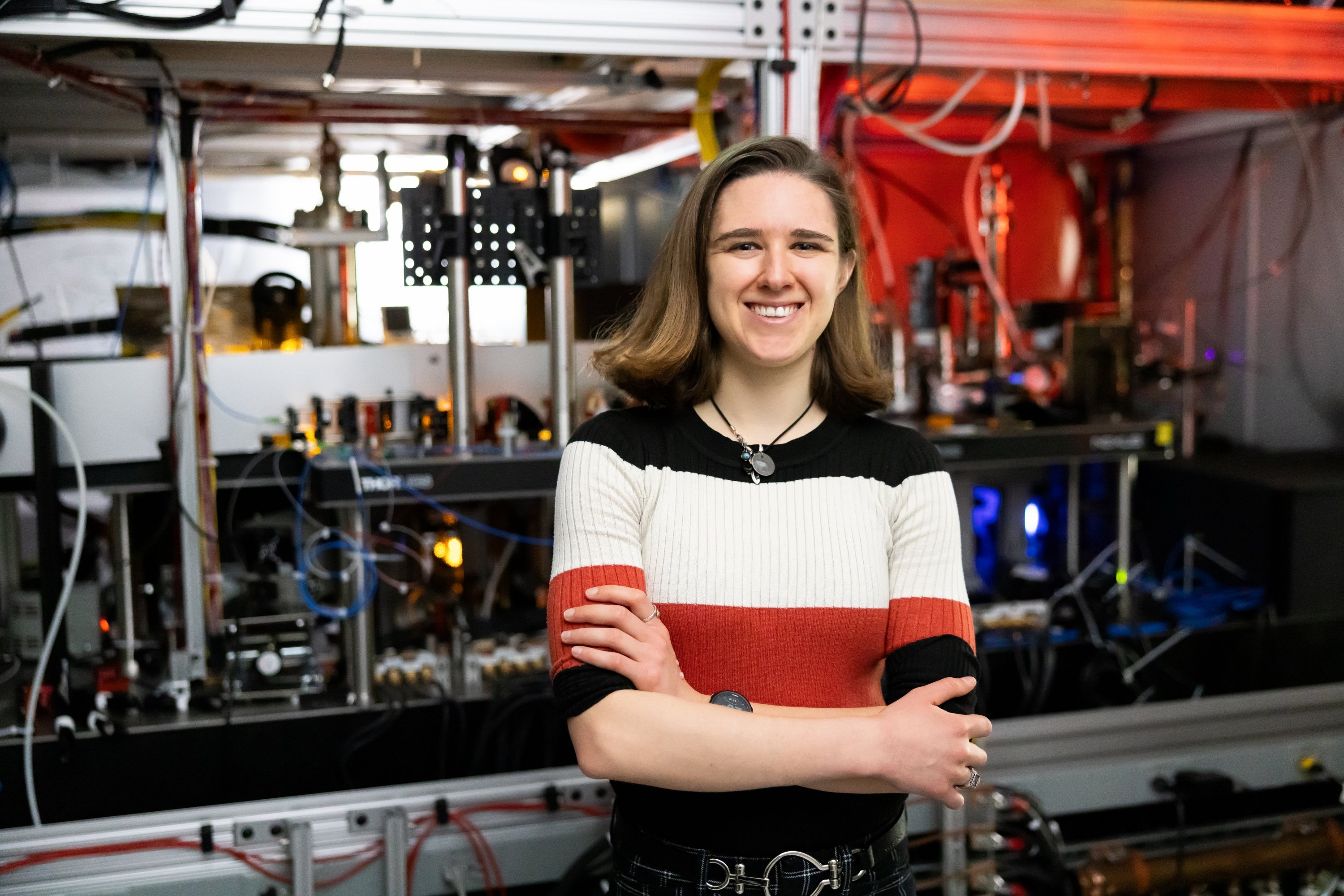

“By looking at the details and diversity of cross-feeding processes, we can start to unearth important forces that are shaping the carbon cycle,” says the study’s lead author, Rogier Braakman, a research scientist in MIT’s Department of Earth, Atmospheric and Planetary Sciences (EAPS).

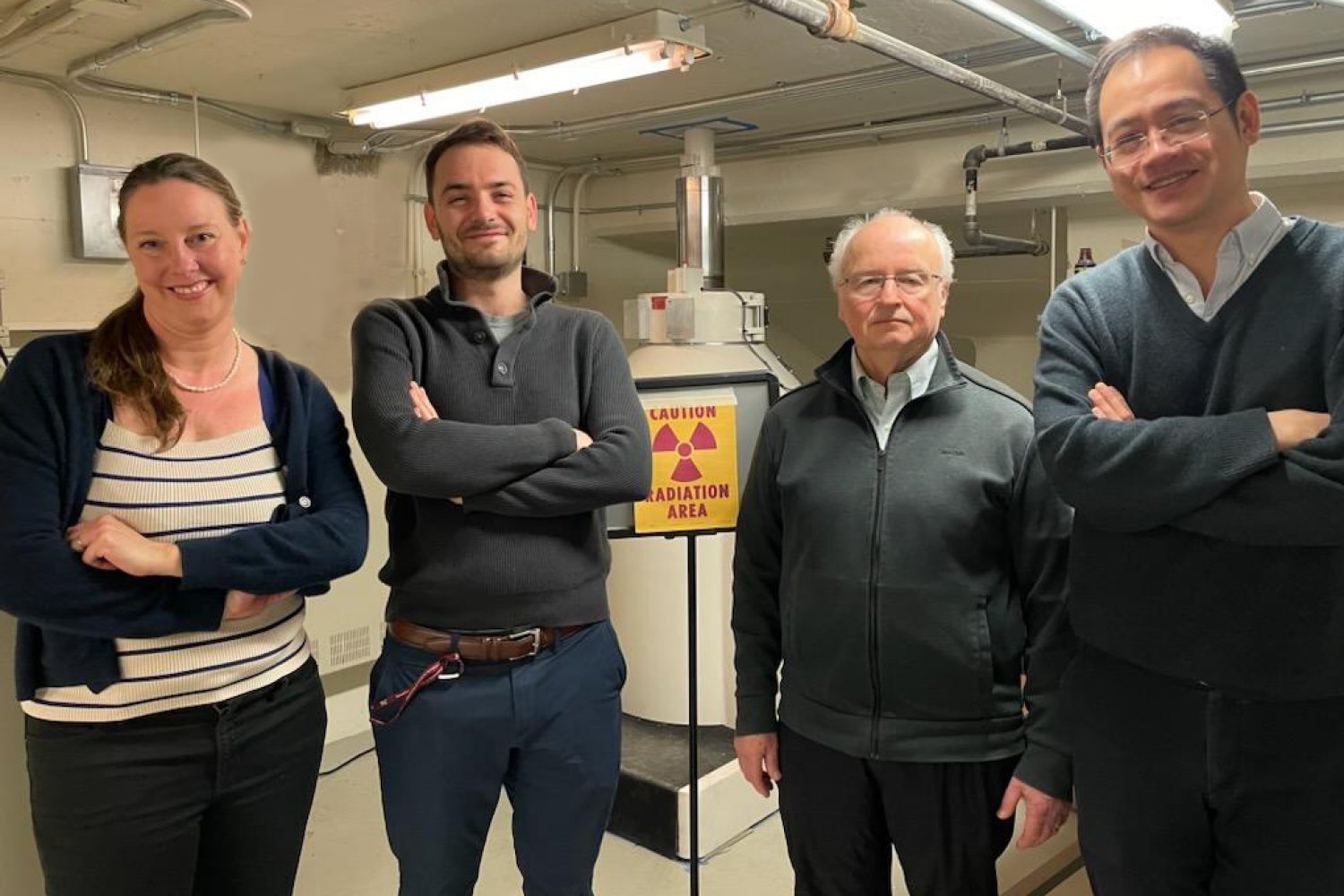

Other MIT co-authors include Brandon Satinsky, Tyler O’Keefe, Shane Hogle, Jamie Becker, Robert Li, Keven Dooley, and Aldo Arellano, along with Krista Longnecker, Melissa Soule, and Elizabeth Kujawinski of Woods Hole Oceanographic Institution (WHOI).

Spotting castaways

Cross-feeding occurs throughout the microbial world, though the process has mainly been studied in close-knit communities. In the human gut, for instance, microbes are in close proximity and can easily exchange and benefit from shared resources.

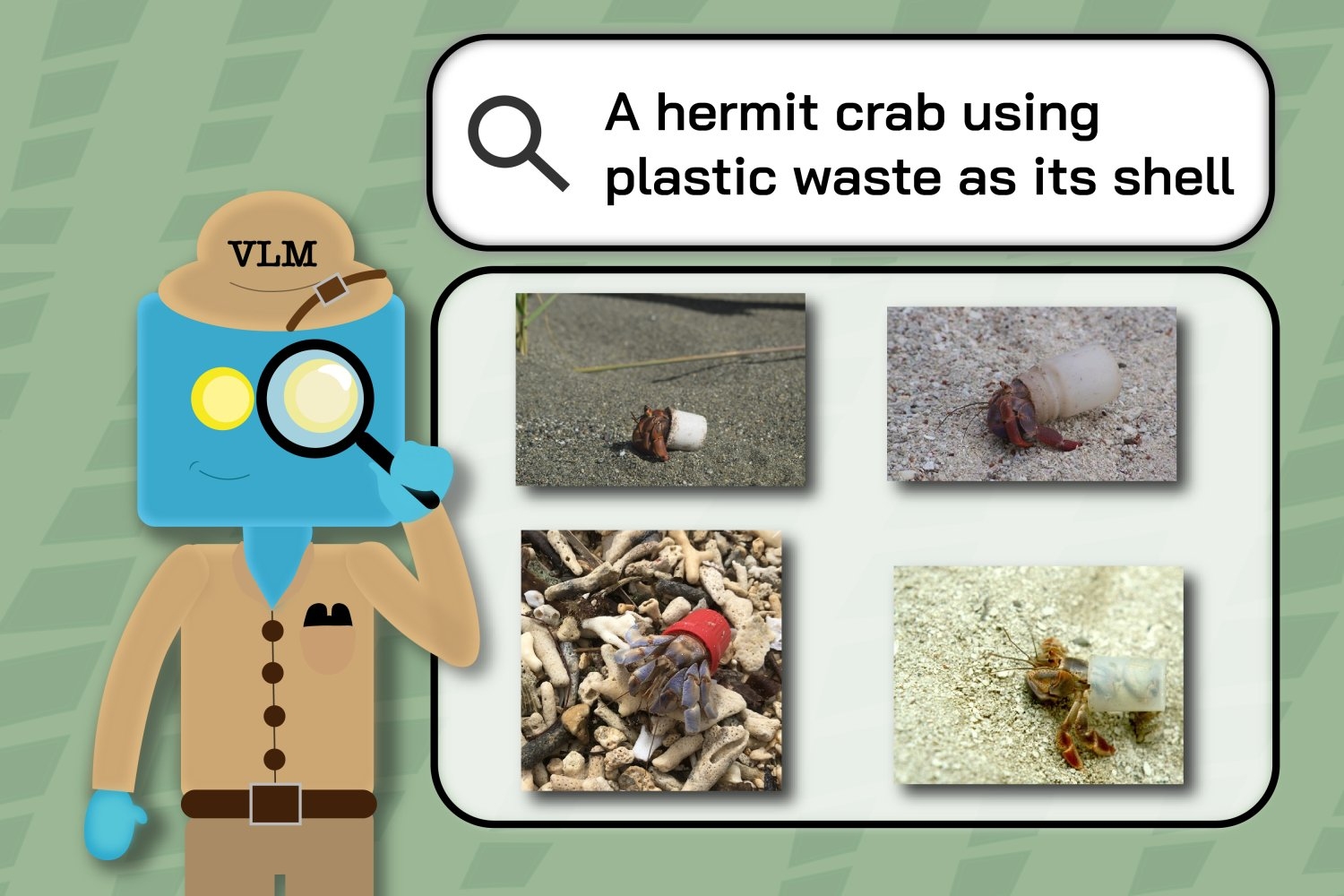

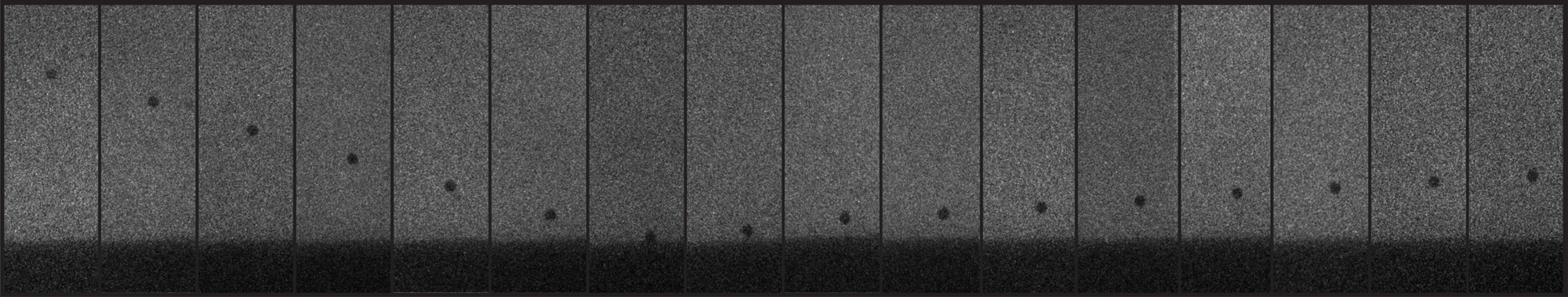

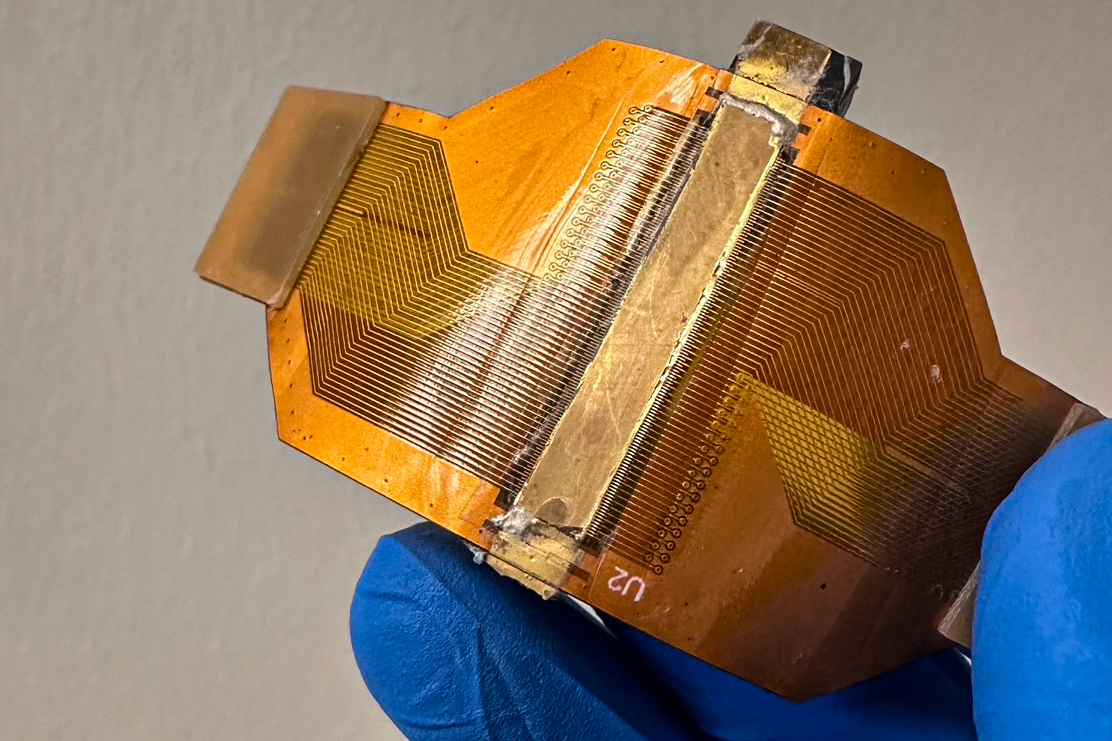

By comparison, Prochlorococcus are free-floating microbes that are regularly tossed and mixed through the ocean’s surface layers. While scientists assume that the plankton are involved in some amount of cross-feeding, exactly how this occurs, and who would benefit, have historically been challenging to probe; any stuff that Prochlorococcus cast away would have vanishingly low concentrations, and be exceedingly difficult to measure.

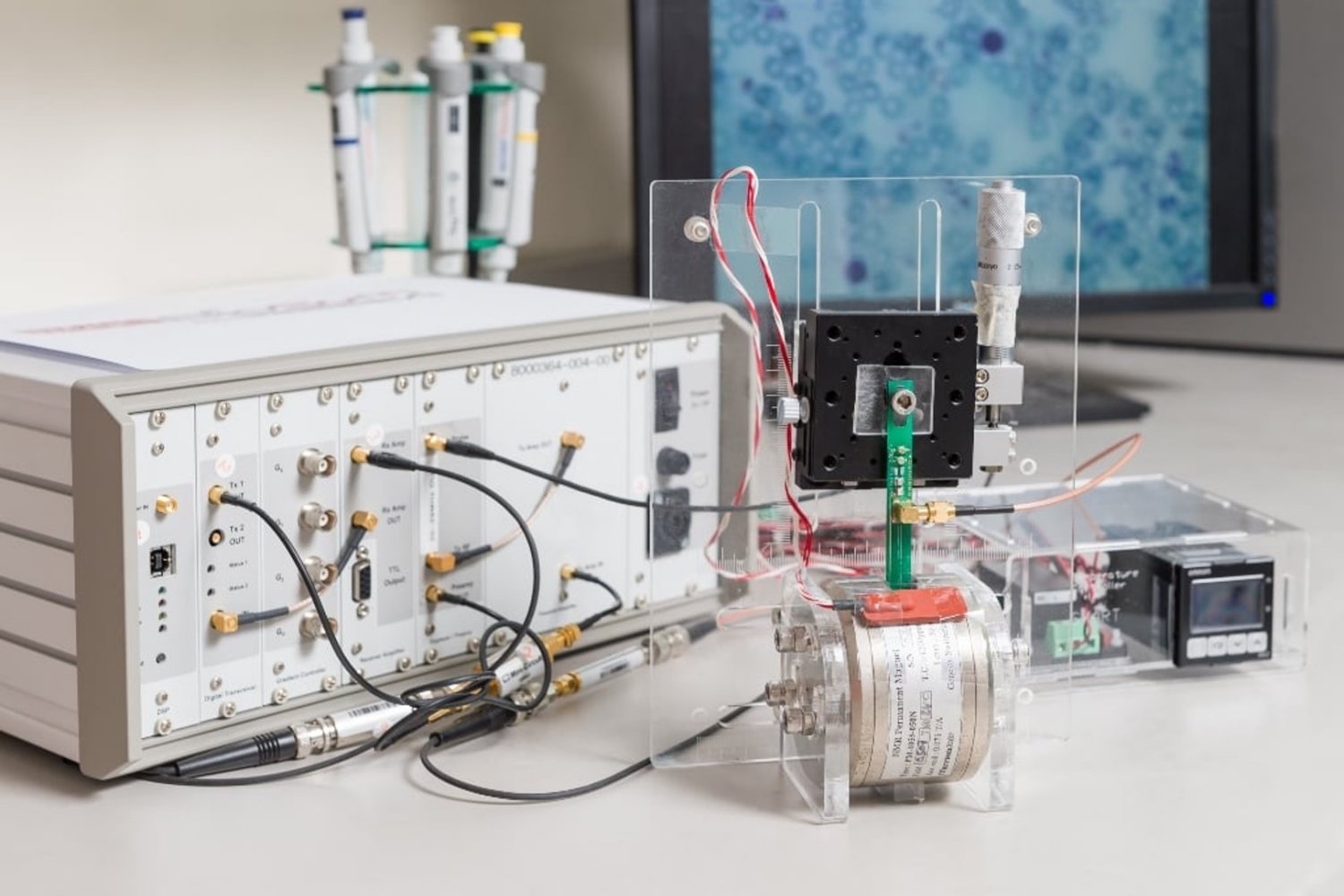

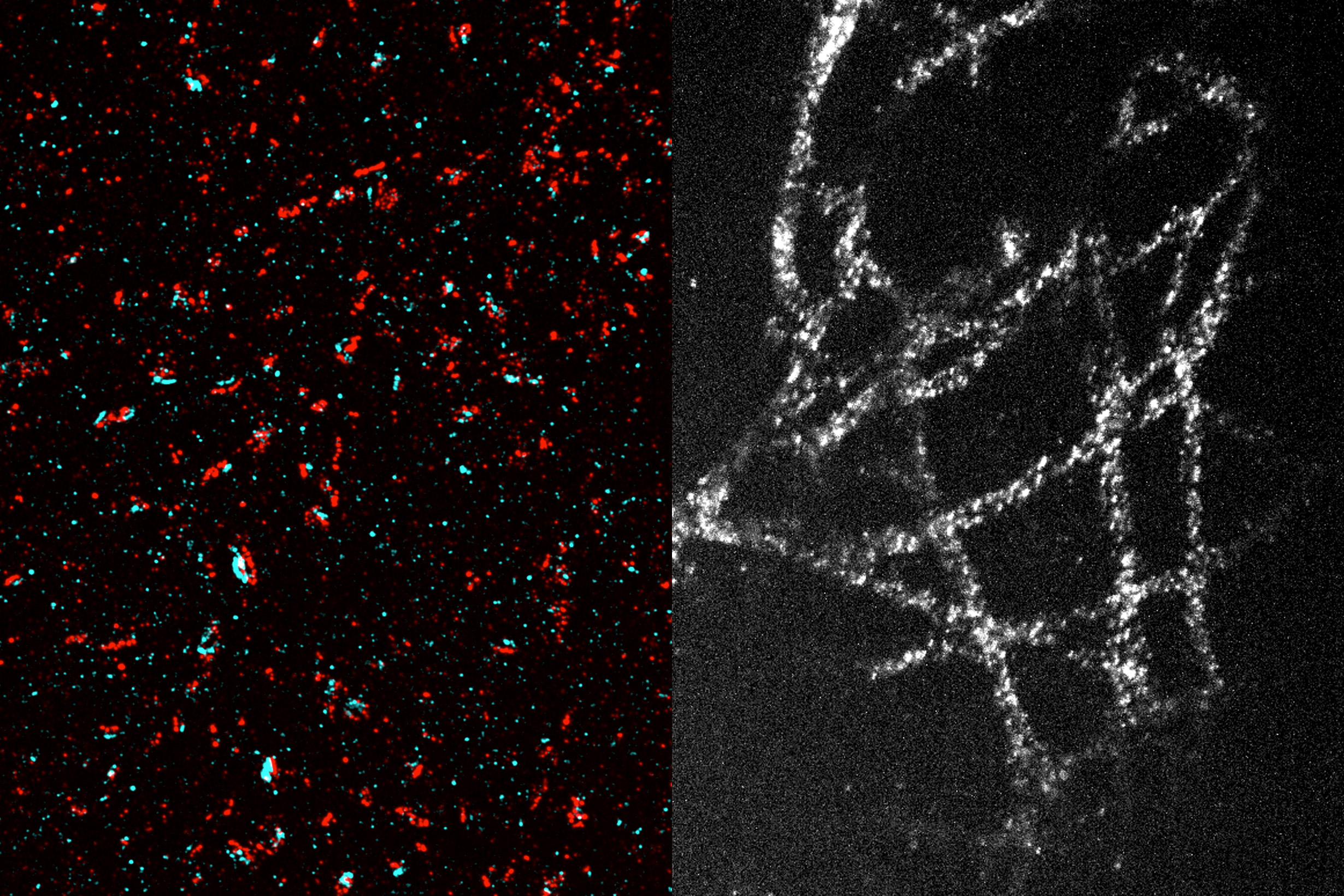

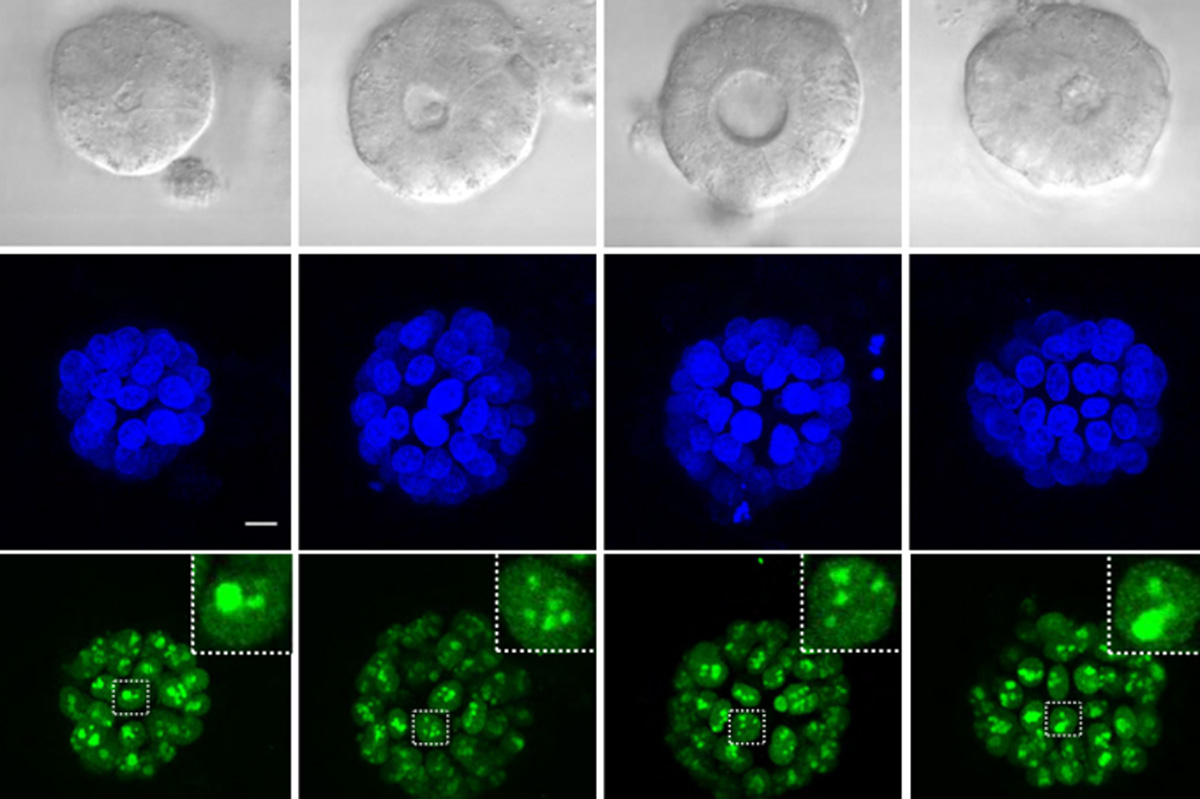

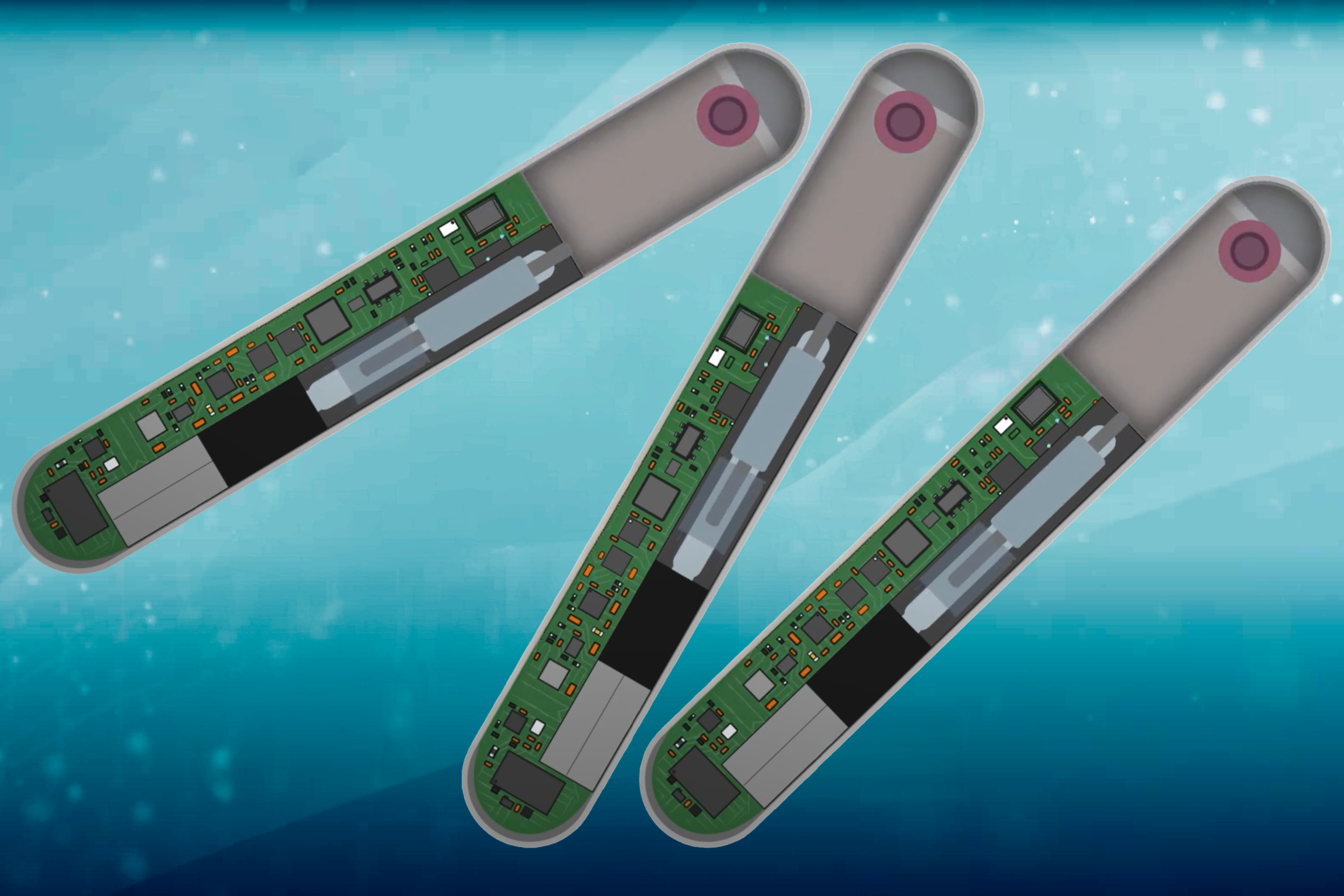

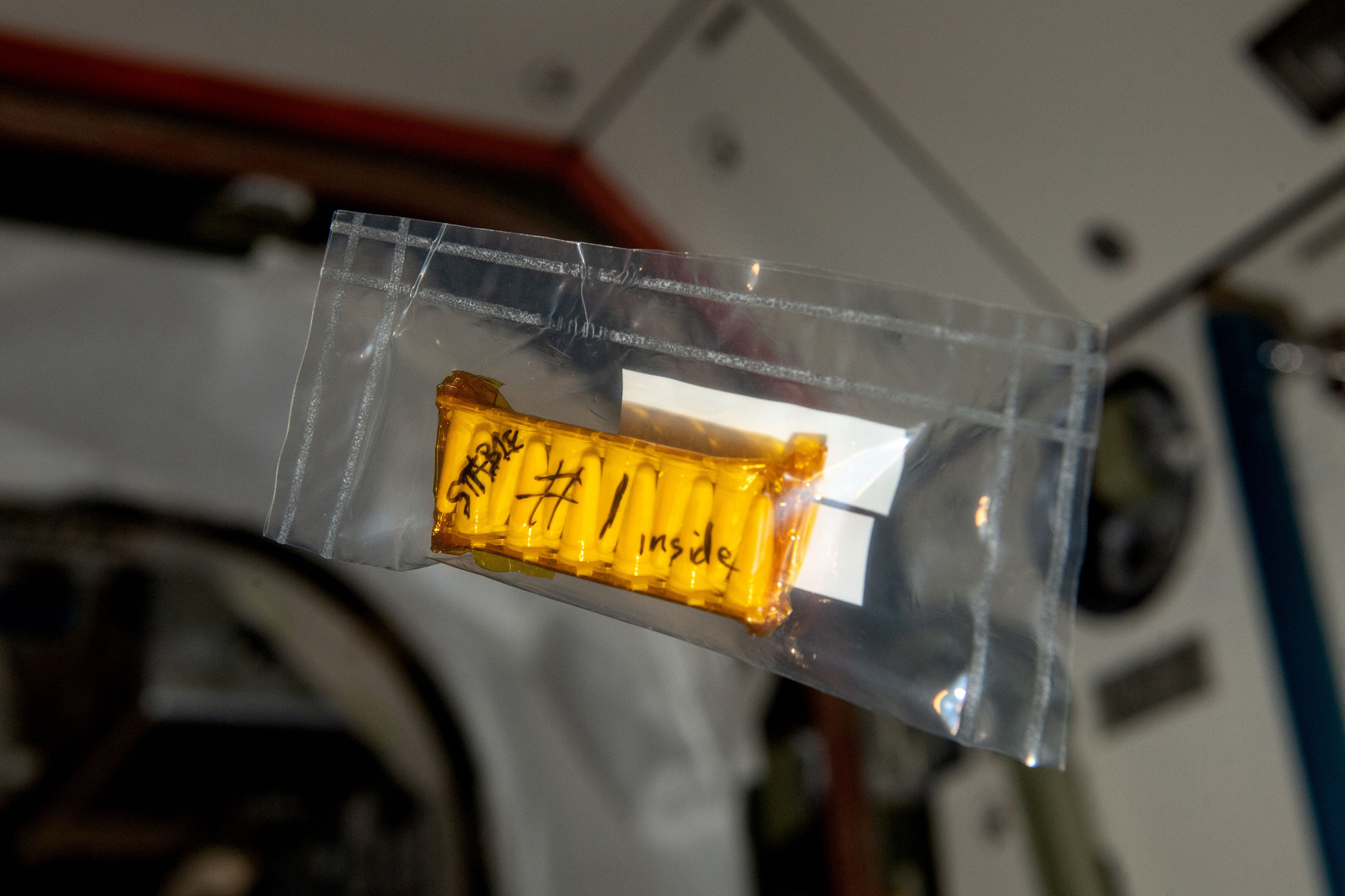

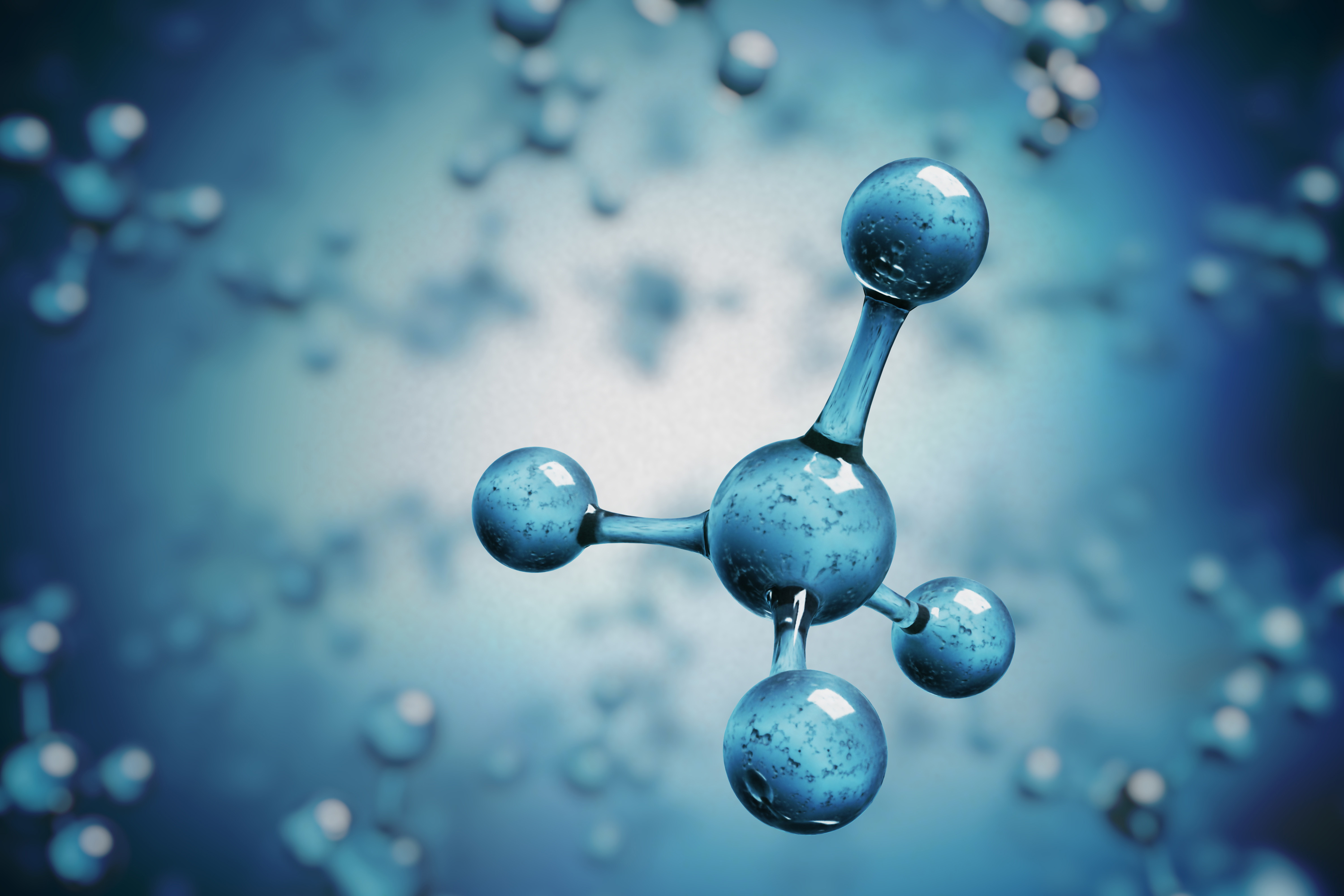

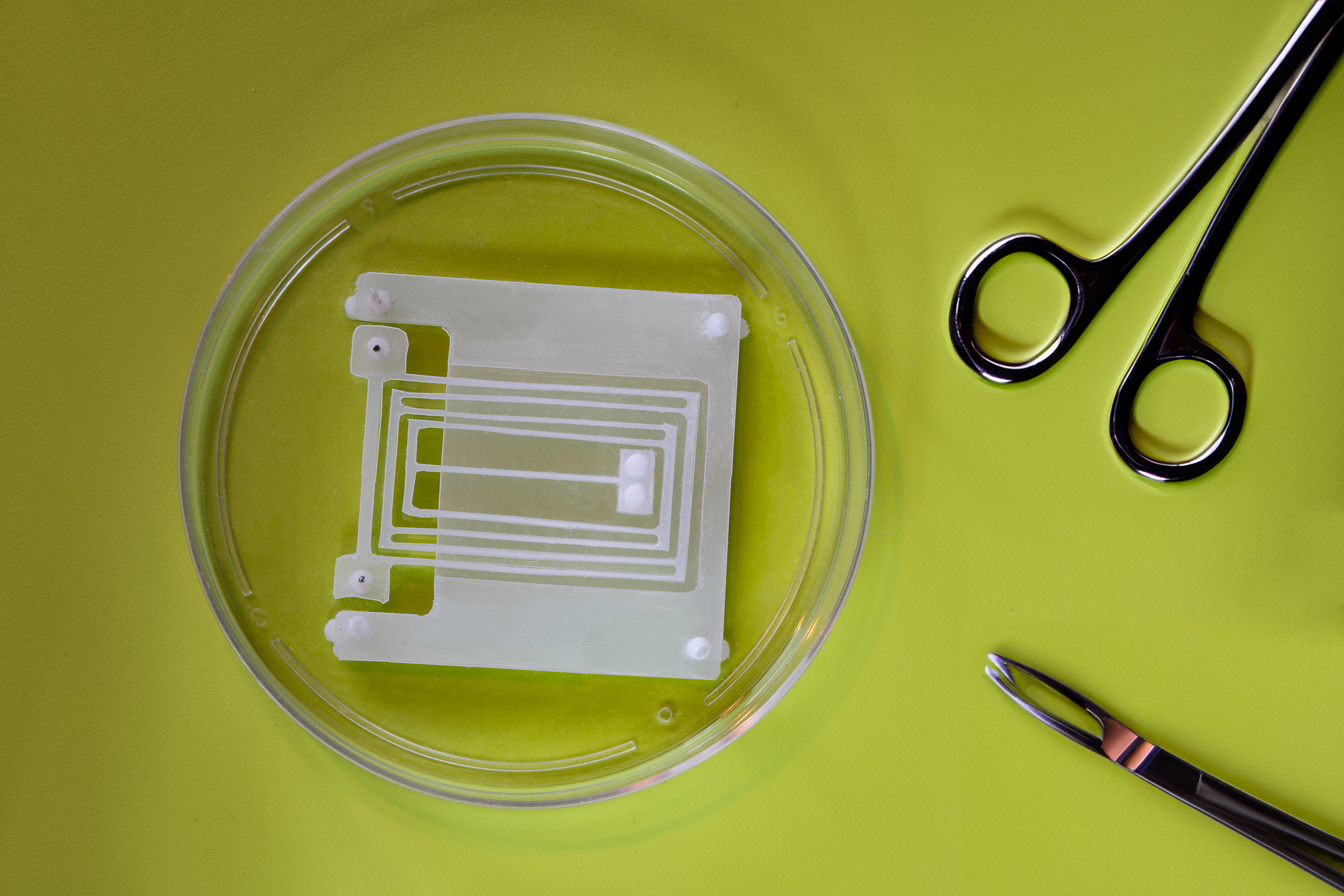

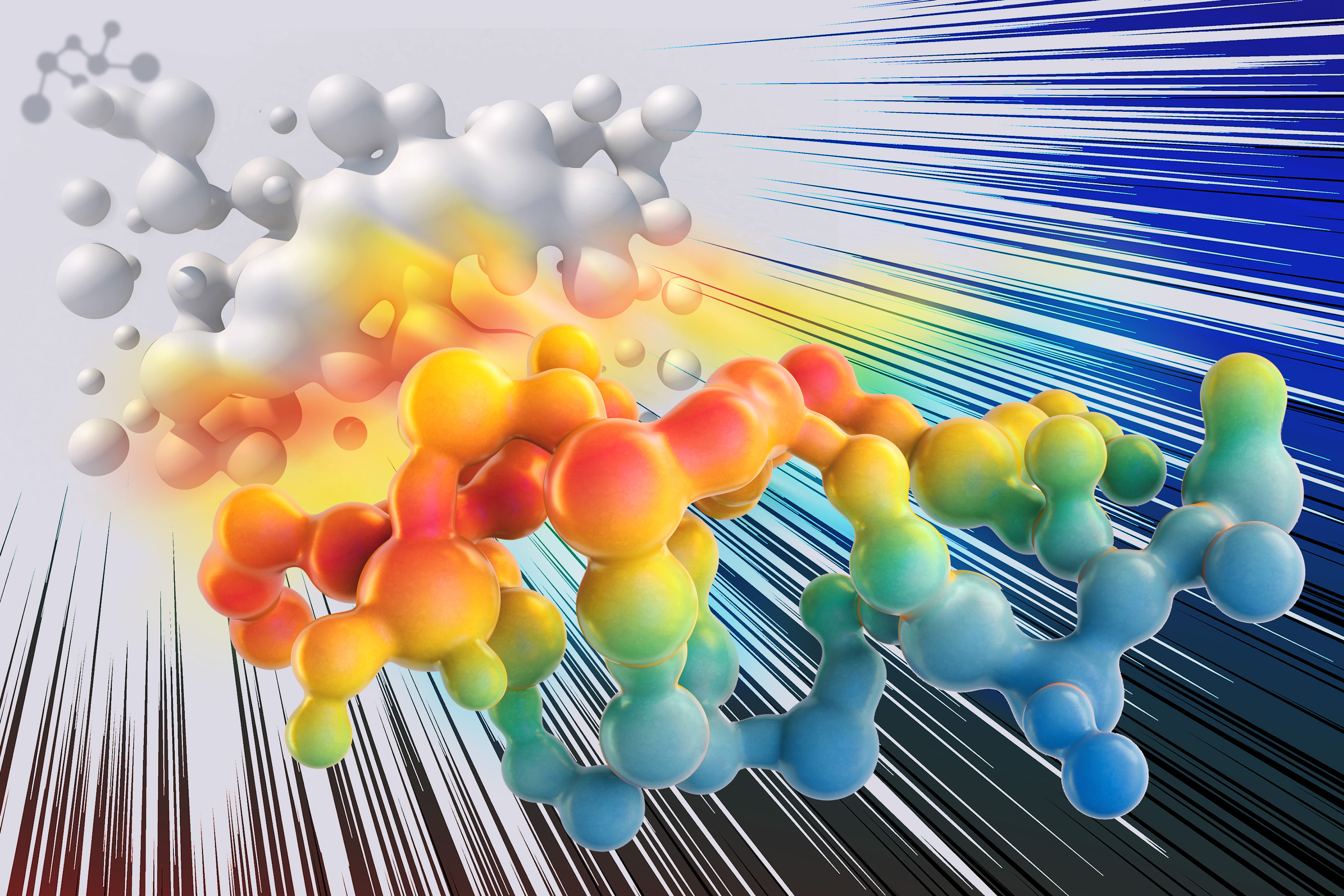

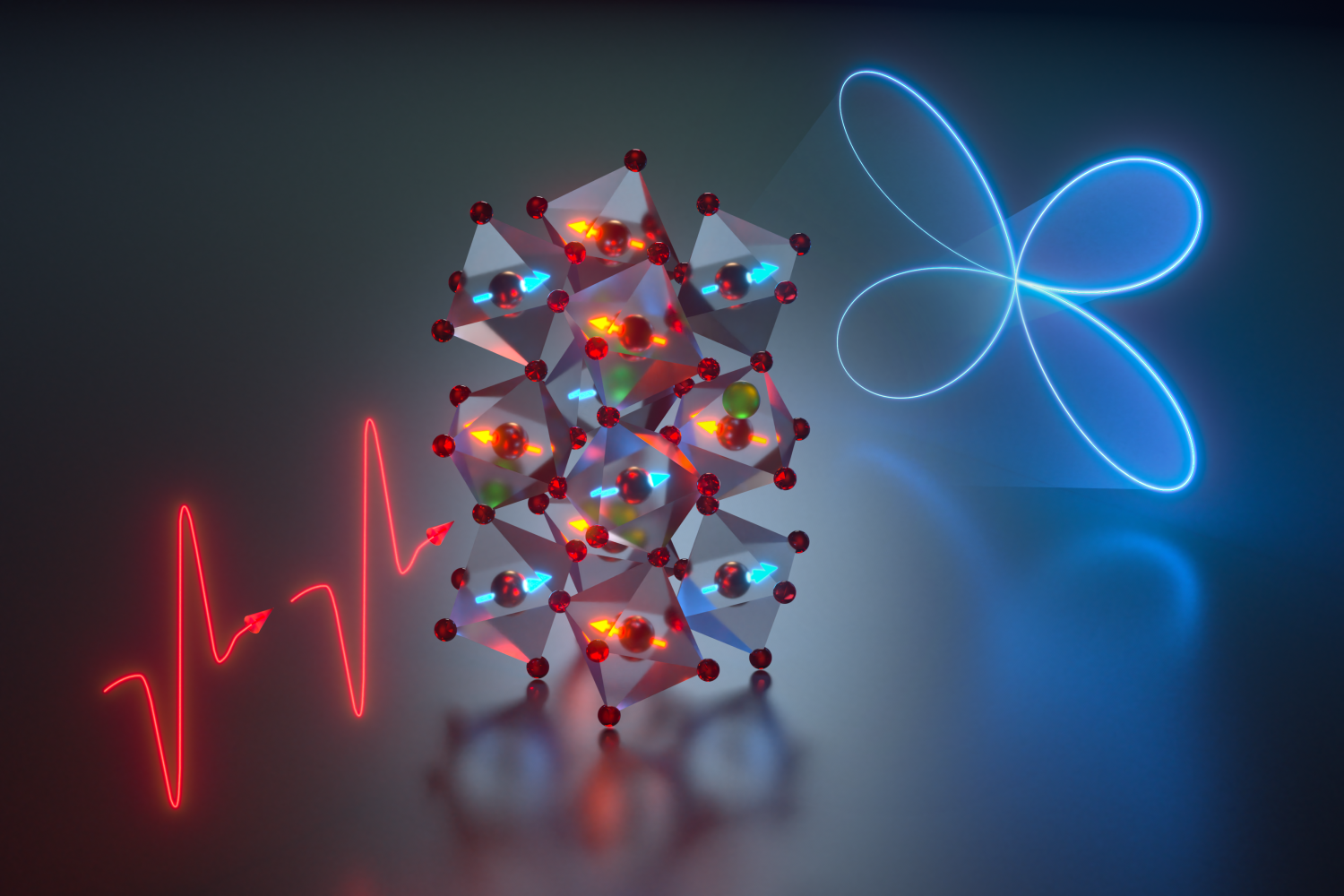

But in work published in 2023, Braakman teamed up with scientists at WHOI, who pioneered ways to measure small organic compounds in seawater. In the lab, they grew various strains of Prochlorococcus under different conditions and characterized what the microbes released. They found that among the major “exudates,” or released molecules, were purines and pyrimidines, which are molecular building blocks of DNA. The molecules also happen to be nitrogen-rich, a fact that puzzled the team. Prochlorococcus are mainly found in ocean regions that are low in nitrogen, so it was assumed they’d want to retain any and all nitrogen-containing compounds they could. Why, then, were they instead throwing such compounds away?

Global symphony

In their new study, the researchers took a deep dive into the details of Prochlorococcus’ cross-feeding and how it influences various types of ocean microbes.

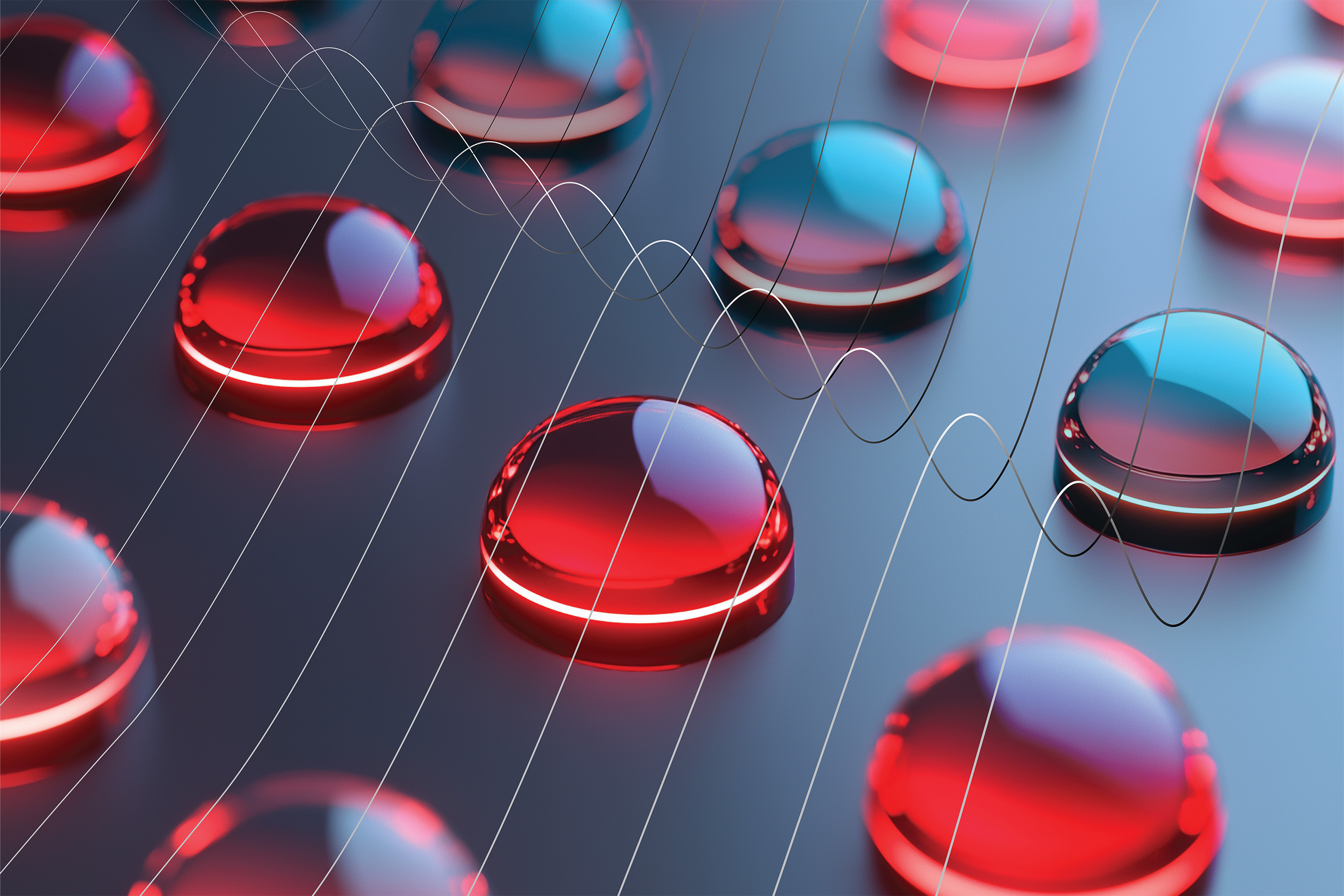

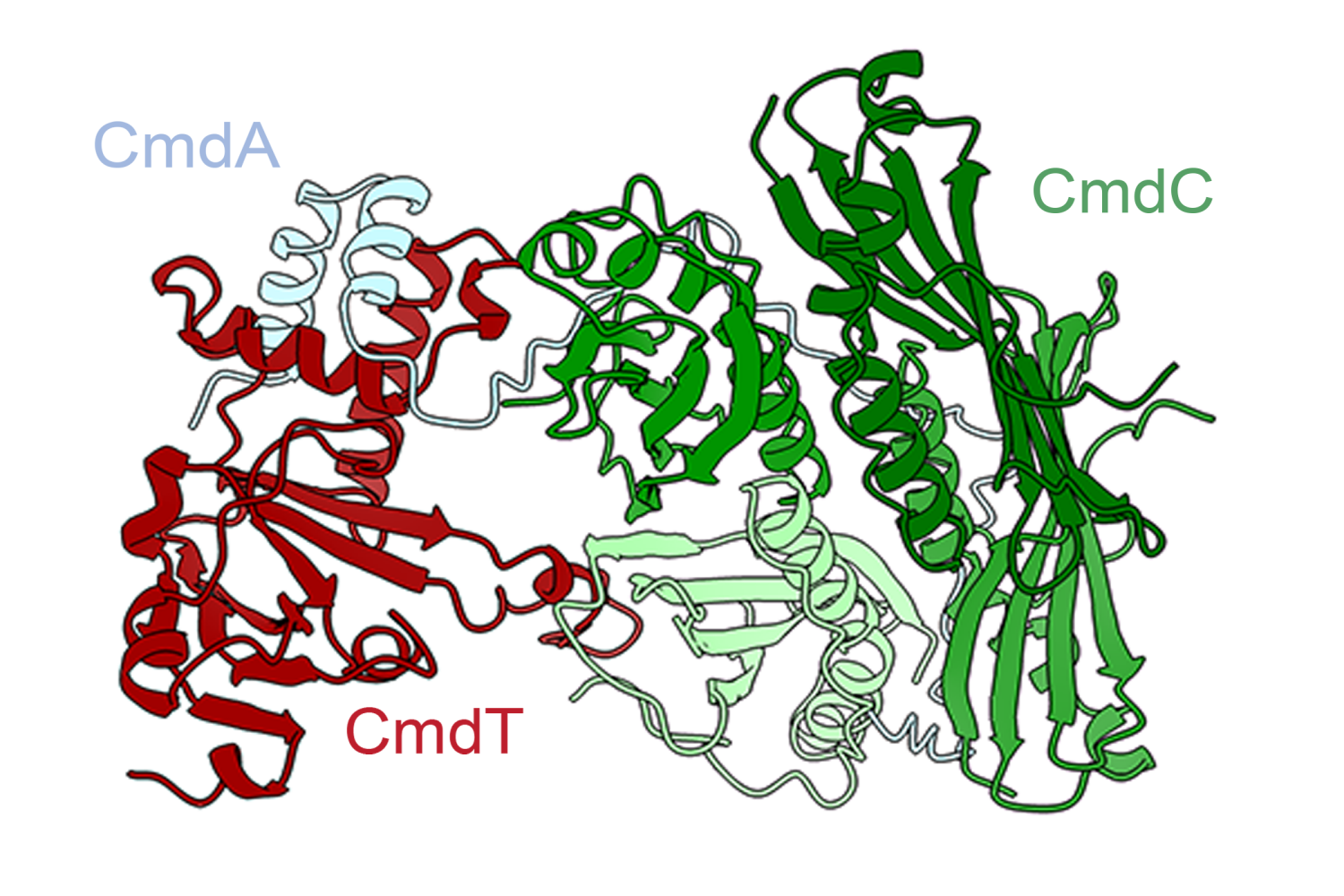

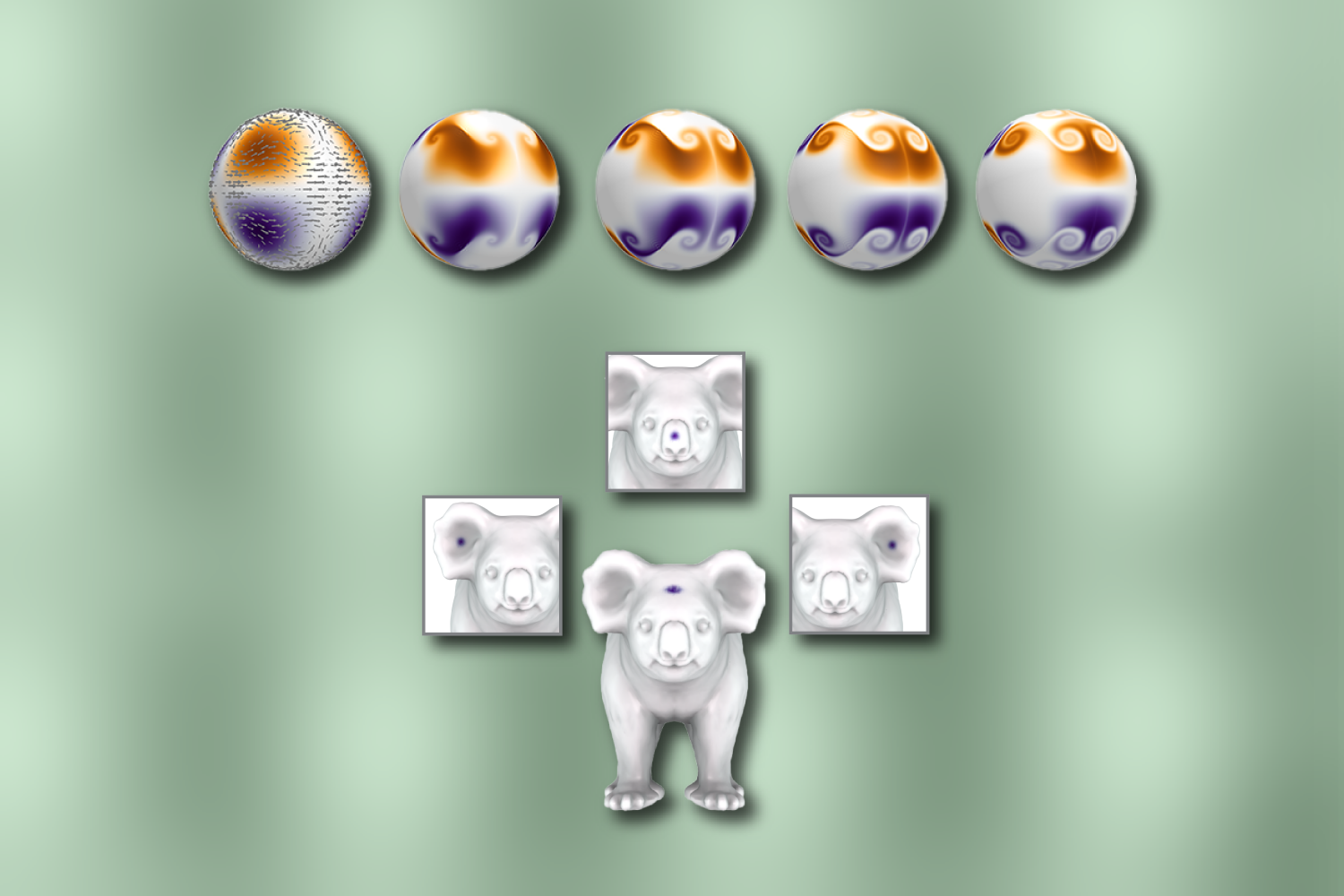

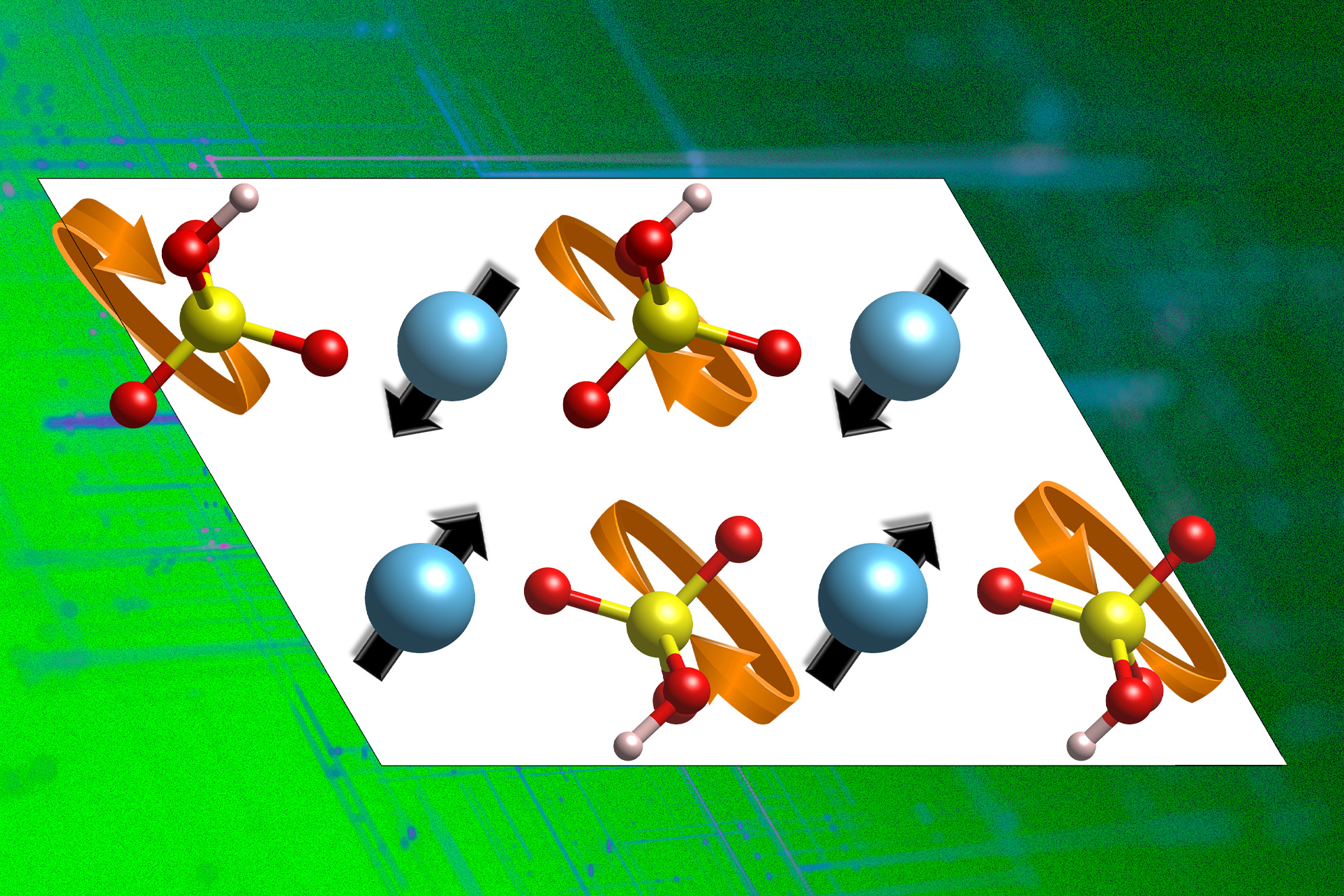

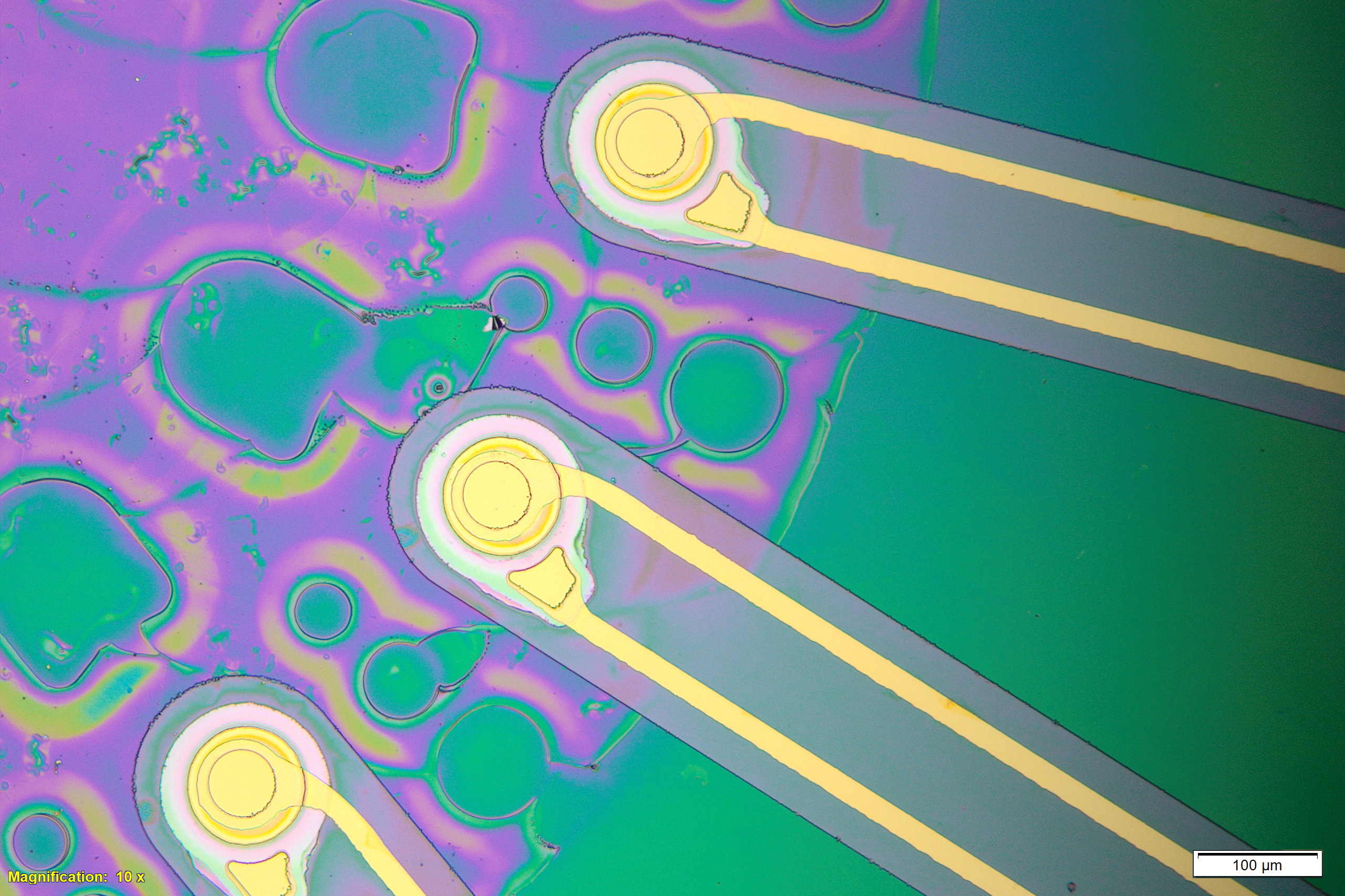

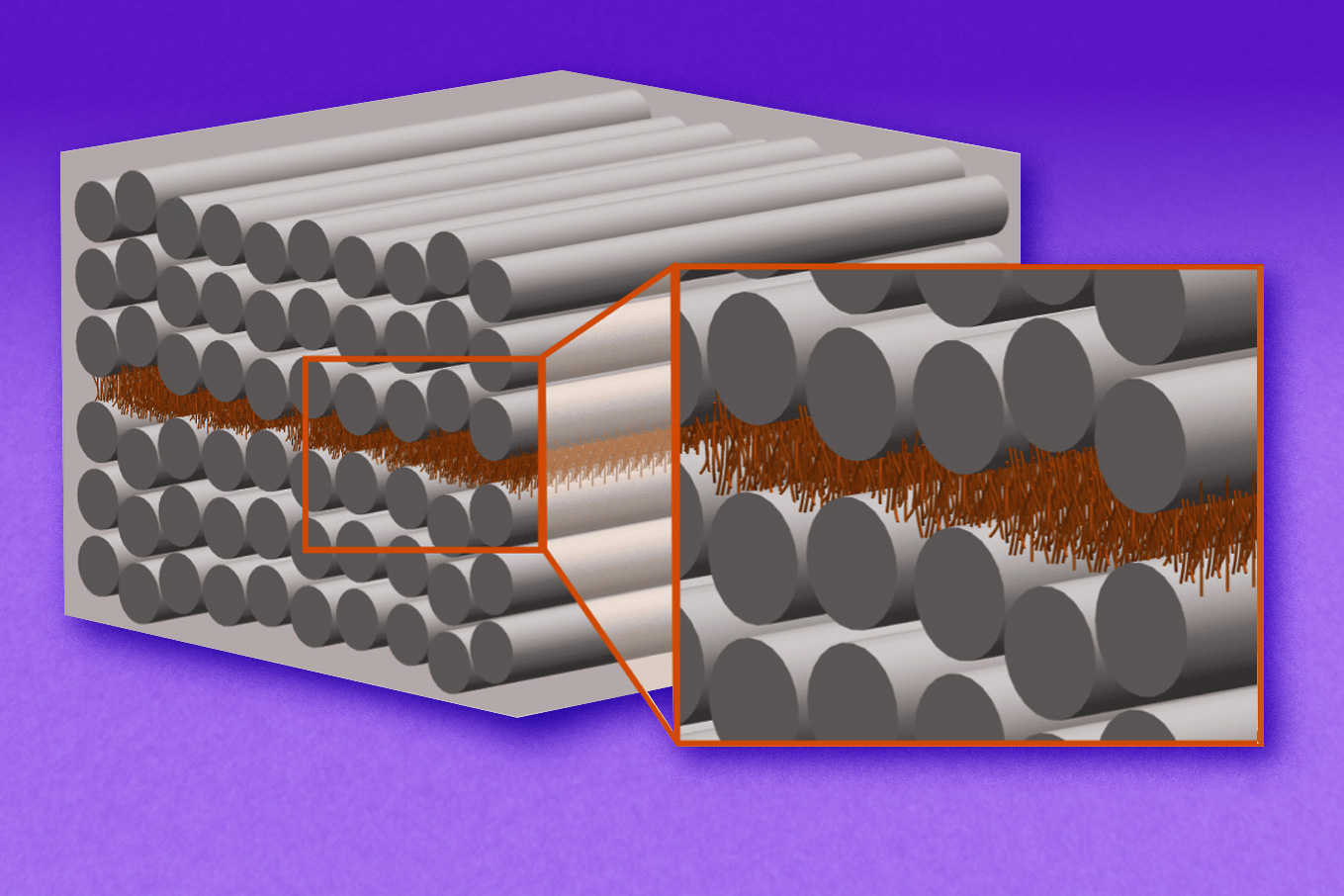

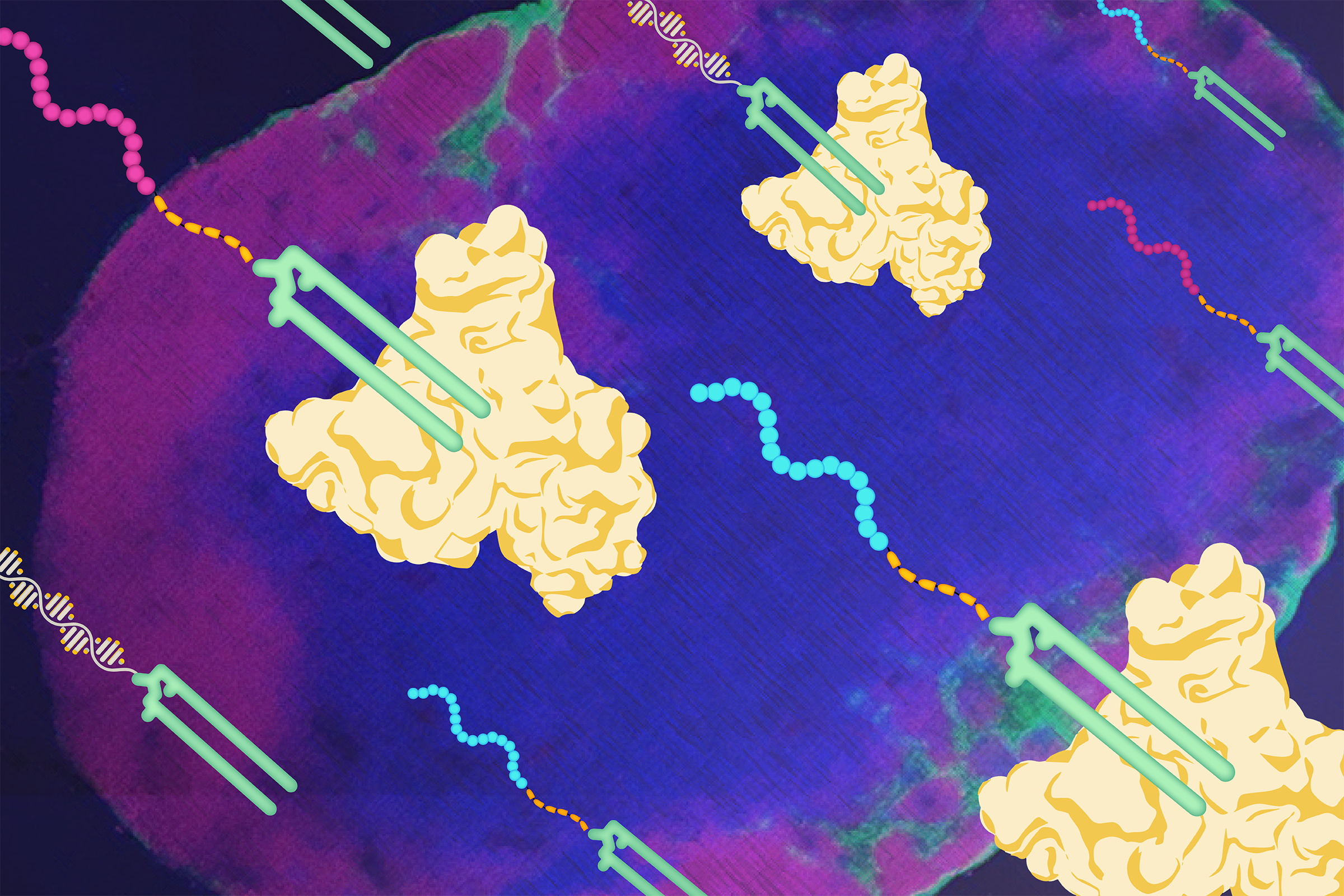

They set out to study how Prochlorococcus use purine and pyrimidine in the first place, before expelling the compounds into their surroundings. They compared published genomes of the microbes, looking for genes that encode purine and pyrimidine metabolism. Tracing the genes forward through the genomes, the team found that once the compounds are produced, they are used to make DNA and replicate the microbes’ genome. Any leftover purine and pyrimidine is recycled and used again, though a fraction of the stuff is ultimately released into the environment. Prochlorococcus appear to make the most of the compounds, then cast off what they can’t use.

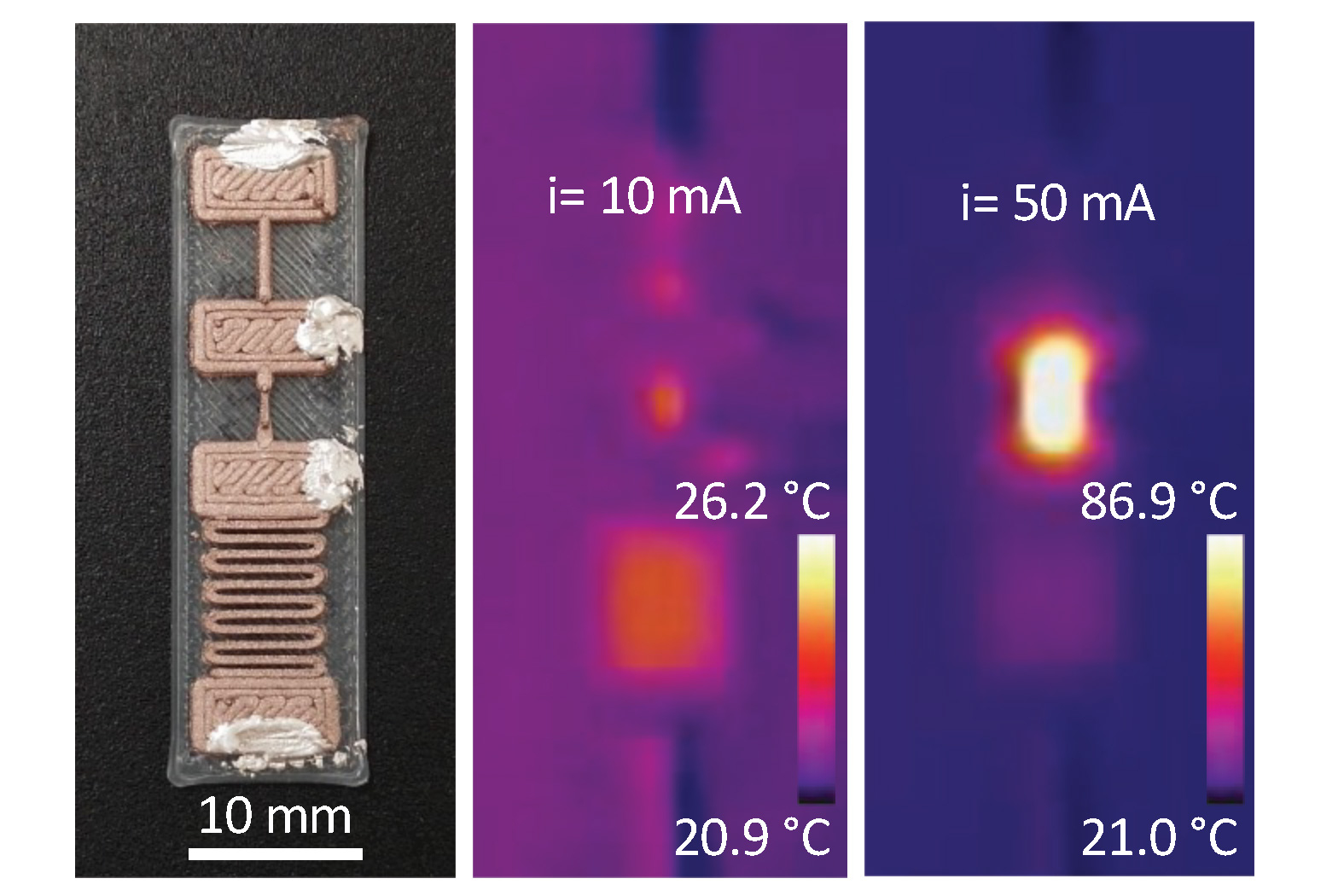

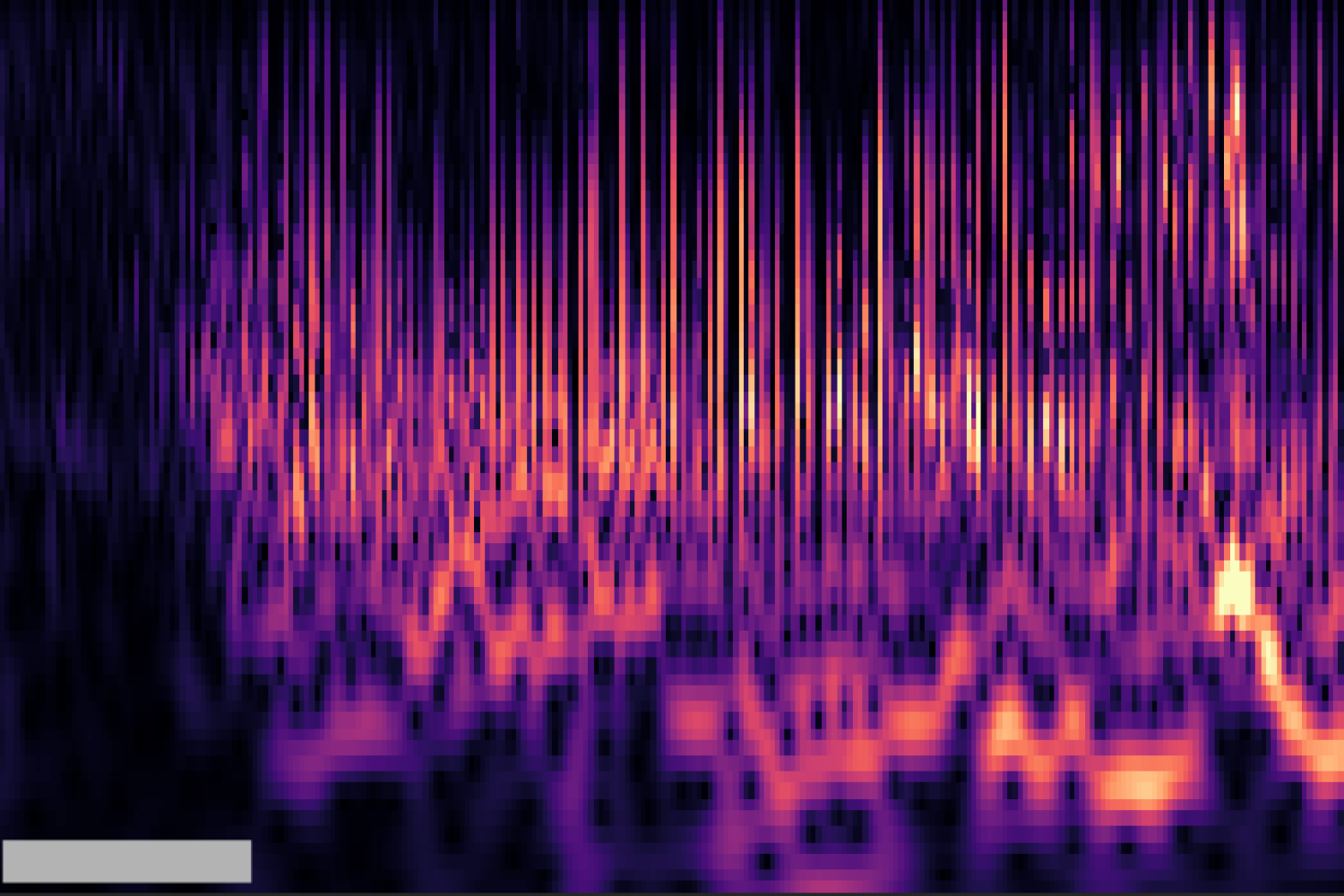

The team also looked to gene expression data and found that genes involved in recycling purine and pyrimidine peak several hours after the recognized peak in genome replication that occurs at dusk. The question then was: What could be benefiting from this nightly shedding?

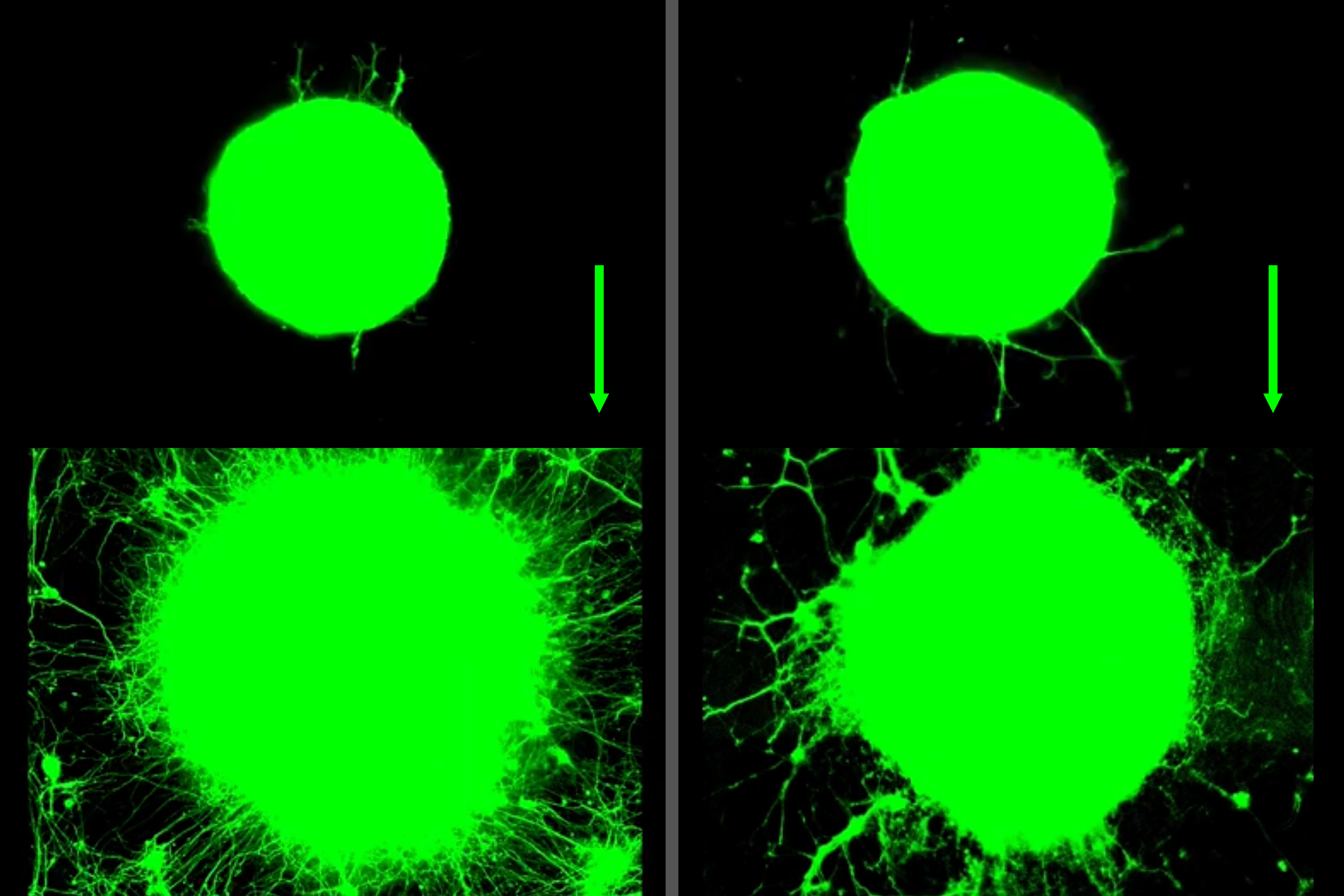

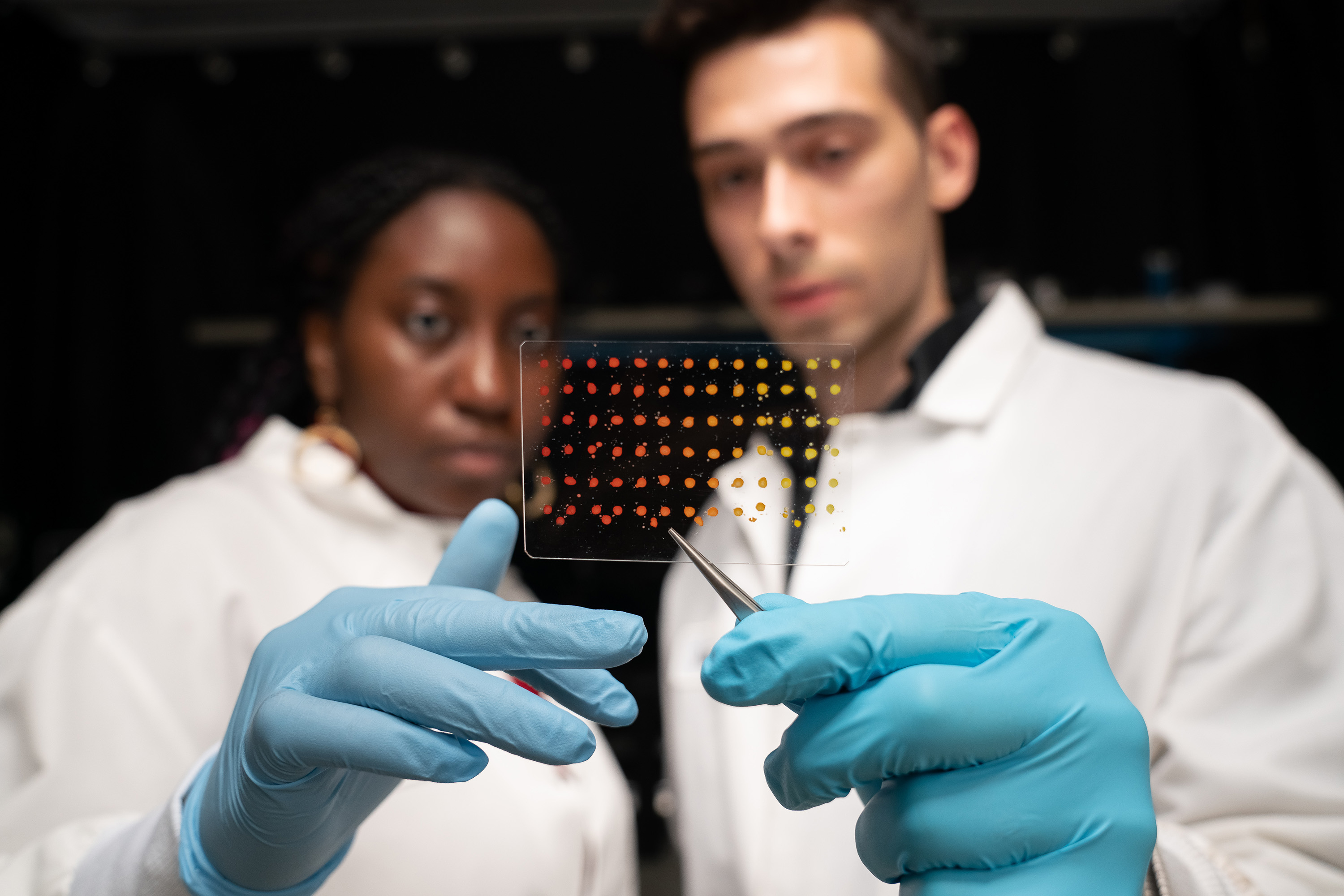

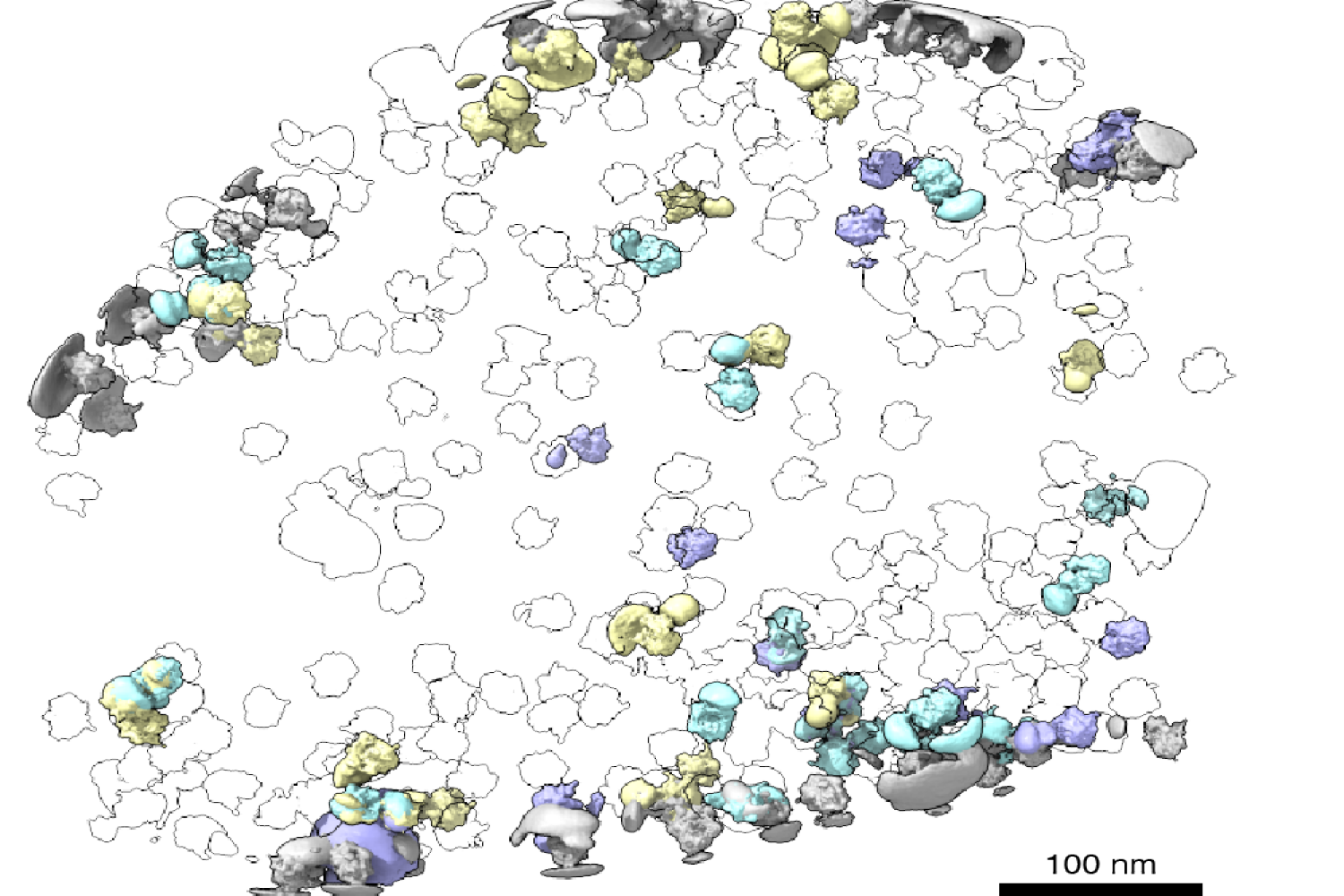

For this, the team looked at the genomes of more than 300 heterotrophic microbes, organisms that consume organic carbon rather than making it themselves through photosynthesis. They suspected that such carbon-feeders could be likely consumers of Prochlorococcus’ organic rejects. They found most of the heterotrophs contained genes that take up either purine or pyrimidine, or in some cases, both, suggesting microbes have evolved along different paths in terms of how they cross-feed.

The group zeroed in on one purine-preferring microbe, SAR11, as it is the most abundant heterotrophic microbe in the ocean. When they then compared the genes across different strains of SAR11, they found that various types use purines for different purposes, from simply taking them up and using them intact to breaking them down for their energy, carbon, or nitrogen. What could explain the diversity in how the microbes were using Prochlorococcus’ cast-offs?

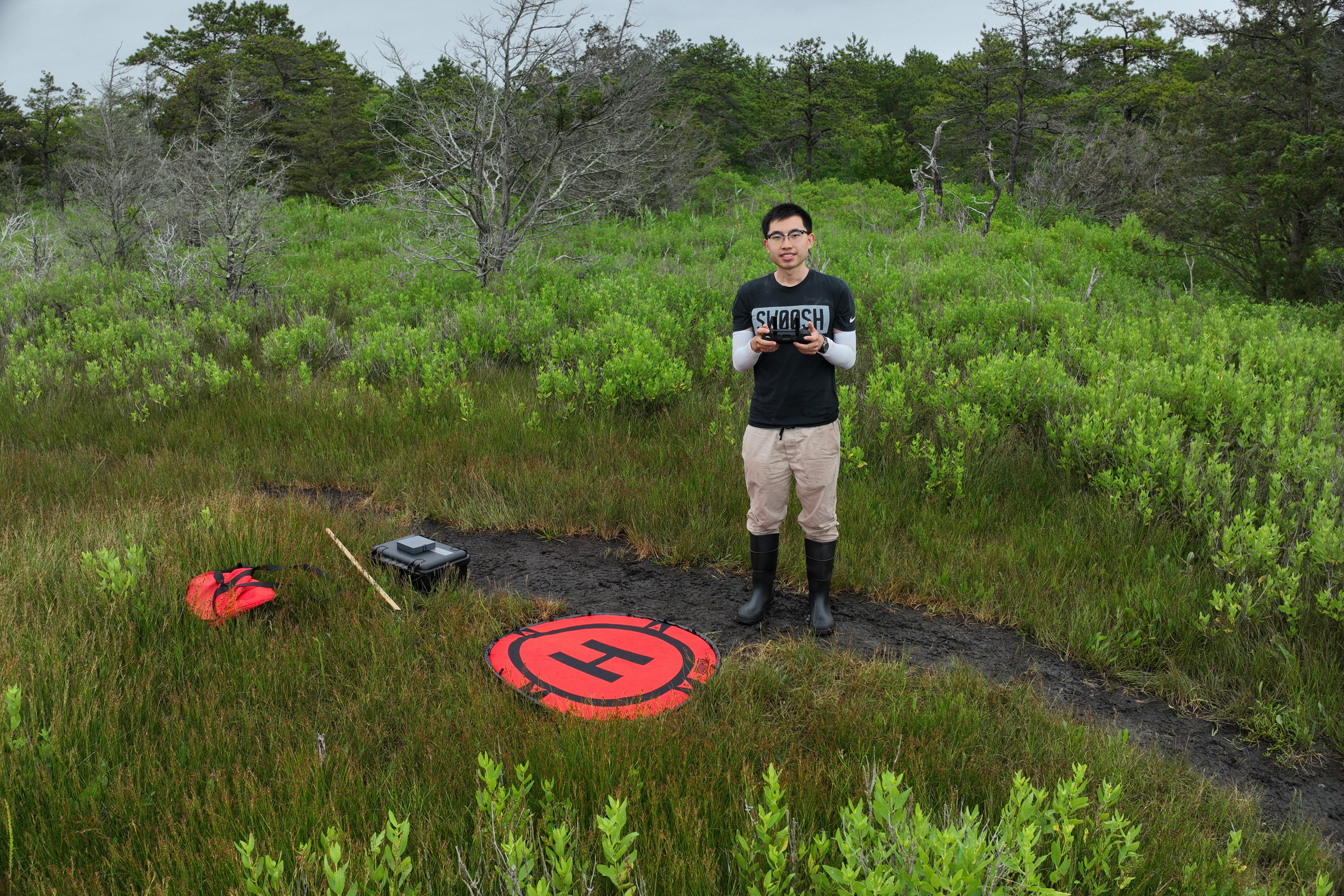

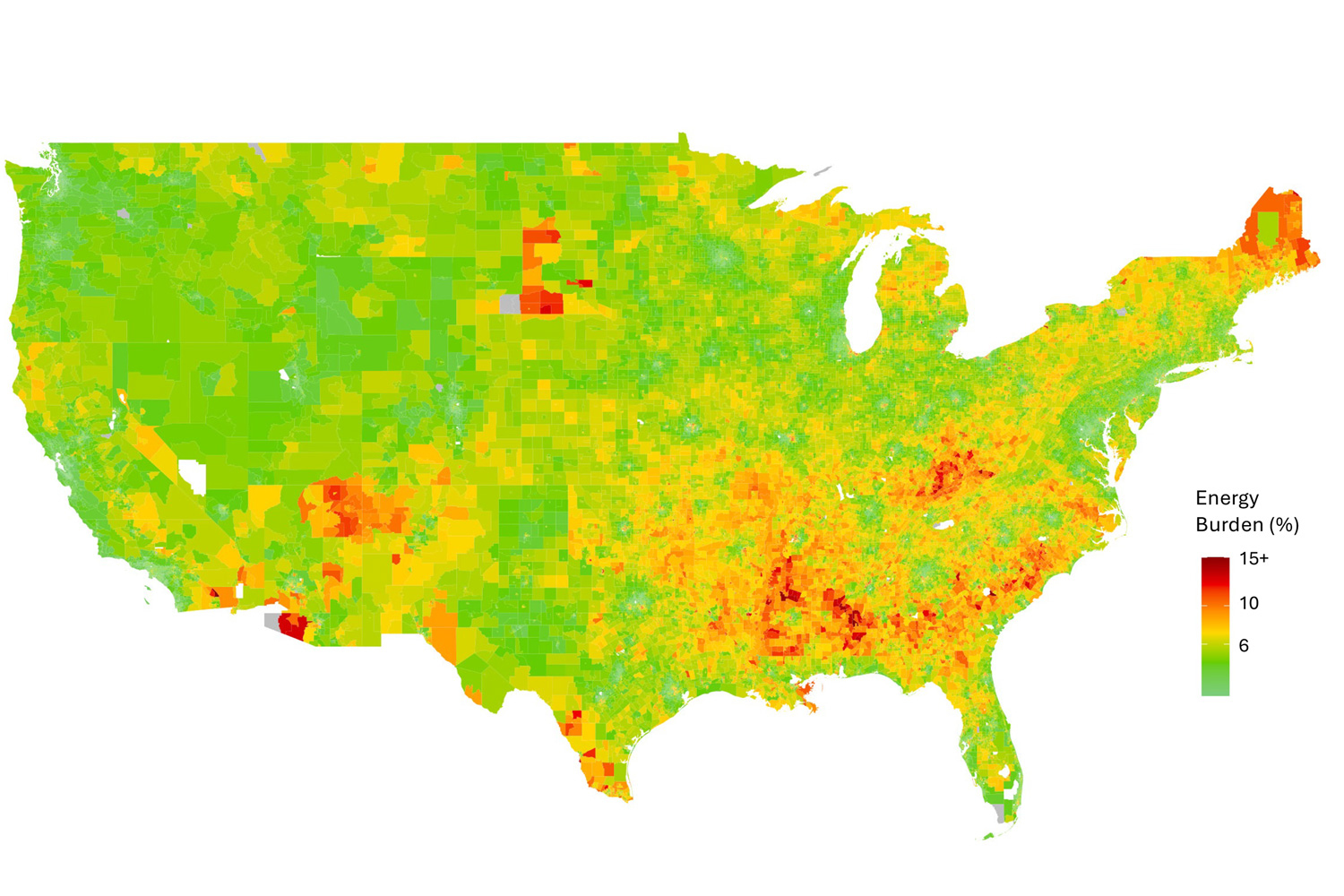

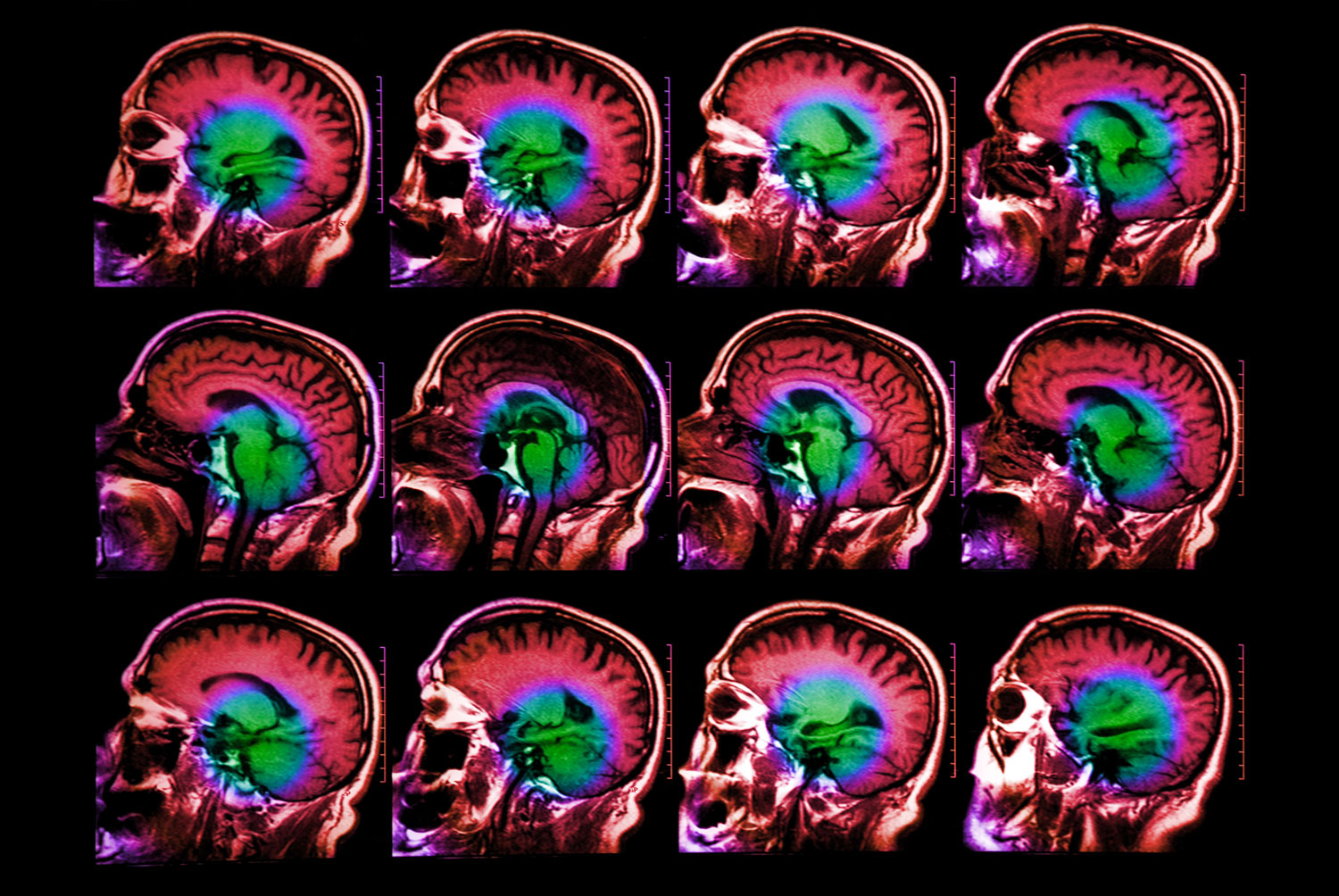

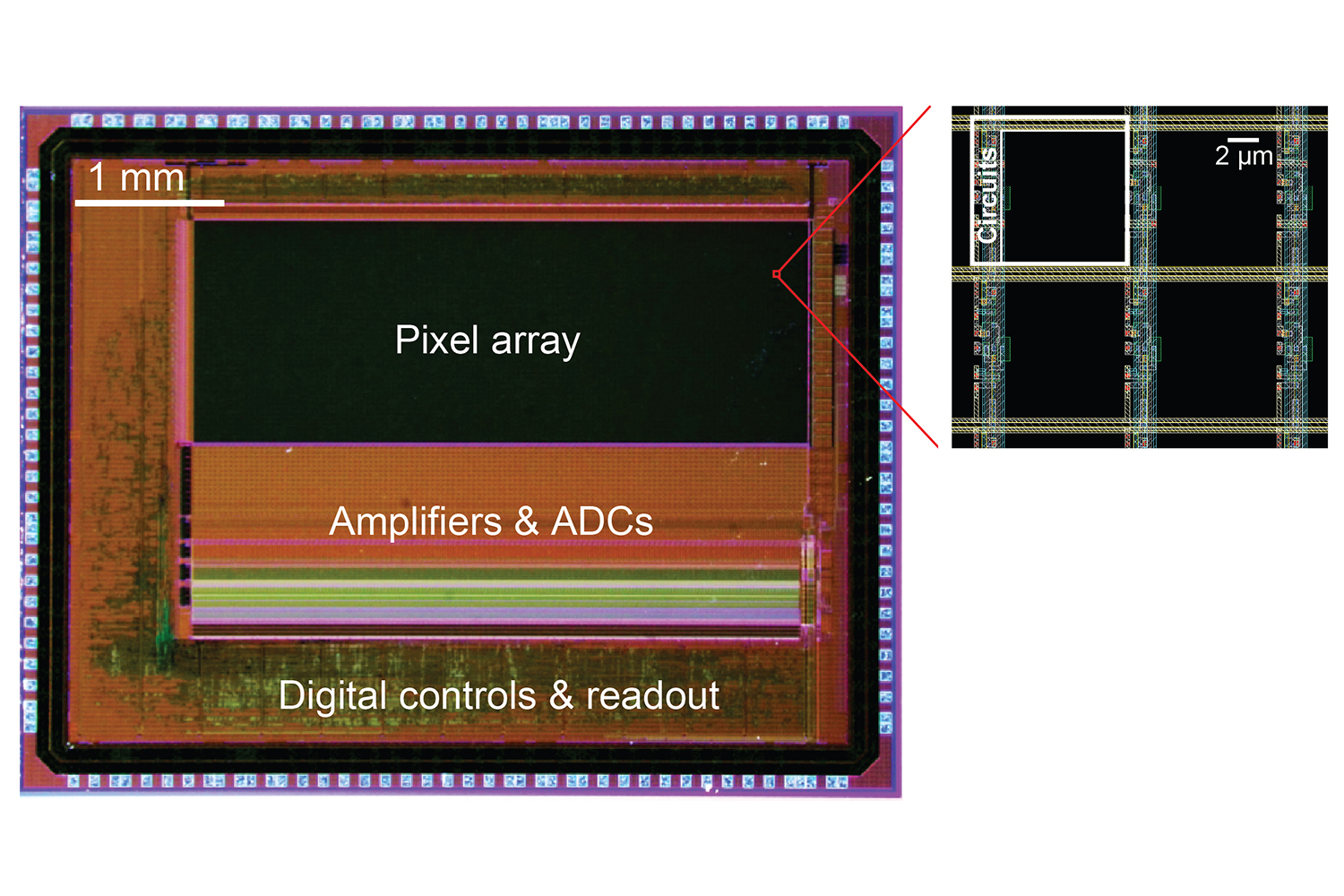

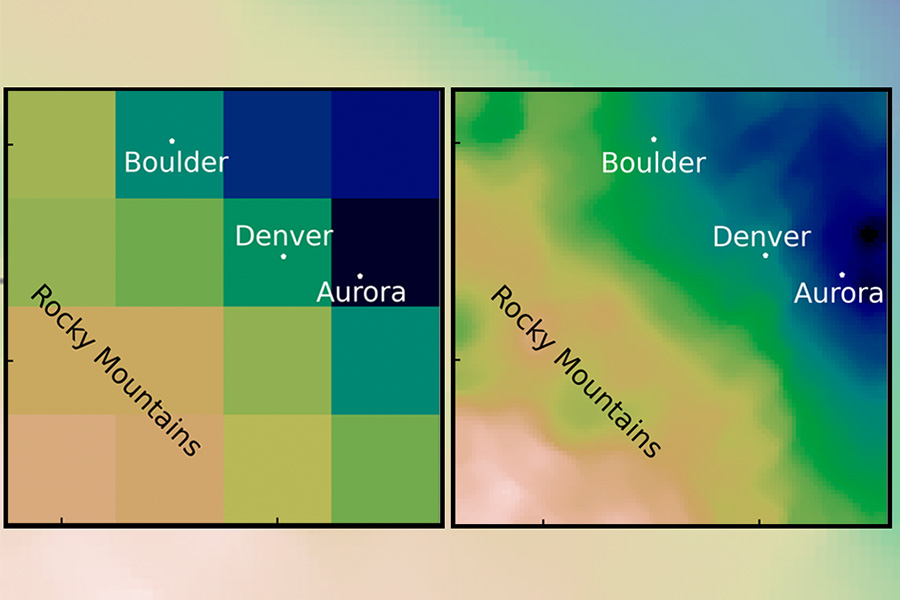

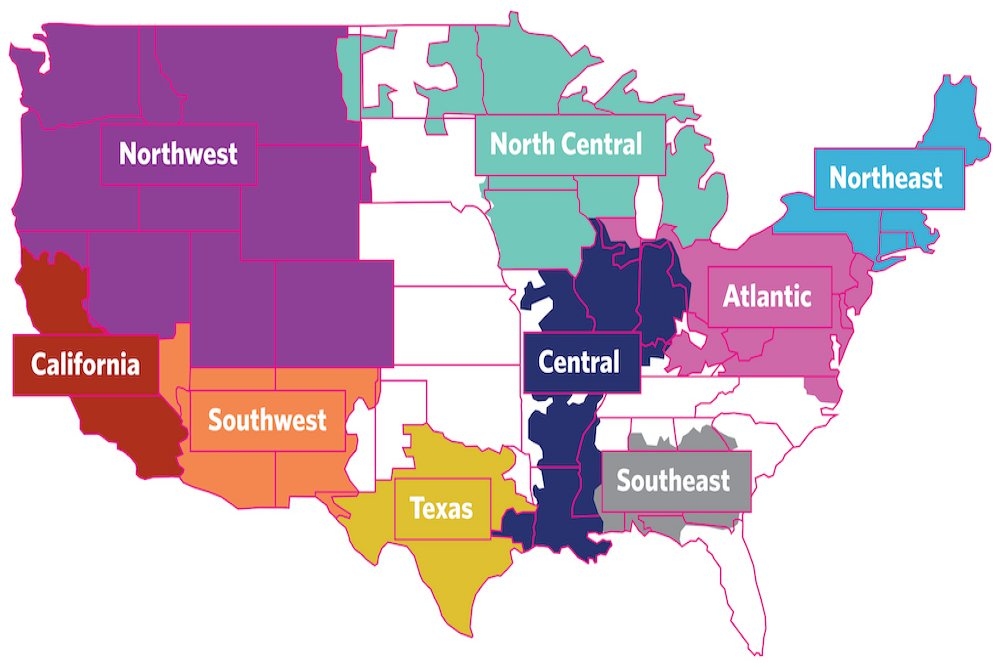

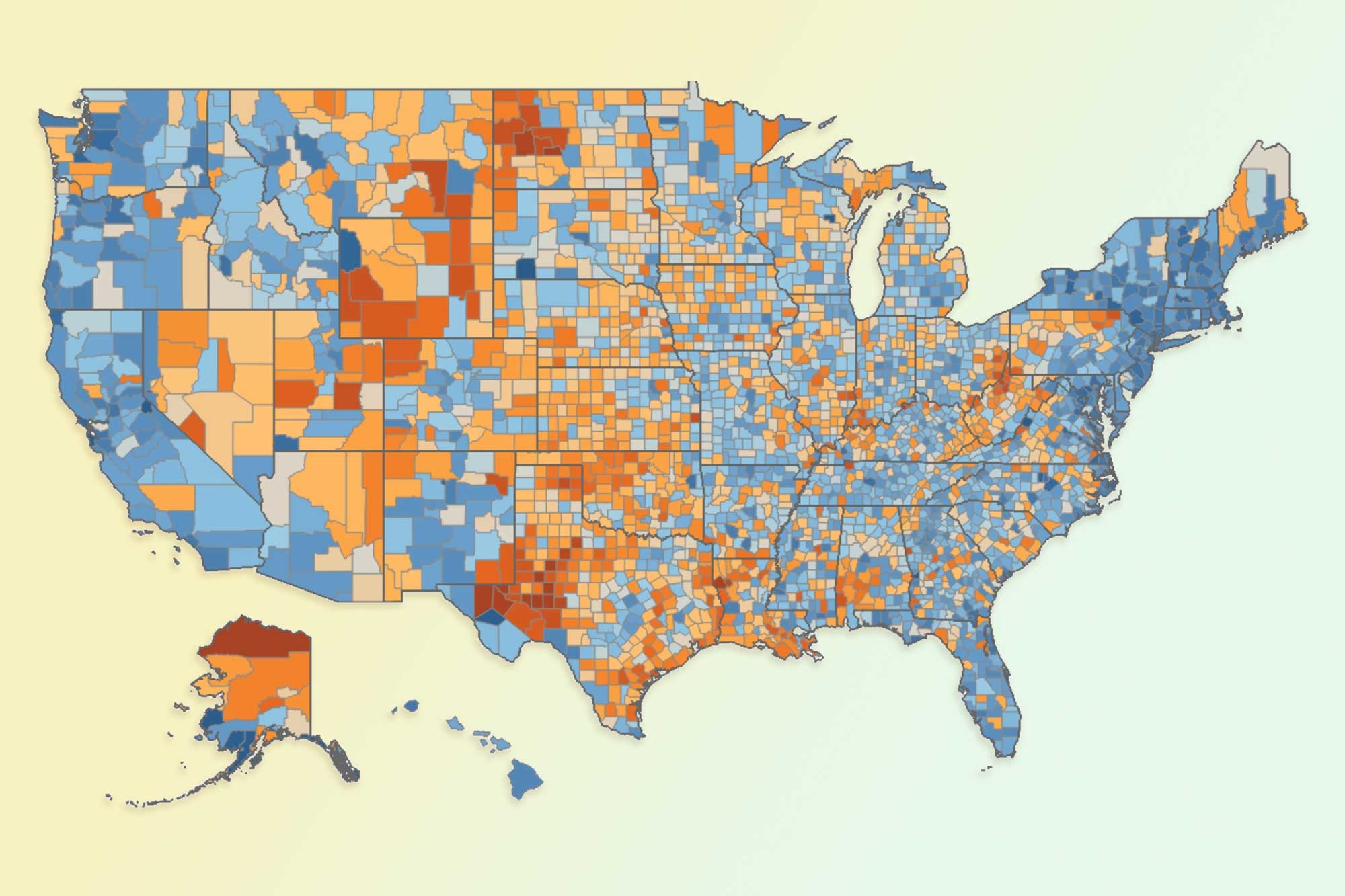

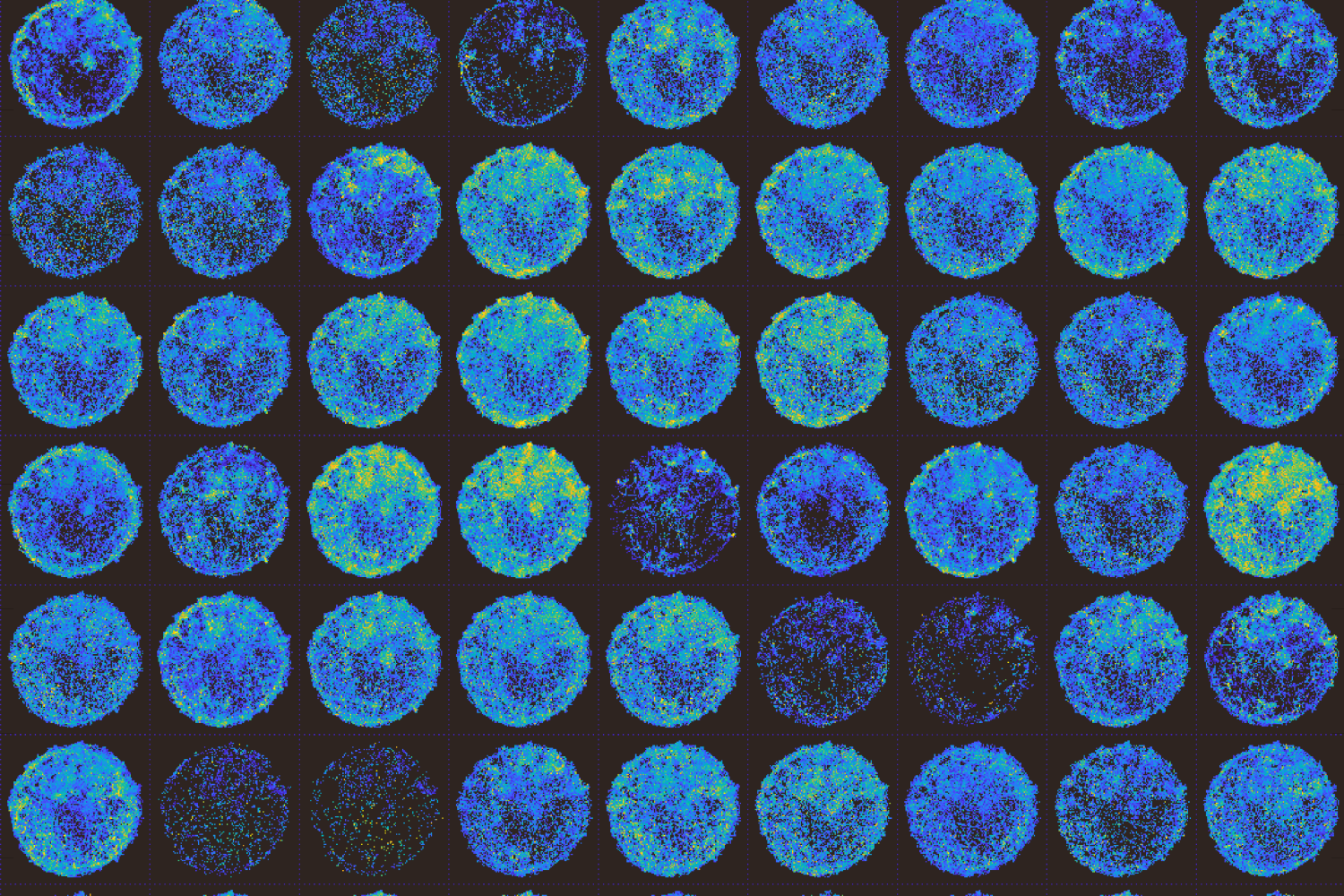

It turns out the local environment plays a big role. Braakman and his collaborators performed a metagenome analysis in which they compared the collectively sequenced genomes of all microbes in over 600 seawater samples from around the world, focusing on SAR11 bacteria. Each metagenome came with measurements of the environmental conditions and the geographic location at which the sample was collected. This analysis showed that the bacteria gobble up purine for its nitrogen when the nitrogen in seawater is low, and for its carbon or energy when nitrogen is in surplus, revealing the selective pressures shaping these communities in different ocean regimes.

“The work here suggests that microbes in the ocean have developed relationships that advance their growth potential in ways we don’t expect,” says co-author Kujawinski.

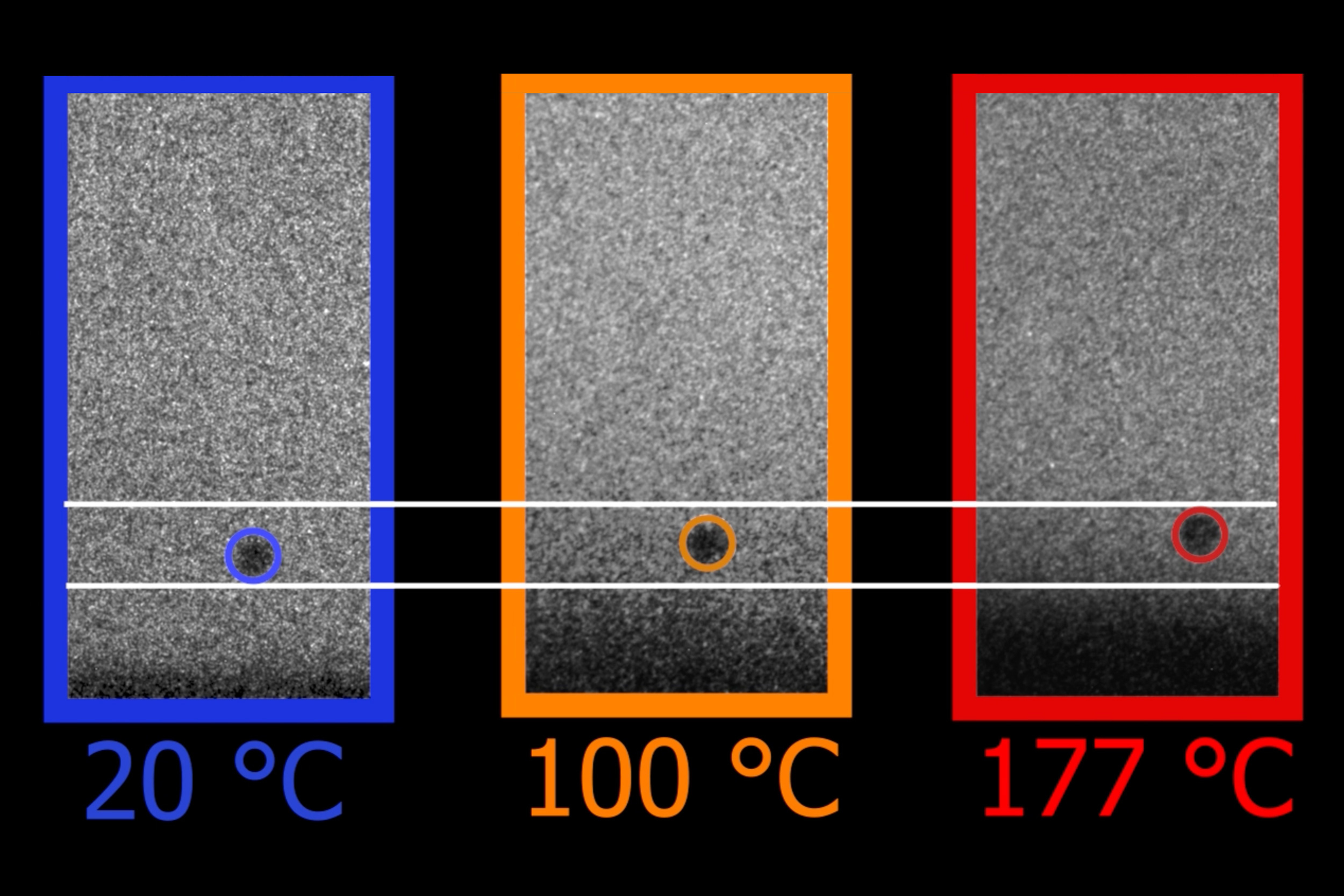

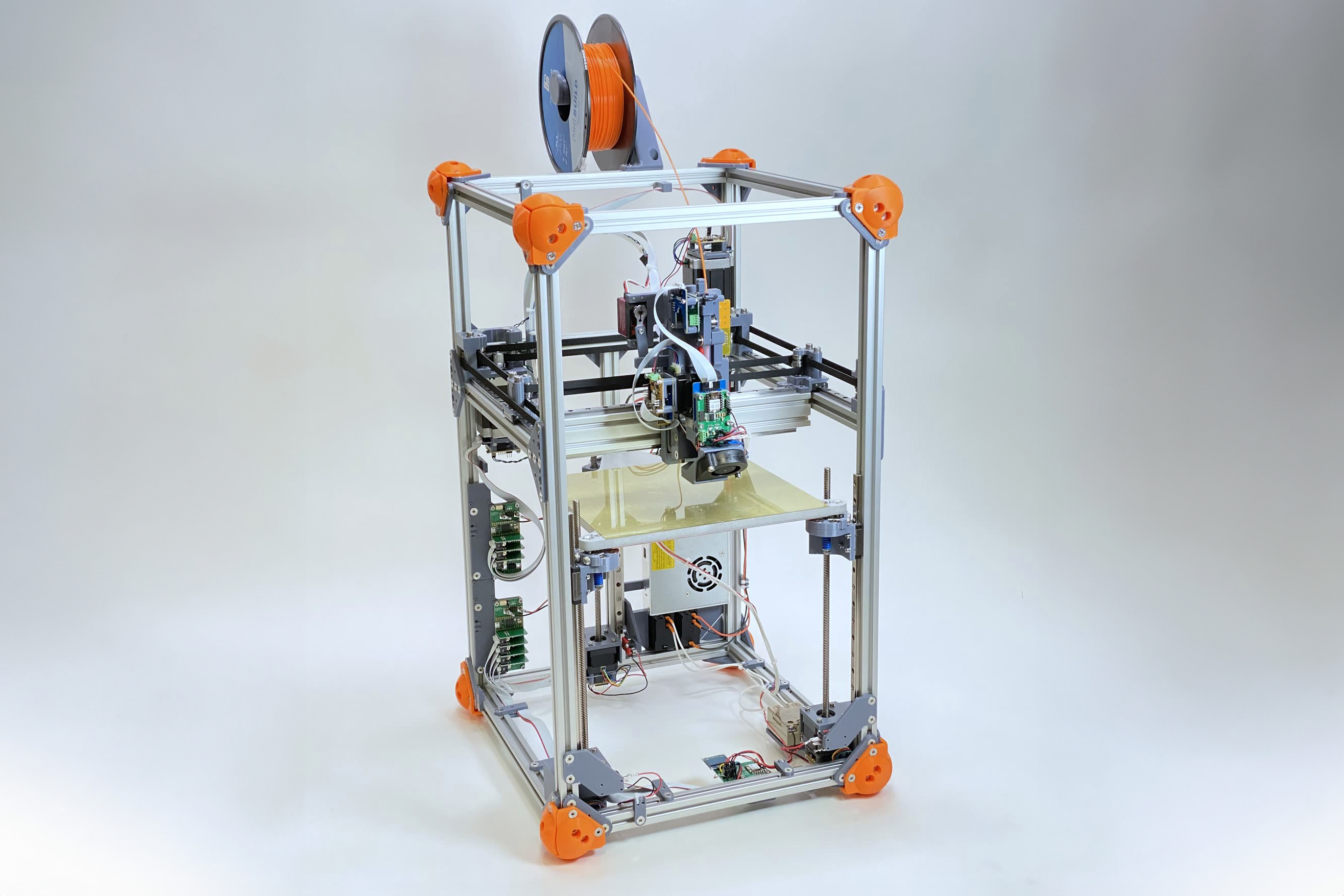

Finally, the team carried out a simple experiment in the lab, to see if they could directly observe a mechanism by which purine acts on SAR11. They grew the bacteria in cultures, exposed them to various concentrations of purine, and unexpectedly found that it causes them to slow down their normal metabolic activities and even their growth. However, when the researchers put these same cells under environmentally stressful conditions, they continued growing as strong and healthy cells, as if the metabolic pausing by purines helped prime them for growth, thereby avoiding the effects of the stress.

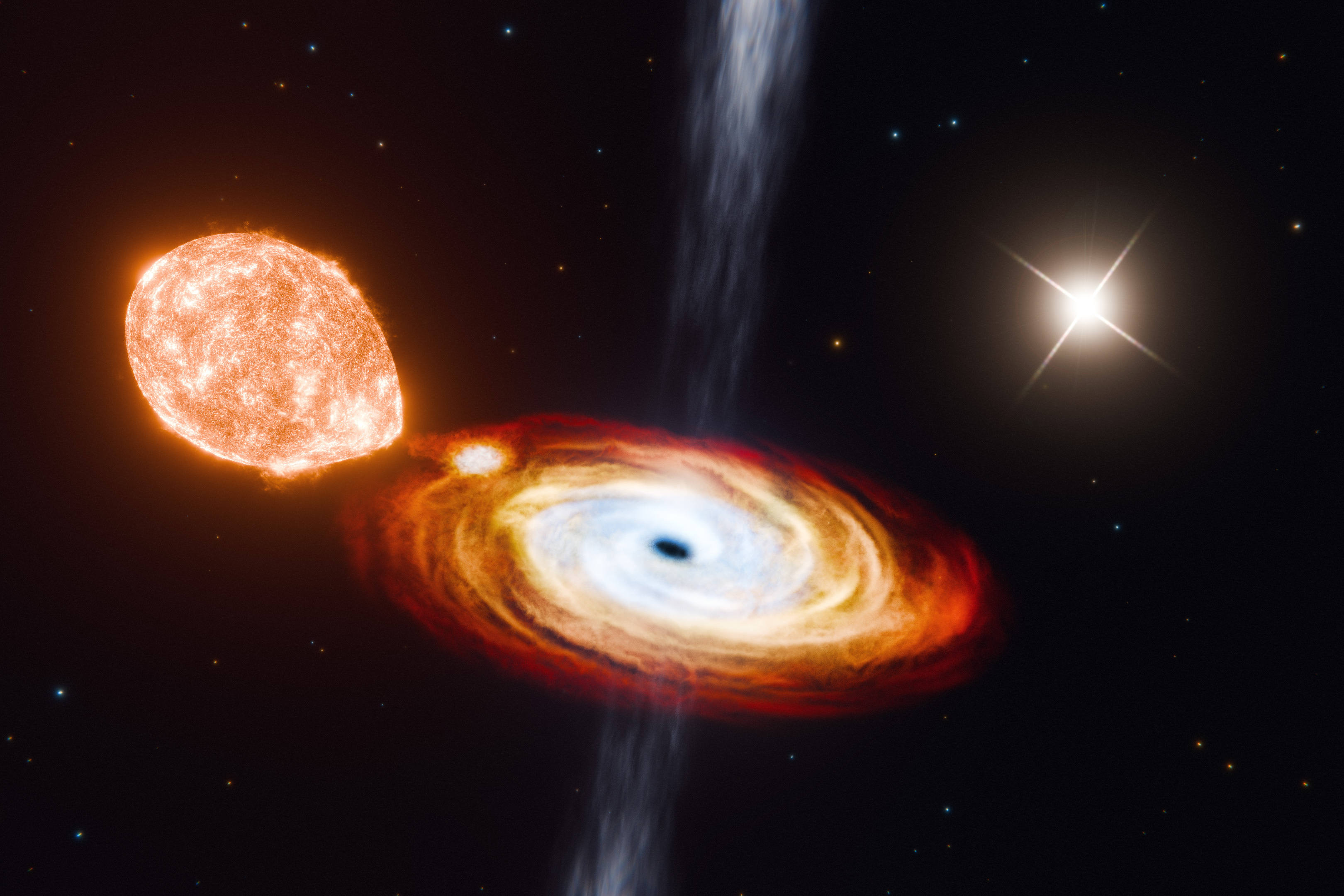

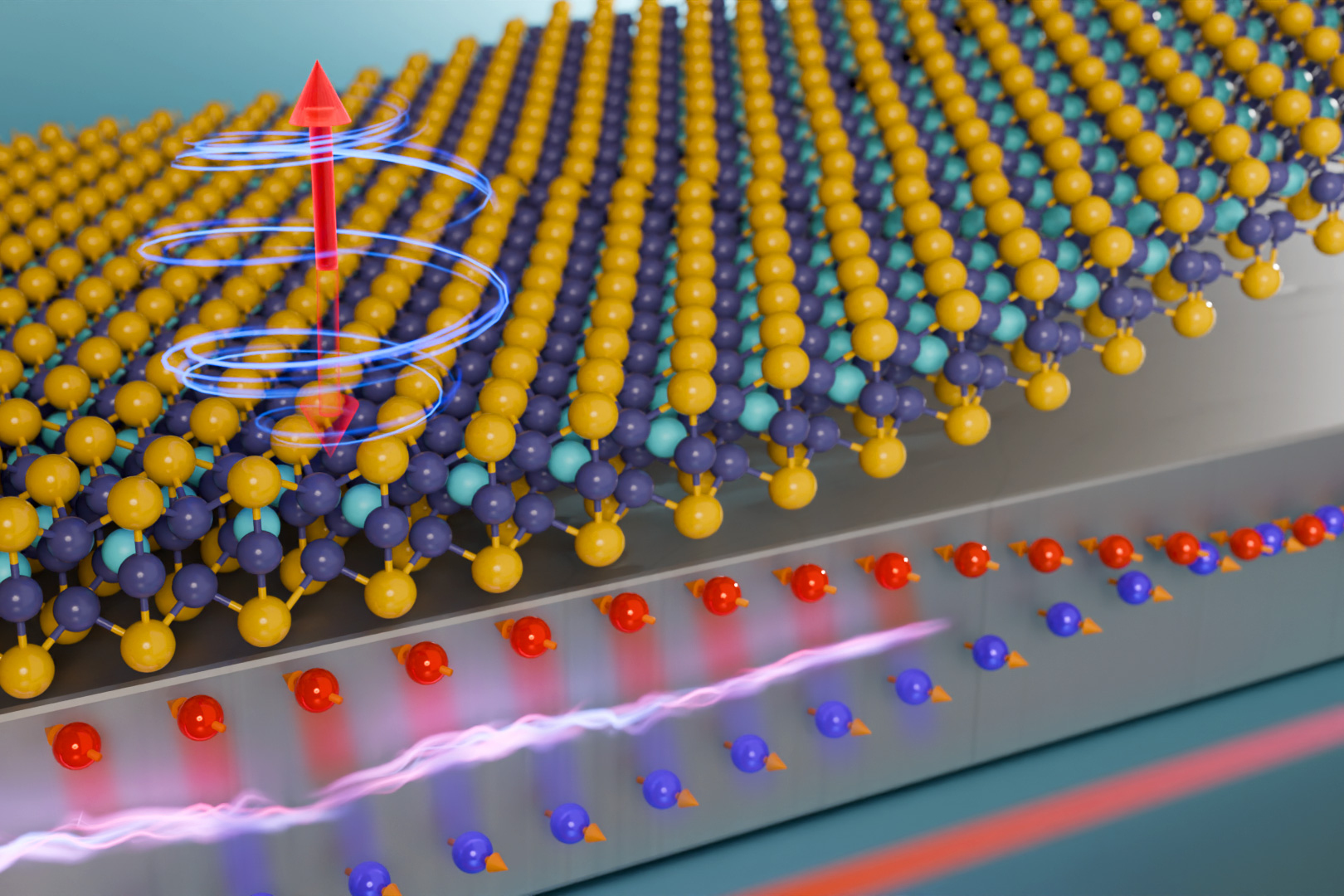

“When you think about the ocean, where you see this daily pulse of purines being released by Prochlorococcus, this provides a daily inhibition signal that could be causing a pause in SAR11 metabolism, so that the next day when the sun comes out, they are primed and ready,” Braakman says. “So we think Prochlorococcus is acting as a conductor in the daily symphony of ocean metabolism, and cross-feeding is creating a global synchronization among all these microbial cells.”

This work was supported, in part, by the Simons Foundation and the National Science Foundation.

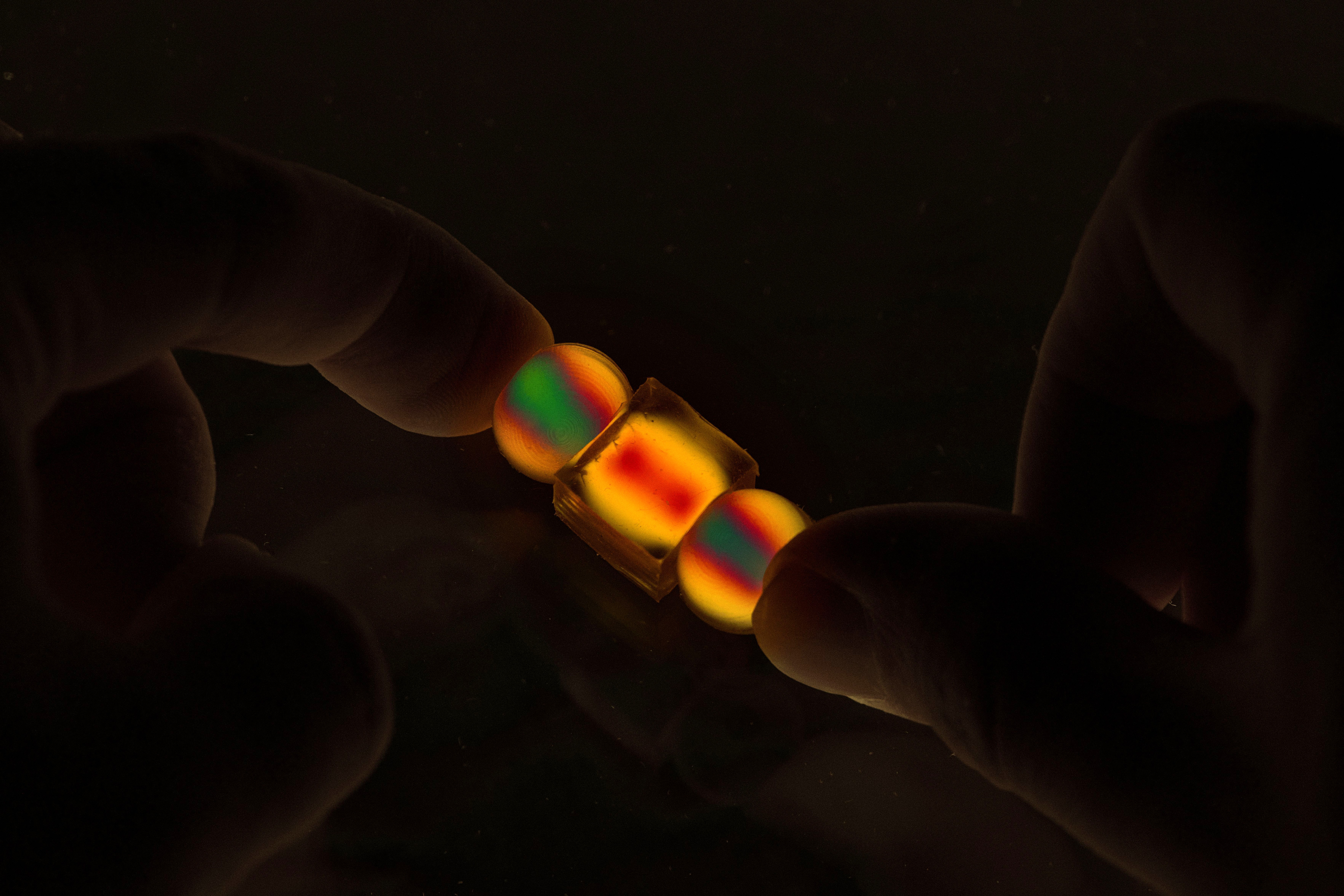

© Image: Jose-Luis Olivares, MIT