
Vulcan Centaur rocket is 'go' for historic Jan. 8 launch of private Peregrine moon lander

© ULA

Grant to validate blood test for early breast cancer detection

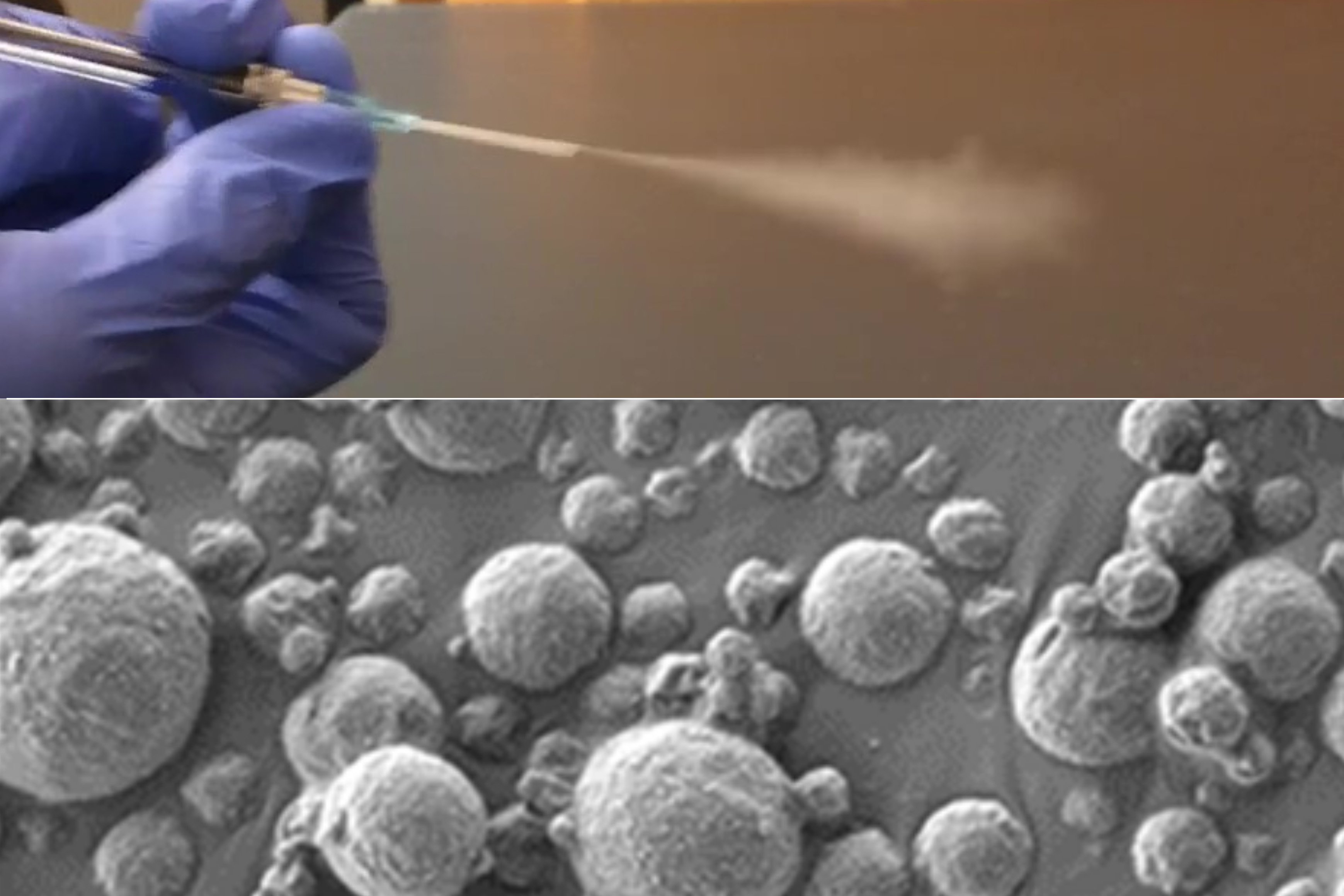

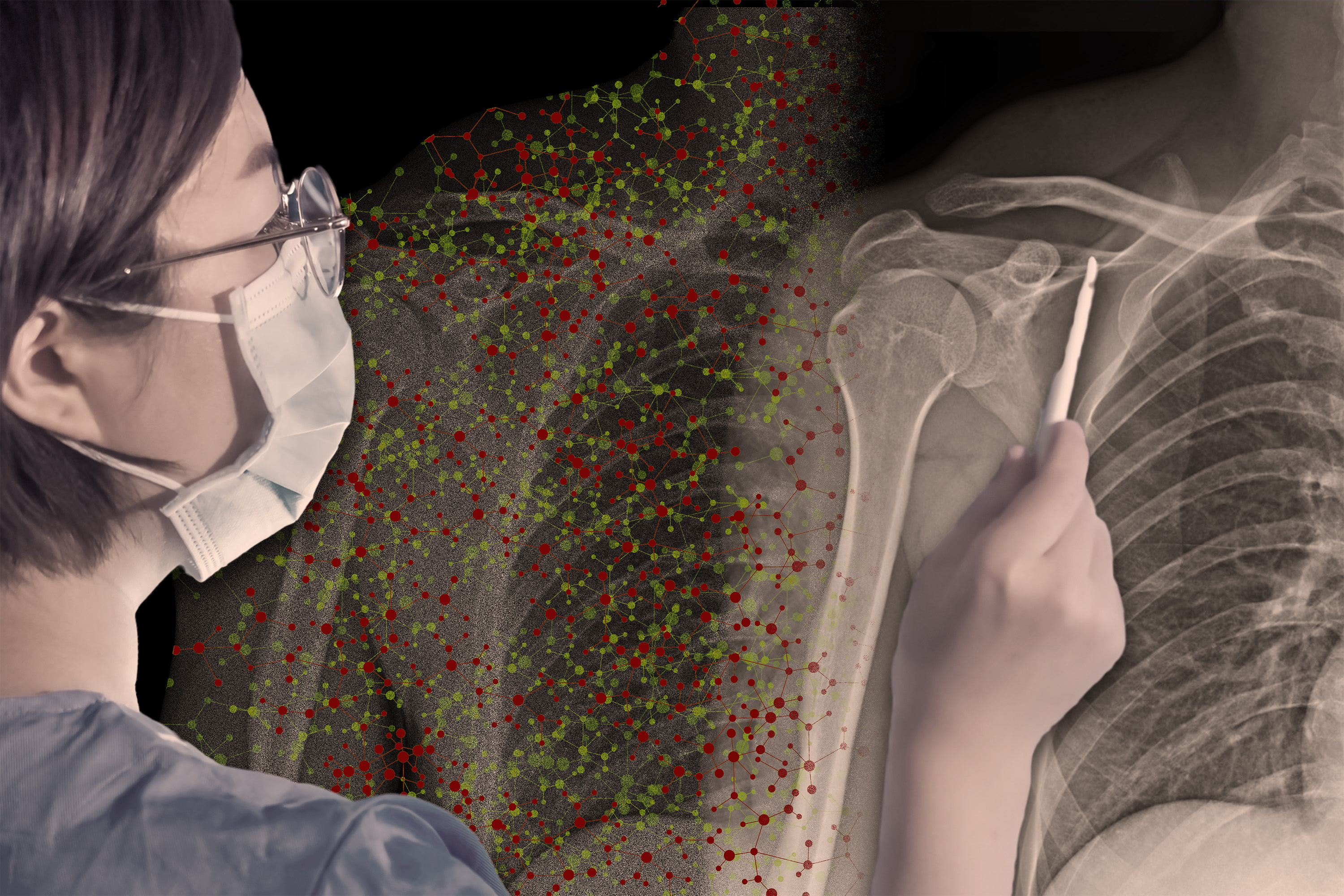

Inhalable sensors could enable early lung cancer detection

Using a new technology developed at MIT, diagnosing lung cancer could become as easy as inhaling nanoparticle sensors and then taking a urine test that reveals whether a tumor is present.

The new diagnostic is based on nanosensors that can be delivered by an inhaler or a nebulizer. If the sensors encounter cancer-linked proteins in the lungs, they produce a signal that accumulates in the urine, where it can be detected with a simple paper test strip.

This approach could potentially replace or supplement the current gold standard for diagnosing lung cancer, low-dose computed tomography (CT). It could have an especially significant impact in low- and middle-income countries that don’t have widespread availability of CT scanners, the researchers say.

“Around the world, cancer is going to become more and more prevalent in low- and middle-income countries. The epidemiology of lung cancer globally is that it’s driven by pollution and smoking, so we know that those are settings where accessibility to this kind of technology could have a big impact,” says Sangeeta Bhatia, the John and Dorothy Wilson Professor of Health Sciences and Technology and of Electrical Engineering and Computer Science at MIT, and a member of MIT’s Koch Institute for Integrative Cancer Research and the Institute for Medical Engineering and Science.

Bhatia is the senior author of the paper, which appears today in Science Advances. Qian Zhong, an MIT research scientist, and Edward Tan, a former MIT postdoc, are the lead authors of the study.

Inhalable particles

To help diagnose lung cancer as early as possible, the U.S. Preventive Services Task Force recommends that heavy smokers over the age of 50 undergo annual CT scans. However, not everyone in this target group receives these scans, and the high false-positive rate of the scans can lead to unnecessary, invasive tests.

Bhatia has spent the last decade developing nanosensors for use in diagnosing cancer and other diseases, and in this study, she and her colleagues explored the possibility of using them as a more accessible alternative to CT screening for lung cancer.

These sensors consist of polymer nanoparticles coated with a reporter, such as a DNA barcode, that is cleaved from the particle when the sensor encounters enzymes called proteases, which are often overactive in tumors. Those reporters eventually accumulate in the urine and are excreted from the body.

Previous versions of the sensors, which targeted other cancer sites such as the liver and ovaries, were designed to be given intravenously. For lung cancer diagnosis, the researchers wanted to create a version that could be inhaled, which could make it easier to deploy in lower resource settings.

“When we developed this technology, our goal was to provide a method that can detect cancer with high specificity and sensitivity, and also lower the threshold for accessibility, so that hopefully we can improve the resource disparity and inequity in early detection of lung cancer,” Zhong says.

To achieve that, the researchers created two formulations of their particles: a solution that can be aerosolized and delivered with a nebulizer, and a dry powder that can be delivered using an inhaler.

Once the particles reach the lungs, they are absorbed into the tissue, where they encounter any proteases that may be present. Human cells can express hundreds of different proteases, and some of them are overactive in tumors, where they help cancer cells to escape their original locations by cutting through proteins of the extracellular matrix. These cancerous proteases cleave DNA barcodes from the sensors, allowing the barcodes to circulate in the bloodstream until they are excreted in the urine.

In the earlier versions of this technology, the researchers used mass spectrometry to analyze the urine sample and detect DNA barcodes. However, mass spectrometry requires equipment that might not be available in low-resource areas, so for this version, the researchers created a lateral flow assay, which allows the barcodes to be detected using a paper test strip.

The researchers designed the strip to detect up to four different DNA barcodes, each of which indicates the presence of a different protease. No pre-treatment or processing of the urine sample is required, and the results can be read about 20 minutes after the sample is obtained.

“We were really pushing this assay to be point-of-care available in a low-resource setting, so the idea was to not do any sample processing, not do any amplification, just to be able to put the sample right on the paper and read it out in 20 minutes,” Bhatia says.

Accurate diagnosis

The researchers tested their diagnostic system in mice that are genetically engineered to develop lung tumors similar to those seen in humans. The sensors were administered 7.5 weeks after the tumors started to form, a time point that would likely correlate with stage 1 or 2 cancer in humans.

In their first set of experiments in the mice, the researchers measured the levels of 20 different sensors designed to detect different proteases. Using a machine learning algorithm to analyze those results, the researchers identified a combination of just four sensors that was predicted to give accurate diagnostic results. They then tested that combination in the mouse model and found that it could accurately detect early-stage lung tumors.
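The article does not say which machine learning algorithm the researchers used. As a rough illustration of the general idea of narrowing 20 sensors down to four, the sketch below ranks sensors by how well each one separates tumor-bearing from healthy samples. The scoring rule, the function name `rank_sensors`, and all of the data are assumptions made up for this example; they are synthetic values, not measurements from the study.

```python
import random
import statistics

def rank_sensors(tumor, healthy, k=4):
    """Return the indices of the k sensors that best separate the two groups.

    Each sensor is scored by the gap between group means divided by the
    average within-group spread. This is a simple stand-in for feature
    selection, not the method used in the study.
    """
    n_sensors = len(tumor[0])
    scores = []
    for j in range(n_sensors):
        t = [row[j] for row in tumor]
        h = [row[j] for row in healthy]
        spread = (statistics.stdev(t) + statistics.stdev(h)) / 2
        gap = abs(statistics.mean(t) - statistics.mean(h))
        scores.append((gap / spread, j))
    best = sorted(scores, reverse=True)[:k]
    return sorted(j for _, j in best)

# Synthetic data (hypothetical): 20 sensors, of which sensors 0-3 respond to tumors.
rng = random.Random(0)
N_SENSORS = 20
INFORMATIVE = {0, 1, 2, 3}

def simulate(has_tumor):
    return [
        rng.gauss(5.0 if (has_tumor and j in INFORMATIVE) else 0.0, 1.0)
        for j in range(N_SENSORS)
    ]

tumor = [simulate(True) for _ in range(20)]
healthy = [simulate(False) for _ in range(20)]
selected = rank_sensors(tumor, healthy)
print(selected)
```

On this toy data the four responsive sensors stand out clearly. Real sensor readouts would be noisier and correlated, which is where a more careful model earns its keep.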

For use in humans, it’s possible that more sensors might be needed to make an accurate diagnosis, but that could be achieved by using multiple paper strips, each of which detects four different DNA barcodes, the researchers say.
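The arithmetic behind that suggestion is simple: with four barcodes per strip, the number of strips is the sensor count divided by four, rounded up. A minimal sketch, where the function name `strips_needed` is only illustrative:

```python
import math

BARCODES_PER_STRIP = 4  # per the article: each strip detects up to four barcodes

def strips_needed(n_sensors: int) -> int:
    """Number of paper strips required to read out n_sensors barcodes."""
    if n_sensors < 0:
        raise ValueError("n_sensors must be non-negative")
    return math.ceil(n_sensors / BARCODES_PER_STRIP)

# Reading out all 20 sensors from the first mouse experiments would take 5 strips.
print(strips_needed(20))
```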

The researchers now plan to analyze human biopsy samples to see if the sensor panels they are using would also work to detect human cancers. In the longer term, they hope to perform clinical trials in human patients. A company called Sunbird Bio has already run phase 1 trials on a similar sensor developed by Bhatia’s lab, for use in diagnosing liver cancer and a form of hepatitis known as nonalcoholic steatohepatitis (NASH).

In parts of the world where there is limited access to CT scanning, this technology could offer a dramatic improvement in lung cancer screening, especially since the results can be obtained during a single visit.

“The idea would be you come in and then you get an answer about whether you need a follow-up test or not, and we could get patients who have early lesions into the system so that they could get curative surgery or lifesaving medicines,” Bhatia says.

The research was funded by the Johnson & Johnson Lung Cancer Initiative, the Howard Hughes Medical Institute, the Koch Institute Support (core) Grant from the National Cancer Institute, and the National Institute of Environmental Health Sciences.

Additional related work was supported by the Marble Center for Cancer Nanomedicine and the Koch Institute Frontier Research Program via Upstage Lung Cancer.

© Credit: Courtesy of the researchers

A Soap Bubble Becomes a Laser

Author(s): Katherine Wright

Using a soap bubble, researchers have created a laser that could act as a sensitive sensor for environmental parameters including atmospheric pressure.

[Physics 17, 3] Published Fri Jan 05, 2024

20 of the best places to view the 2024 total solar eclipse

The total eclipse set to take place April 8, 2024, will dazzle everyone who views it. Here are some of the best places to see the 2024 eclipse.

The post 20 of the best places to view the 2024 total solar eclipse appeared first on Astronomy Magazine.

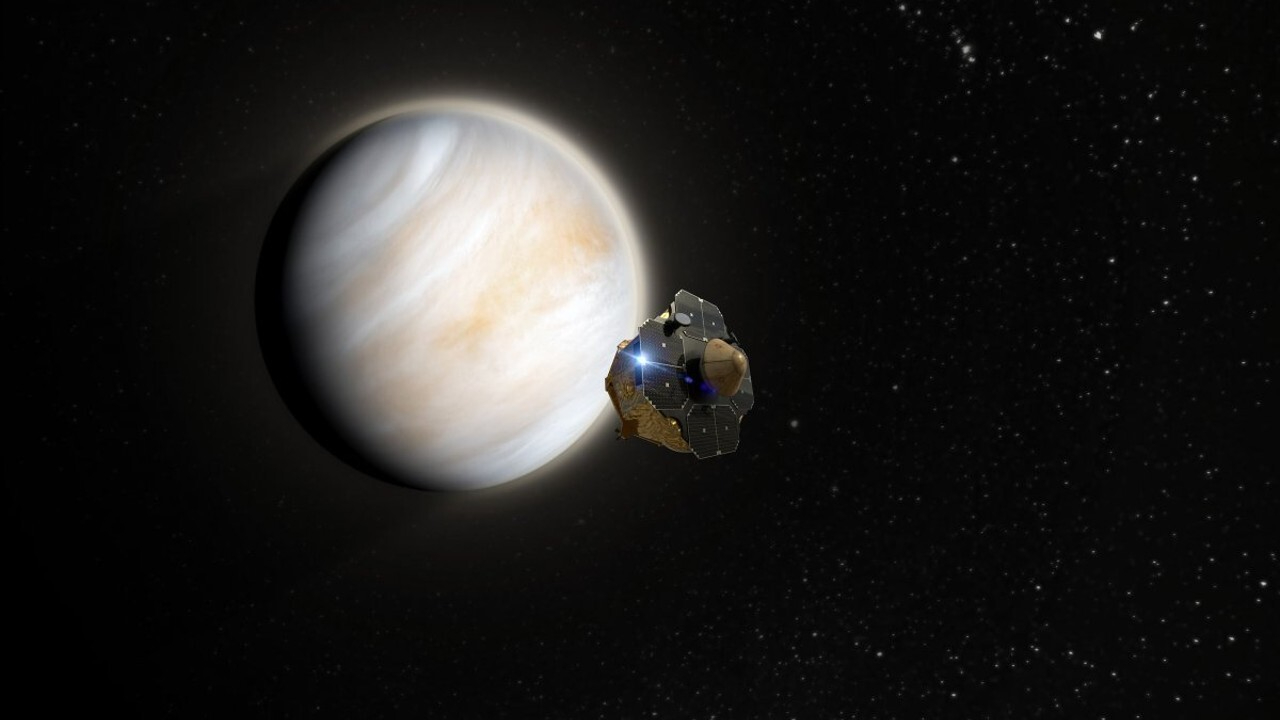

Alien life could thrive in Venus' acidic clouds, new study hints

© Rocket Lab

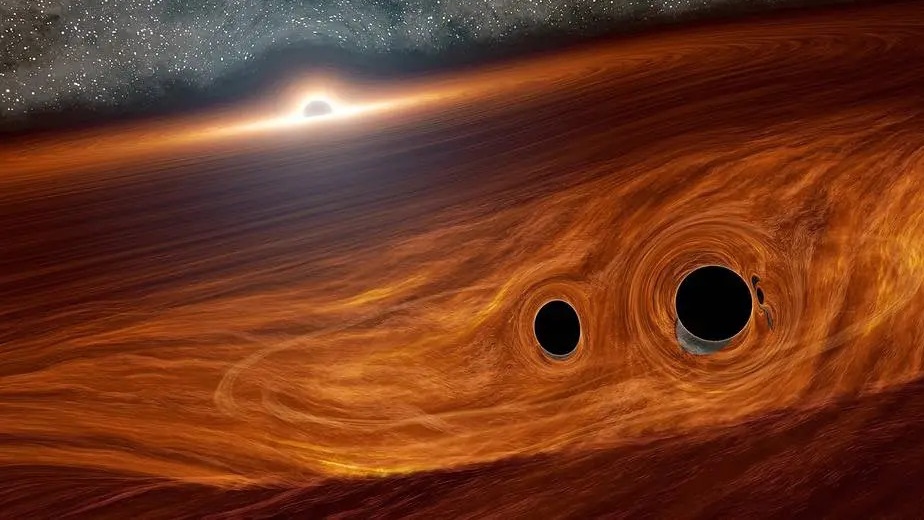

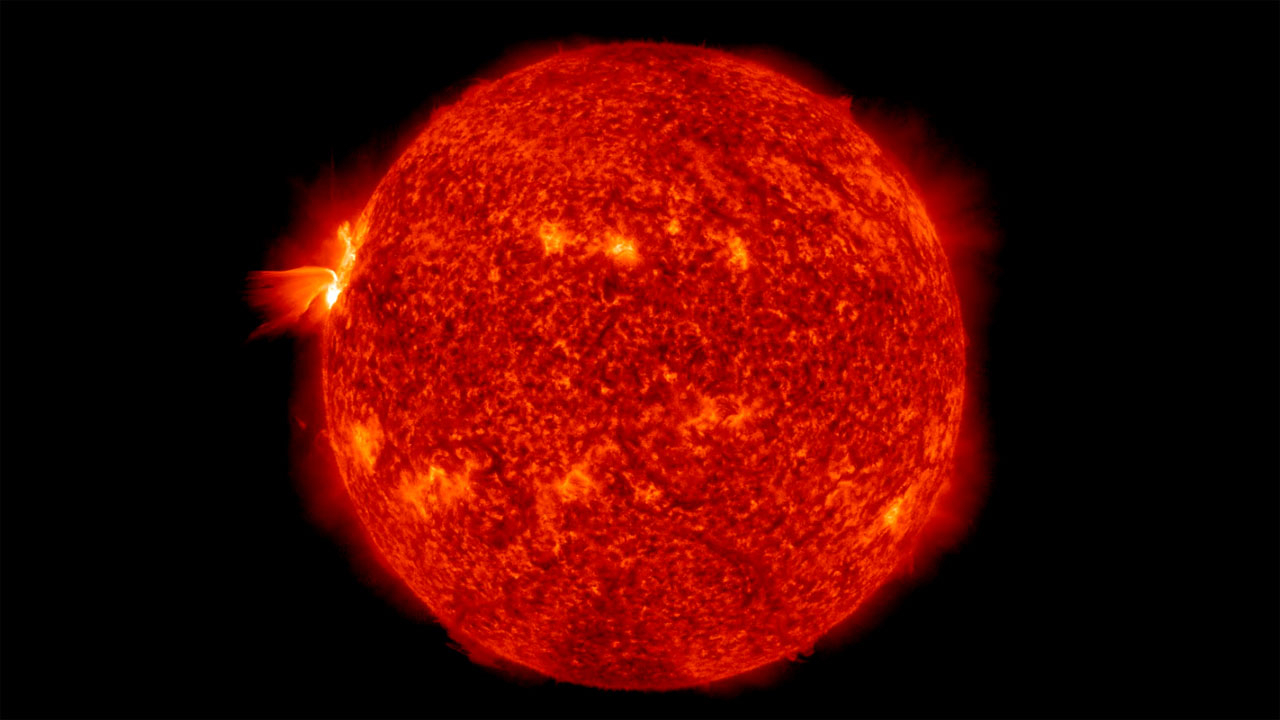

Is a black hole stuck inside the sun? No, but here's why scientists are asking

© NASA/Caltech/R. Hurt (IPAC)

NASA to unveil new X-59 'quiet' supersonic jet on Jan. 12

© Lockheed Martin

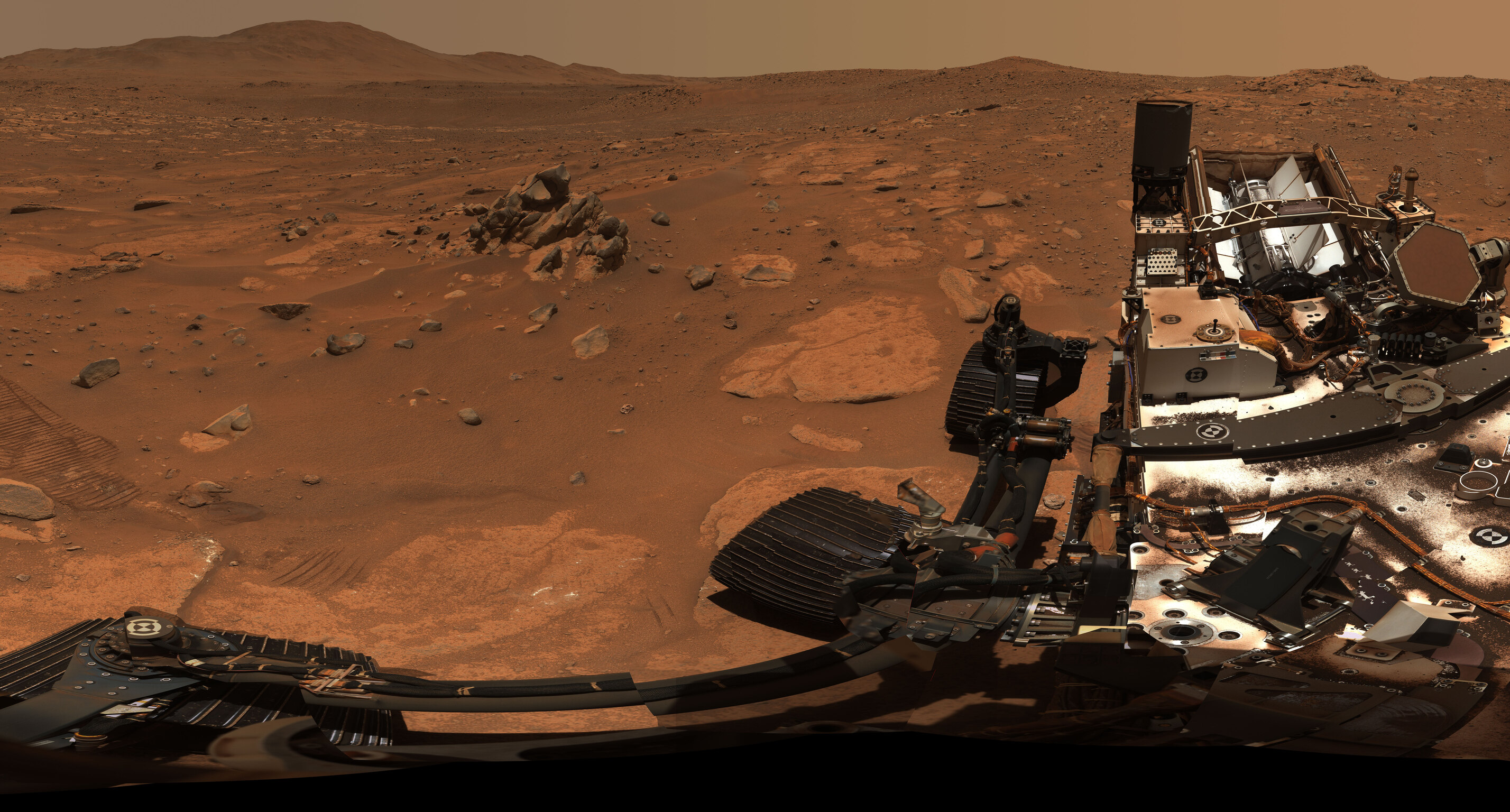

NASA's Perseverance rover captures 360-degree view of Mars' Jezero Crater (video)

© NASA/JPL-Caltech/ASU/MSSS

Improving patient safety using principles of aerospace engineering

Approximately 13 billion laboratory tests are administered every year in the United States, but not every result is timely or accurate. Laboratory missteps prevent patients from receiving appropriate, necessary, and sometimes lifesaving care. These medical errors are the third-leading cause of death in the nation.

To help reverse this trend, a research team from the MIT Department of Aeronautics and Astronautics (AeroAstro) Engineering Systems Lab and Synensys, a safety management contractor, examined the ecosystem of diagnostic laboratory data. Their findings, including six systemic factors contributing to patient hazards in laboratory diagnostics tests, offer a rare holistic view of this complex network — not just doctors and lab technicians, but also device manufacturers, health information technology (HIT) providers, and even government entities such as the White House. By viewing the diagnostic laboratory data ecosystem as an integrated system, an approach based on systems theory, the MIT researchers have identified specific changes that can lead to safer behaviors for health care workers and healthier outcomes for patients.

A report of the study, which was conducted by AeroAstro Professor Nancy Leveson, who serves as head of the System Safety and Cybersecurity group, along with Research Engineer John Thomas and graduate students Polly Harrington and Rodrigo Rose, was submitted to the U.S. Food and Drug Administration this past fall. Improving the infrastructure of laboratory data has been a priority for the FDA, which contracted the study through Synensys.

Hundreds of hazards, six causes

In a yearlong study that included more than 50 interviews, the Leveson team found the diagnostic laboratory data ecosystem to be vast yet fractured. No one understood how the whole system functioned or the totality of substandard treatment patients received. Well-intentioned workers were being influenced by the system to carry out unsafe actions, MIT engineers wrote.

Test results sent to the wrong patients, incompatible technologies that strain information sharing between the doctor and lab technician, and specimens transported to the lab without guarantees of temperature control were just some of the hundreds of hazards the MIT engineers identified. The sheer volume of potential risks, known as unsafe control actions (UCAs), should not dissuade health care stakeholders from seeking change, Harrington says.

“While there are hundreds of UCAs, there are only six systemic factors that are causing these hazards,” she adds. “Using a system-based methodology, the medical community can address many of these issues with one swoop.”

Four of the systemic factors — decentralization, flawed communication and coordination, insufficient focus on safety-related regulations, and ambiguous or outdated standards — reflect the need for greater oversight and accountability. The two remaining systemic factors — misperceived notions of risk and lack of systems theory integration — call for a fundamental shift in perspective and operations. For instance, the medical community, including doctors themselves, tends to blame physicians when errors occur. Understanding the real risk levels associated with laboratory data and HIT might prompt more action for change, the report’s authors wrote.

“There’s this expectation that doctors will catch every error,” Harrington says. “It’s unreasonable and unfair to expect that, especially when they have no reason to assume the data they're getting is flawed.”

Think like an engineer

Systems theory may be a new concept to the medical community, but the aviation industry has used it for decades.

“After World War II, there were so many commercial aviation crashes that the public was scared to fly,” says Leveson, a leading expert in system and software safety. In the early 2000s, she developed the System-Theoretic Process Analysis (STPA), a technique based on systems theory that offers insights into how complex systems can become safer. The researchers used STPA in their report to the FDA. “Industry and government worked together to put controls and error reporting in place. Today, there are nearly zero crashes in the U.S. What’s happening in health care right now is like having a Boeing 787 crash every day.”

Other engineering principles that work well in aviation, such as control systems, could be applied to health care as well, Thomas says. For instance, closed-loop controls solicit feedback so a system can change and adapt. Having laboratories confirm that physicians received their patients’ test results or investigating all reports of diagnostic errors are examples of closed-loop controls that are not mandated in the current ecosystem, Thomas says.

“Operating without controls is like asking a robot to navigate a city street blindfolded,” Thomas says. “There’s no opportunity for course correction. Closed-loop controls help inform future decision-making, and, at this point in time, it’s missing in the U.S. health-care system.”

The Leveson team will continue working with Synensys on behalf of the FDA. Their next study will investigate diagnostic screenings outside the laboratory, such as at a physician’s office (point of care) or at home (over the counter). Since the start of the Covid-19 pandemic, nonclinical lab testing has surged in the country. About 600 million Covid-19 tests were sent to U.S. households between January and September 2022, according to Synensys. Yet, few systems are in place to aggregate these data or report findings to public health agencies.

“There’s a lot of well-meaning people trying to solve this and other lab data challenges,” Rose says. “If we can convince people to think of health care as an engineered system, we can go a long way in solving some of these entrenched problems.”

The Synensys research contract is part of the Systemic Harmonization and Interoperability Enhancement for Laboratory Data (SHIELD) campaign, an agency initiative that seeks assistance and input in using systems theory to address this challenge.

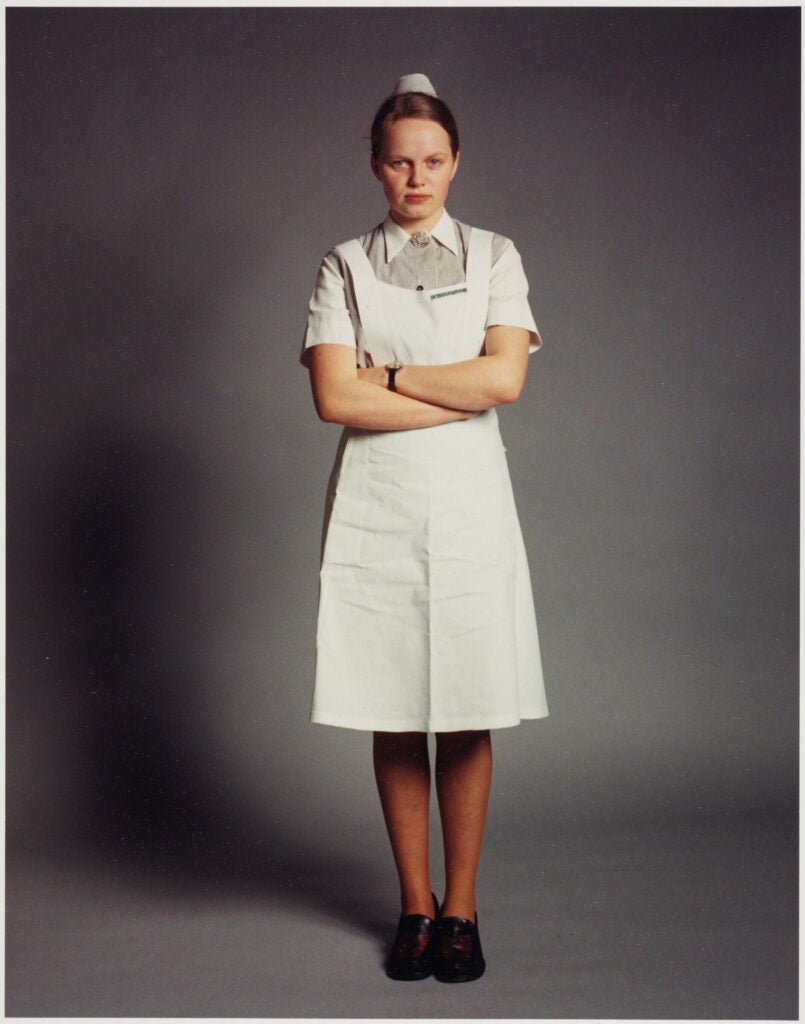

© Photo courtesy of the National Cancer Institute.

'Cooling glass' could fight climate change by reflecting solar radiation back into space

© NASA

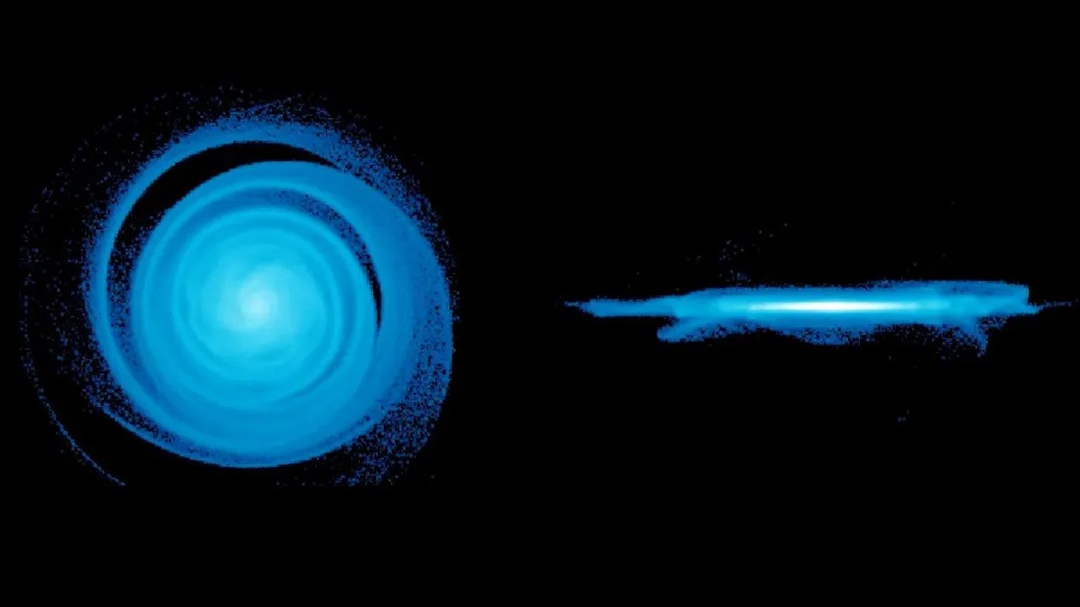

Ripples in the oldest known spiral galaxy may shed light on the origins of our Milky Way

© Illustration: Jonathan Bland-Hawthorn and Thorsten Tepper-Garcia/University of Sydney

James Webb Space Telescope could look for 'carbon-lite' exoplanet atmospheres in search for alien life

© Christine Daniloff, MIT; iStock

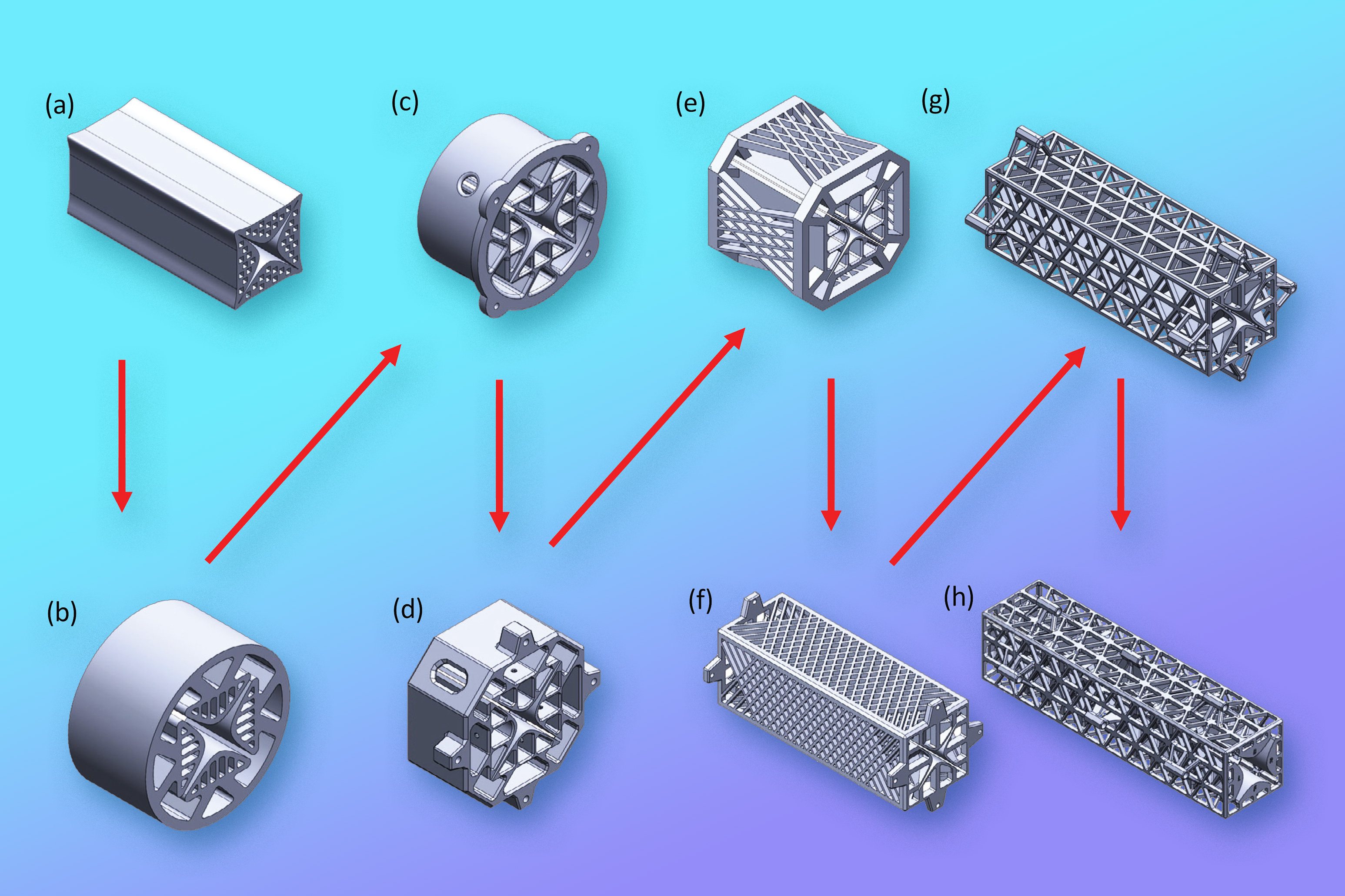

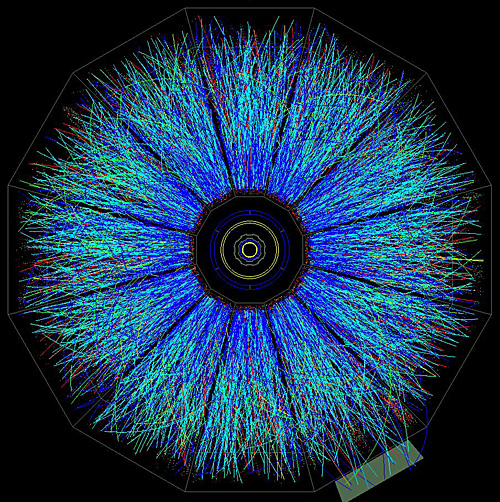

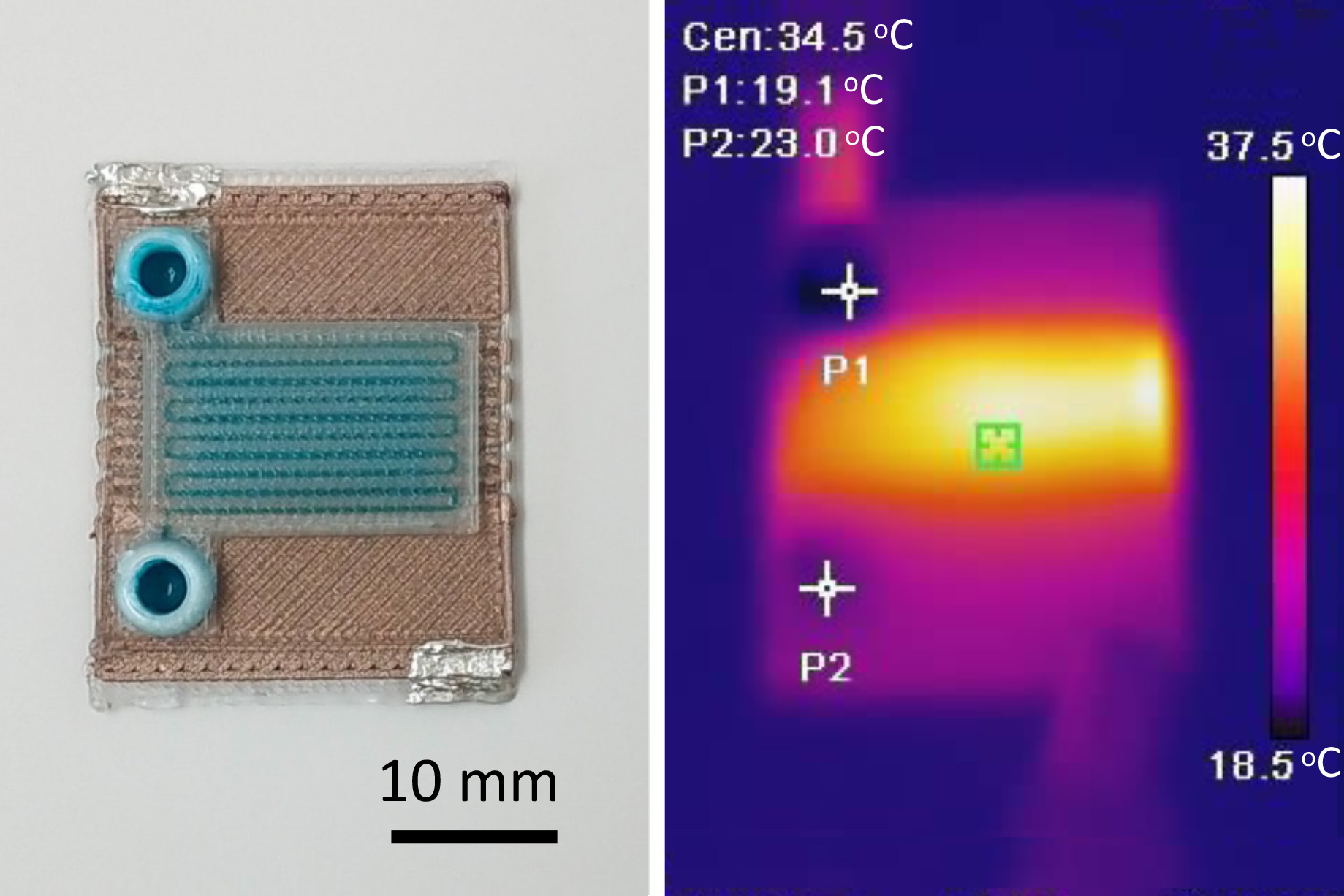

Researchers 3D print components for a portable mass spectrometer

Mass spectrometers, devices that identify chemical substances, are widely used in applications like crime scene analysis, toxicology testing, and geological surveying. But these machines are bulky, expensive, and easy to damage, which limits where they can be effectively deployed.

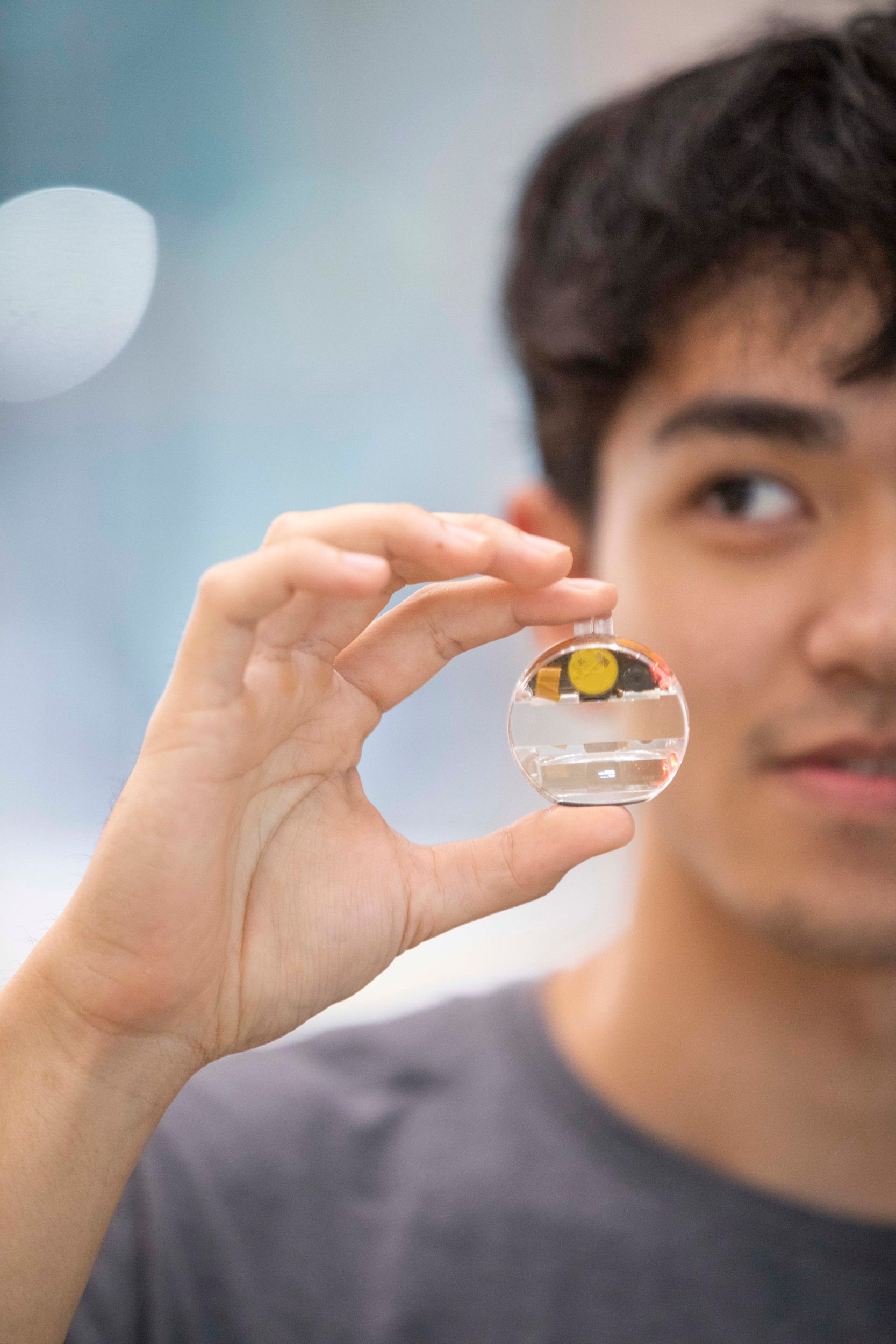

Using additive manufacturing, MIT researchers produced a mass filter, which is the core component of a mass spectrometer, that is far lighter and cheaper than the same type of filter made with traditional techniques and materials.

Their miniaturized filter, known as a quadrupole, can be completely fabricated in a matter of hours for a few dollars. The 3D-printed device is as precise as some commercial-grade mass filters that can cost more than $100,000 and take weeks to manufacture.

Built from durable and heat-resistant glass-ceramic resin, the filter is 3D printed in one step, so no assembly is required. Assembly often introduces defects that can hamper the performance of quadrupoles.

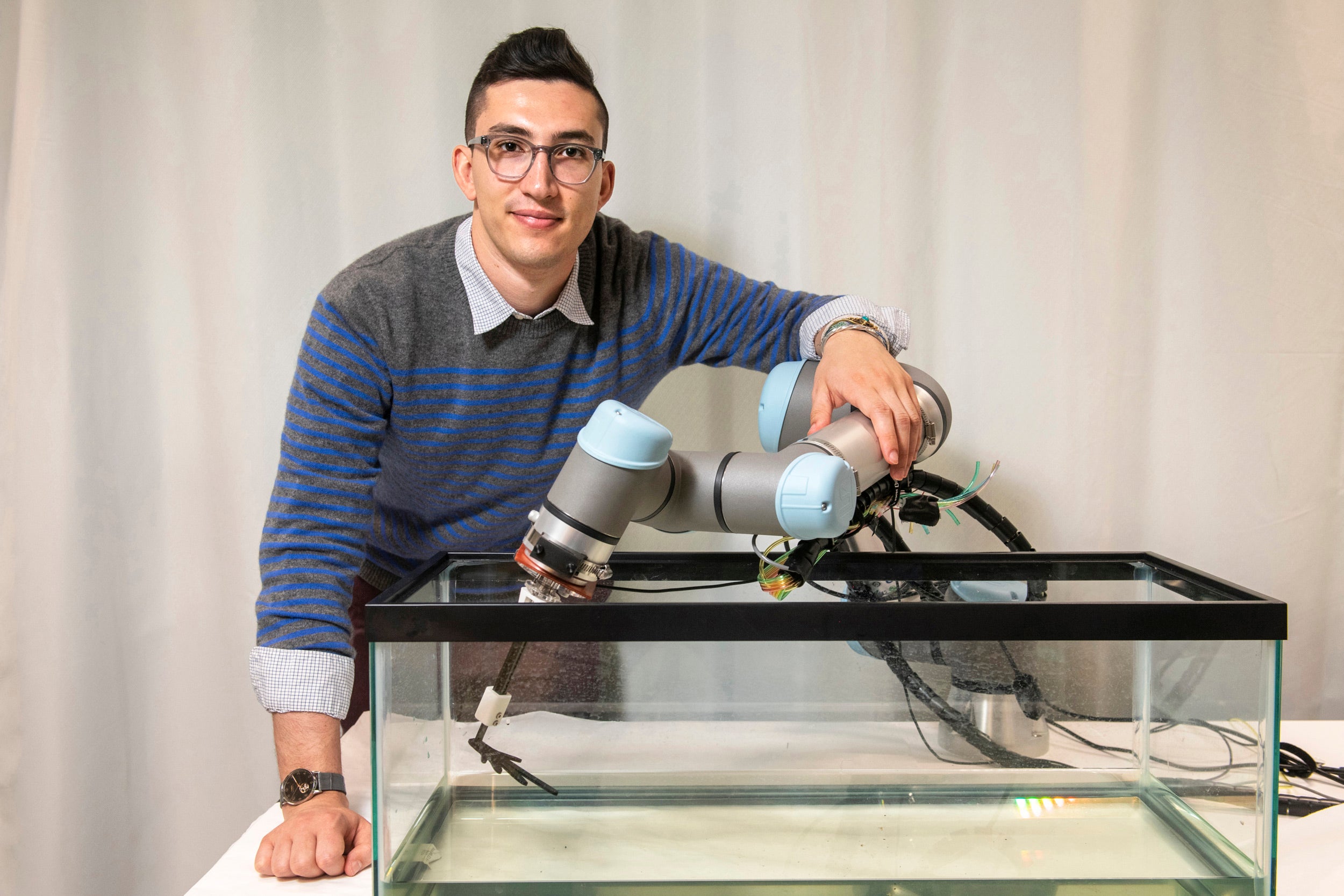

This lightweight, cheap, yet precise quadrupole is one important step in Luis Fernando Velásquez-García’s 20-year quest to produce a 3D-printed, portable mass spectrometer.

“We are not the first ones to try to do this. But we are the first ones who succeeded at doing this. There are other miniaturized quadrupole filters, but they are not comparable with professional-grade mass filters. There are a lot of possibilities for this hardware if the size and cost could be smaller without adversely affecting the performance,” says Velásquez-García, a principal research scientist in MIT’s Microsystems Technology Laboratories (MTL) and senior author of a paper detailing the miniaturized quadrupole.

For instance, a scientist could bring a portable mass spectrometer to remote areas of the rainforest, using it to rapidly analyze potential pollutants without shipping samples back to a lab. And a lightweight device would be cheaper and easier to send into space, where it could monitor chemicals in Earth’s atmosphere or in those of distant planets.

Velásquez-García is joined on the paper by lead author Colin Eckhoff, an MIT graduate student in electrical engineering and computer science (EECS); Nicholas Lubinsky, a former MIT postdoc; and Luke Metzler and Randall Pedder of Ardara Technologies. The research is published in Advanced Science.

Size matters

At the heart of a mass spectrometer is the mass filter. This component uses electric or magnetic fields to sort charged particles based on their mass-to-charge ratio. In this way, the device can measure the chemical components in a sample to identify an unknown substance.

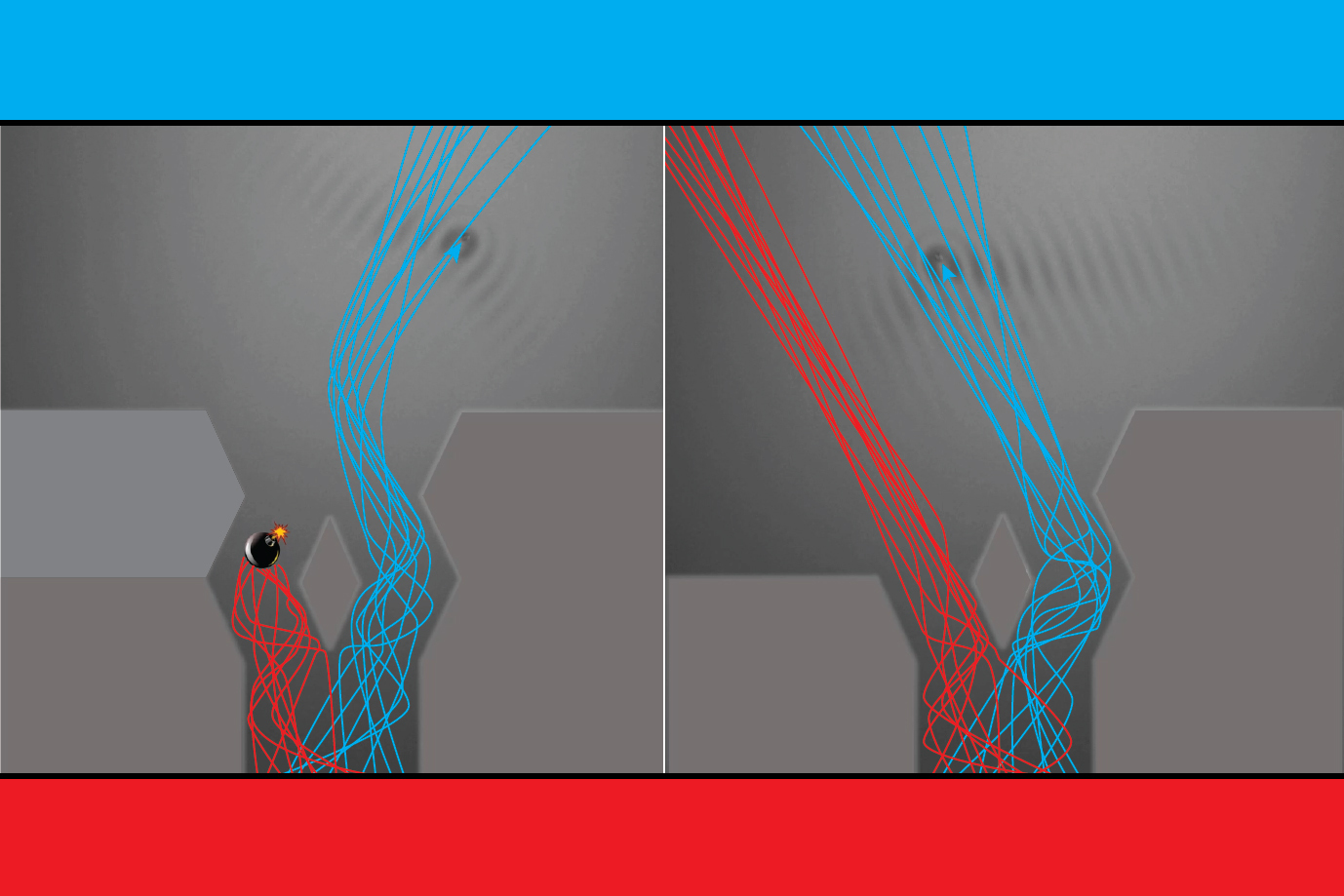

A quadrupole, a common type of mass filter, is composed of four metallic rods surrounding an axis. Voltages are applied to the rods, which produce an electromagnetic field. Depending on the properties of the electromagnetic field, ions with a specific mass-to-charge ratio will swirl around through the middle of the filter, while other particles escape out the sides. By varying the mix of voltages, one can target ions with different mass-to-charge ratios.
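How the "mix of voltages" selects a mass-to-charge ratio can be made concrete with the standard Mathieu parameters of an ideal quadrupole. The sketch below assumes the textbook convention in which the rod pairs sit at ±(U − V cos Ωt), giving a = 8eU/(m r0² Ω²) and q = 4eV/(m r0² Ω²). The field radius and drive frequency used here are hypothetical illustration values, not the dimensions of the MIT device.

```python
import math

E_CHARGE = 1.602176634e-19   # elementary charge, C
AMU = 1.66053906660e-27      # atomic mass unit, kg

def mathieu_a_q(mz, dc_volts, rf_volts, r0_m, freq_hz):
    """Mathieu parameters (a, q) for an ion of mass-to-charge ratio mz (Da per charge)."""
    omega = 2 * math.pi * freq_hz
    k = E_CHARGE / (mz * AMU * r0_m**2 * omega**2)
    return 8 * dc_volts * k, 4 * rf_volts * k

def voltages_for_mz(mz, r0_m, freq_hz, a=0.237, q=0.706):
    """DC and RF amplitudes that place mz at a chosen (a, q) point.

    The defaults sit near the tip of the first stability region, where only
    a narrow band of mass-to-charge ratios has stable trajectories.
    """
    omega = 2 * math.pi * freq_hz
    scale = mz * AMU * r0_m**2 * omega**2 / E_CHARGE
    return a * scale / 8, q * scale / 4

# Hypothetical geometry and drive: 2 mm field radius, 2 MHz RF.
R0, FREQ = 0.002, 2.0e6
dc, rf = voltages_for_mz(100, R0, FREQ)
print(f"m/z 100: DC {dc:.1f} V, RF {rf:.1f} V")
```

Because both voltages scale linearly with mass-to-charge ratio, ramping them together at a fixed ratio sweeps the filter across the mass range.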

While fairly simple in design, a typical stainless-steel quadrupole might weigh several kilograms. But miniaturizing a quadrupole is no easy task. Making the filter smaller usually introduces errors during the manufacturing process. Plus, smaller filters collect fewer ions, which makes chemical analysis less sensitive.

“You can’t make quadrupoles arbitrarily smaller — there is a tradeoff,” Velásquez-García adds.

His team balanced this tradeoff by leveraging additive manufacturing to make miniaturized quadrupoles with the ideal size and shape to maximize precision and sensitivity.

They fabricate the filter from a glass-ceramic resin, which is a relatively new printable material that can withstand temperatures up to 900 degrees Celsius and performs well in a vacuum.

The device is produced using vat photopolymerization, a process where a piston pushes into a vat of liquid resin until it nearly touches an array of LEDs at the bottom. These illuminate, curing the resin that remains in the minuscule gap between the piston and the LEDs. A tiny layer of cured polymer is then stuck to the piston, which rises up and repeats the cycle, building the device one tiny layer at a time.

“This is a relatively new technology for printing ceramics that allows you to make very precise 3D objects. And one key advantage of additive manufacturing is that you can aggressively iterate the designs,” Velásquez-García says.

Since the 3D printer can form practically any shape, the researchers designed a quadrupole with hyperbolic rods. This shape is ideal for mass filtering but difficult to make with conventional methods. Many commercial filters employ rounded rods instead, which can reduce performance.

They also printed an intricate network of triangular lattices surrounding the rods, which provides durability while ensuring the rods remain positioned correctly if the device is moved or shaken.

To finish the quadrupole, the researchers used a technique called electroless plating to coat the rods with a thin metal film, which makes them electrically conductive. They cover everything but the rods with a masking chemical and then submerge the quadrupole in a chemical bath heated to a precise temperature and stirring conditions. This deposits a thin metal film on the rods uniformly without damaging the rest of the device or shorting the rods.

“In the end, we made quadrupoles that were the most compact but also the most precise that could be made, given the constraints of our 3D printer,” Velásquez-García says.

Maximizing performance

To test their 3D-printed quadrupoles, the team swapped them into a commercial system and found that they could attain higher resolutions than other types of miniature filters. Their quadrupoles, which are about 12 centimeters in length, are one-quarter the density of comparable stainless-steel filters.

In addition, further experiments suggest that their 3D-printed quadrupoles could achieve precision that is on par with that of large-scale commercial filters.

“Mass spectrometry is one of the most important of all scientific tools, and Velásquez-García and co-workers describe the design, construction, and performance of a quadrupole mass filter that has several advantages over earlier devices,” says Graham Cooks, the Henry Bohn Hass Distinguished Professor of Chemistry in the Aston Laboratories for Mass Spectrometry at Purdue University, who was not involved with this work. “The advantages derive from these facts: It is much smaller and lighter than most commercial counterparts and it is fabricated monolithically, using additive construction. … It is an open question as to how well the performance will compare with that of quadrupole ion traps, which depend on the same electric fields for mass measurement but which do not have the stringent geometrical requirements of quadrupole mass filters.”

“This paper represents a real advance in the manufacture of quadrupole mass filters (QMF). The authors bring together their knowledge of manufacture using advanced materials, QMF drive electronics, and mass spectrometry to produce a novel system with good performance at low cost,” adds Steve Taylor, professor of electrical engineering and electronics at the University of Liverpool, who was also not involved with this paper. “Since QMFs are at the heart of the ‘analytical engine’ in many other types of mass spectrometry systems, the paper has an important significance across the whole mass spectrometry field, which worldwide represents a multibillion-dollar industry.”

In the future, the researchers plan to boost the quadrupole’s performance by making the filters longer. A longer filter can enable more precise measurements since more ions that are supposed to be filtered out will escape as the chemical travels along its length. They also intend to explore different ceramic materials that could better transfer heat.

“Our vision is to make a mass spectrometer where all the key components can be 3D printed, contributing to a device with much less weight and cost without sacrificing performance. There is still a lot of work to do, but this is a great start,” Velásquez-García adds.

This work was funded by Empiriko Corporation.

© Image: Courtesy of the researchers, edited by MIT News

Targeting kids generates billions in ad revenue for social media

Social media platforms Facebook, Instagram, Snapchat, TikTok, X (formerly Twitter), and YouTube collectively derived nearly $11 billion in advertising revenue from U.S.-based users younger than 18 in 2022, according to a new study led by the Harvard T.H. Chan School of Public Health. The study is the first to offer estimates of the number of youth users on these platforms and how much annual ad revenue is attributable to them.

The study was published Dec. 27 in PLOS ONE.

“As concerns about youth mental health grow, more and more policymakers are trying to introduce legislation to curtail social media platform practices that may drive depression, anxiety, and disordered eating in young people,” said senior author Bryn Austin, professor in the Department of Social and Behavioral Sciences. “Although social media platforms may claim that they can self-regulate their practices to reduce the harms to young people, they have yet to do so, and our study suggests they have overwhelming financial incentives to continue to delay taking meaningful steps to protect children.”

The researchers used a variety of public survey and market research data from 2021 and 2022 to comprehensively estimate Facebook, Instagram, Snapchat, TikTok, X, and YouTube’s number of youth users and related ad revenue. Population data from the U.S. Census and survey data from Common Sense Media and Pew Research were used to estimate the number of people younger than 18 using these platforms in the U.S. Data from eMarketer, a market research company, and Qustodio, a parental control app, provided estimations of each platform’s projected gross ad revenue in 2022 and users’ average minutes per day on each platform. The researchers used these estimations to build a simulation model that estimated how much ad revenue the platforms earned from young U.S. users.
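The article does not spell out the simulation model. One plausible way to combine these inputs, shown here purely as an assumption, is to attribute a platform's ad revenue to age groups in proportion to the total time each group spends on it. The function name and every number in the example are placeholders, not figures from the study.

```python
def youth_ad_revenue(total_revenue, youth_users, youth_minutes,
                     adult_users, adult_minutes):
    """Attribute ad revenue to youth in proportion to their share of user-minutes.

    Assumption for illustration only: revenue scales with time spent on the
    platform, regardless of the user's age.
    """
    youth_time = youth_users * youth_minutes
    adult_time = adult_users * adult_minutes
    share = youth_time / (youth_time + adult_time)
    return share * total_revenue

# Placeholder inputs: $1,000M total revenue, 10M youth users at 60 min/day,
# 40M adult users at 30 min/day. Youth hold one-third of the user-minutes.
print(round(youth_ad_revenue(1000.0, 10e6, 60, 40e6, 30), 1))
```

A fuller model would presumably also propagate the uncertainty in each survey and market estimate, which is the usual reason for running a simulation rather than a single calculation.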

The study found that in 2022, YouTube had 49.7 million U.S.-based users under age 18; TikTok, 18.9 million; Snapchat, 18 million; Instagram, 16.7 million; Facebook, 9.9 million; and X, 7 million. The platforms collectively generated nearly $11 billion in ad revenue from these users: $2.1 billion from users ages 12 and under and $8.6 billion from users ages 13-17.

YouTube derived the greatest ad revenue from users 12 and under ($959.1 million), followed by Instagram ($801.1 million) and Facebook ($137.2 million). Instagram derived the greatest ad revenue from users ages 13-17 ($4 billion), followed by TikTok ($2 billion) and YouTube ($1.2 billion). The researchers also calculated that Snapchat derived the greatest share of its overall 2022 ad revenue from users under 18 (41 percent), followed by TikTok (35 percent), YouTube (27 percent), and Instagram (16 percent).

The researchers noted that the study had limitations, including reliance on estimations and projections from public survey and market research sources, as social media platforms don’t disclose user age data or advertising revenue data by age group.

“Our finding that social media platforms generate substantial advertising revenue from youth highlights the need for greater data transparency as well as public health interventions and government regulations,” said lead author Amanda Raffoul, instructor in pediatrics at Harvard Medical School.

Zachary Ward, assistant professor in the Department of Health Policy and Management at Harvard Chan School, was also a co-author.

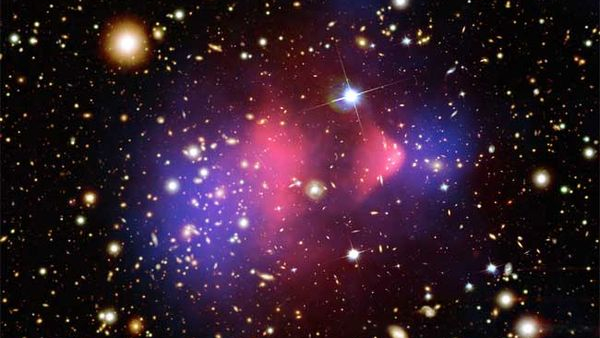

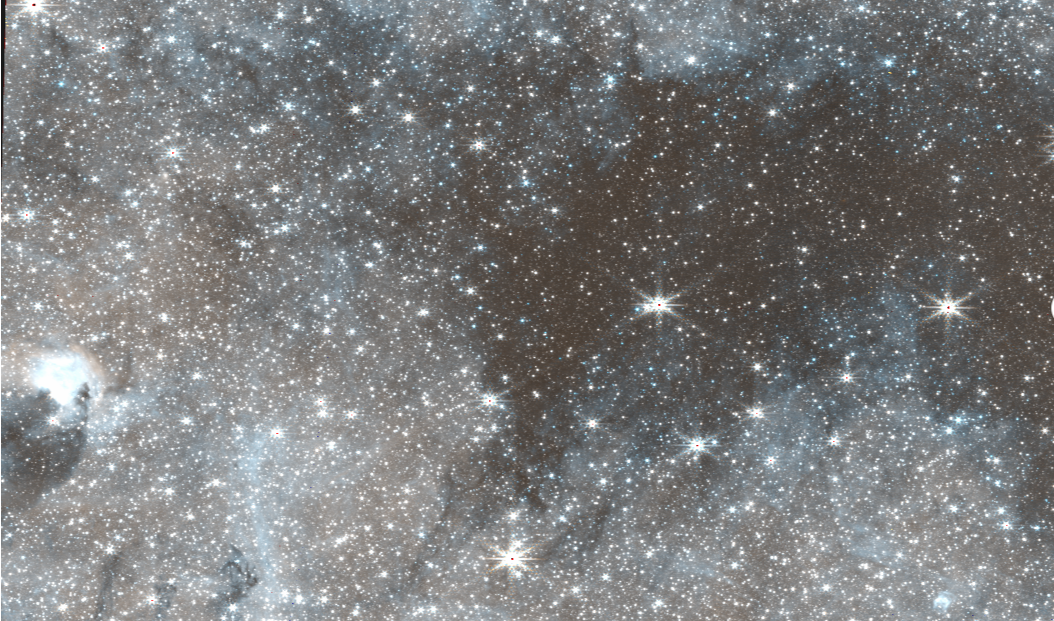

UGC 12914 and UGC 12915

A long time ago, two spiral galaxies far, far away were slowly drawing closer to each other, until, about 25 million to 30 million years before the image here was taken, they collided head-on. Found 180 million light-years away in the constellation Pegasus, both UGC 12914 and UGC 12915 managed to pull away from each other …

The post UGC 12914 and UGC 12915 appeared first on Astronomy Magazine.

NGC 520

Although this deep-sky object is cataloged as NGC 520, it’s actually a pair of interacting spiral galaxies in the constellation Pisces the Fish. German-born English astronomer William Herschel discovered it in 1784. Even a small scope will show its odd shape, which has led amateur astronomers to christen it the Flying Ghost. It measures 4.6′ …

The post NGC 520 appeared first on Astronomy Magazine.

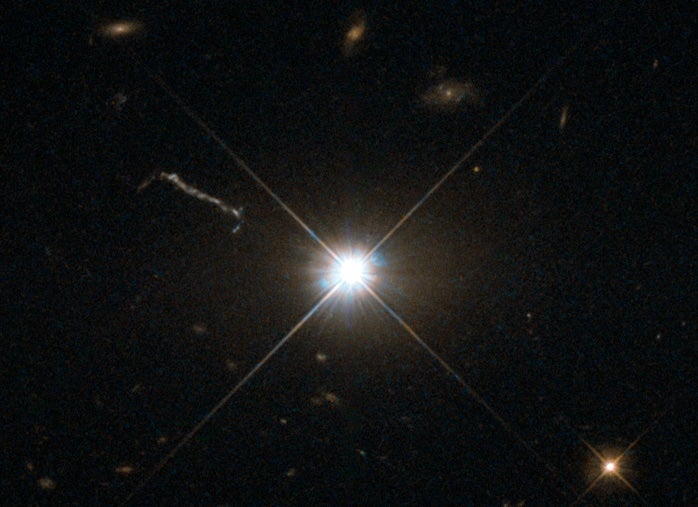

3C 273

Observing 3C 273 is a lot like observing Pluto. In both cases, you’ll only see a faint point of light, but the observations are meaningful because of what the objects are. In the case of 3C 273, you’re looking at the first quasar ever discovered, receiving photons emitted a couple of billion years ago from …

The post 3C 273 appeared first on Astronomy Magazine.

Purgathofer-Weinberger 1

In May 1980, Austrian astronomers Alois Purgathofer and Ronald Weinberger discovered a large, faint planetary nebula while searching Palomar Observatory Sky Survey prints for possible flare stars. As their first co-discovery of a planetary, it was designated Purgathofer-Weinberger 1. This is usually abbreviated PuWe 1, but also carries the catalog designation PN G158.9+17.8. This object …

The post Purgathofer-Weinberger 1 appeared first on Astronomy Magazine.

NGC 3190 galaxy group

You’ll find this grouping of galaxies 2° north-northwest of the 2nd-magnitude star Algieba (Gamma [γ] Leonis). It carries a couple of common names. One is the Gamma Leonis Group because of its nearness to Algieba. The other is Hickson 44, the brightest group in Canadian astronomer Paul Hickson’s catalog of 100 compact galaxy groups. Hickson …

The post NGC 3190 galaxy group appeared first on Astronomy Magazine.

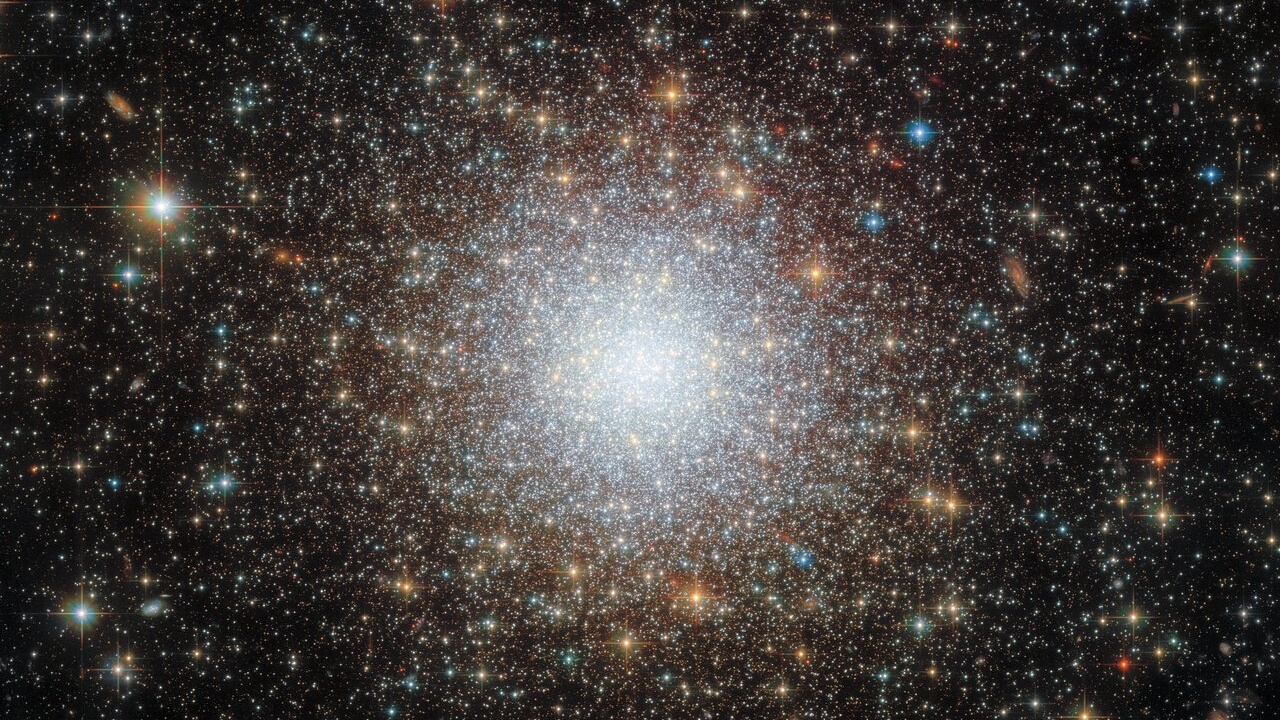

M15 and Pease 1

Globular cluster M15 in Pegasus is the “hey, let me show you this one” autumn object for amateur astronomers north of the equator. It’s also known as NGC 7078. M15 lies some 34,000 light-years from Earth and appears 18′ across. It has a true diameter of 175 light-years and contains more than 100,000 stars. Among …

The post M15 and Pease 1 appeared first on Astronomy Magazine.

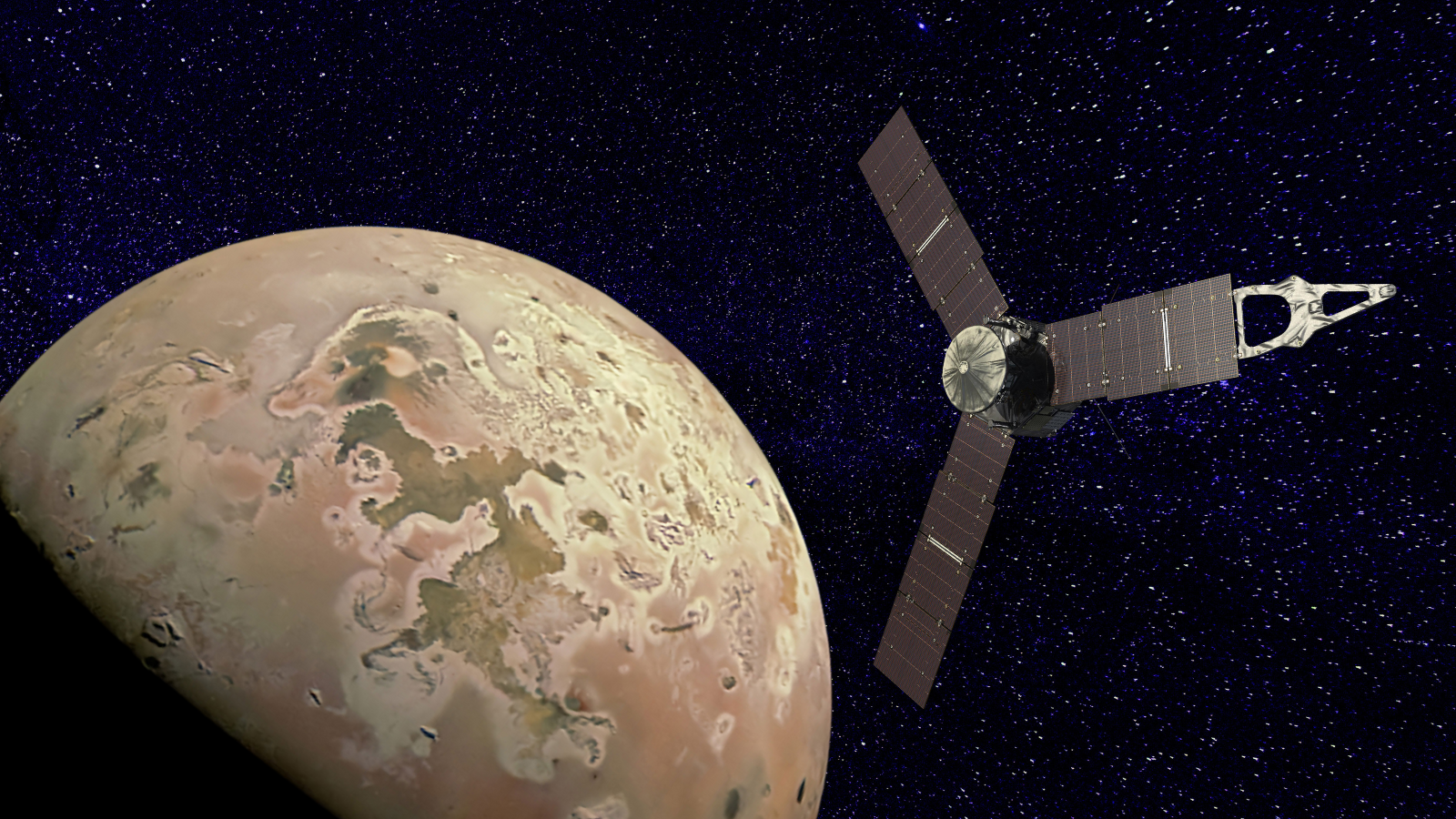

NASA's Juno spacecraft will get its closest look yet at Jupiter's moon Io on Dec. 30

© NASA/Robert Lea

Meet Taters, the cat who starred in the video streamed from space

Taters, a 3-year-old orange tabby cat, is having his 15 seconds of fame. The world met Taters after NASA used a laser to stream a test video of the feline 19 million miles from the Psyche spacecraft to Earth on Dec. 11. The footage, which took 101 seconds to reach the Hale Telescope at the …

The post Meet Taters, the cat who starred in the video streamed from space appeared first on Astronomy Magazine.

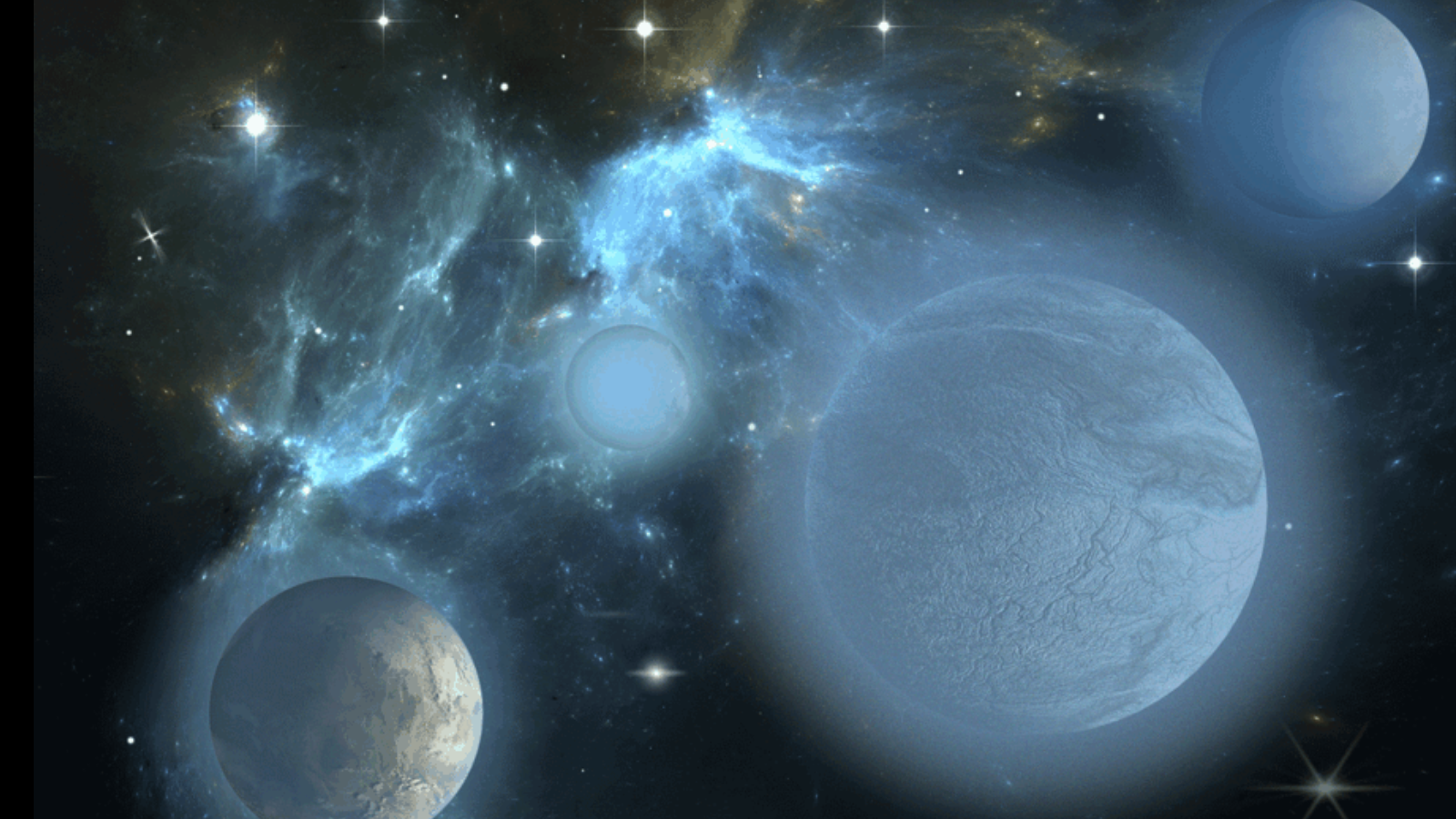

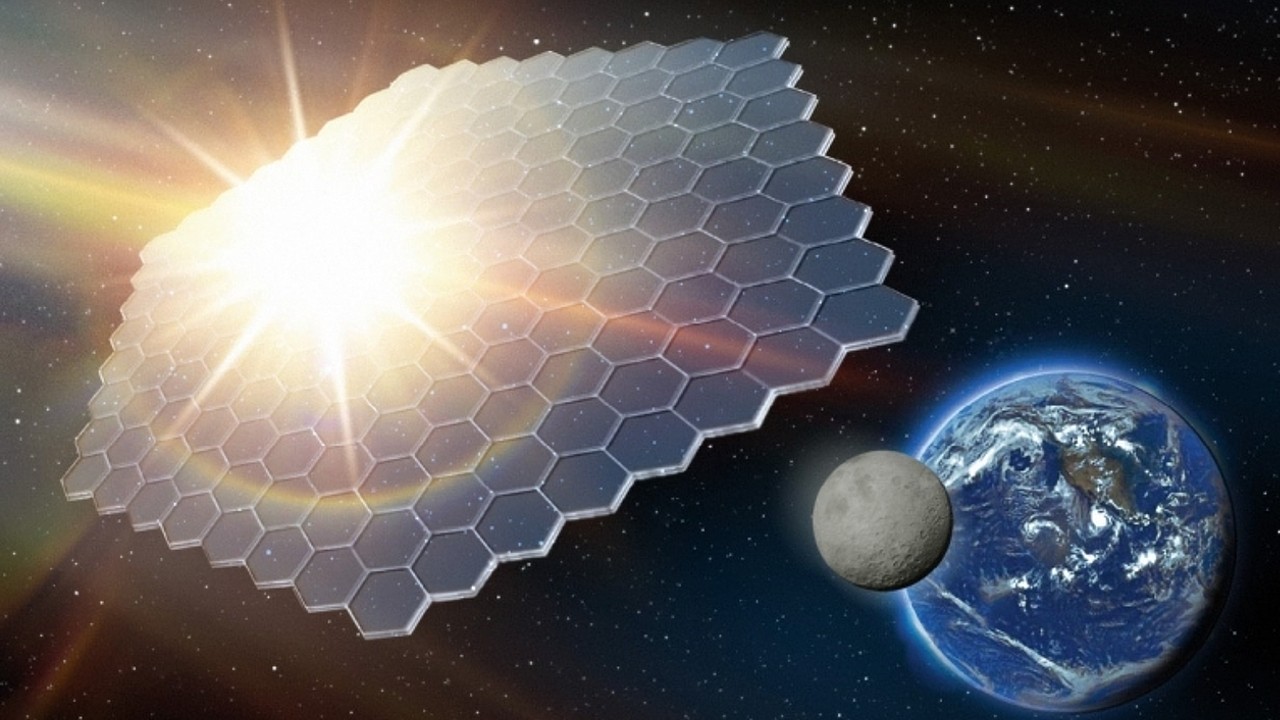

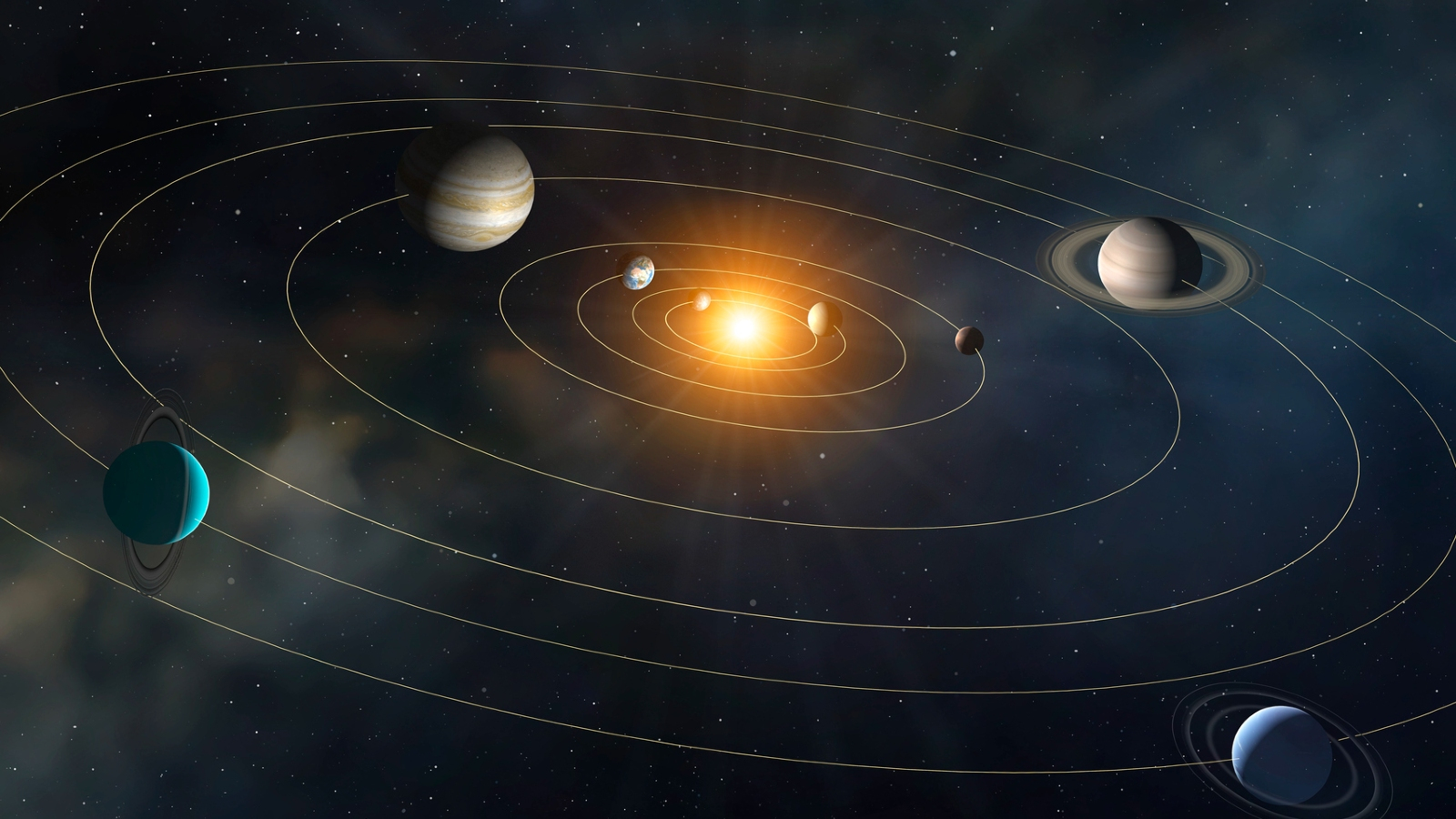

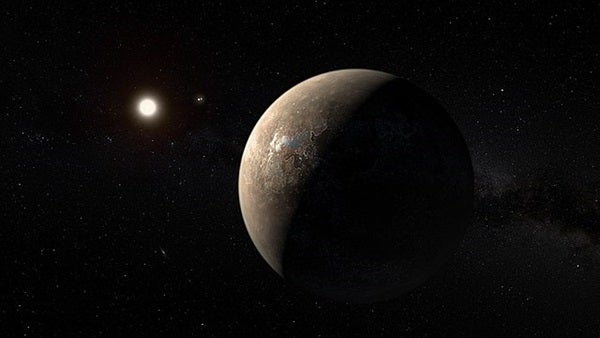

A carbon-lite atmosphere could be a sign of water and life on other terrestrial planets, MIT study finds

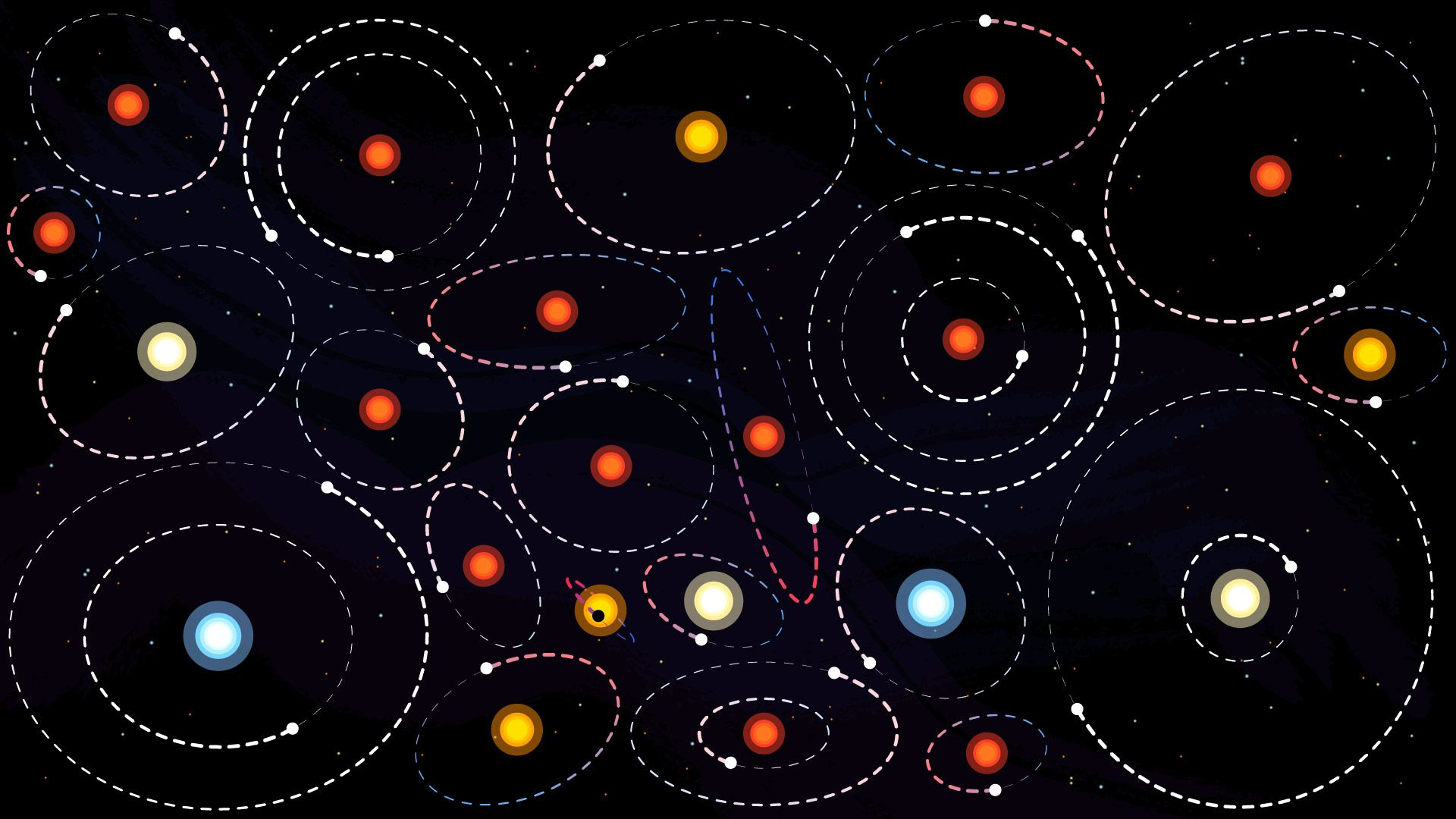

Scientists at MIT, the University of Birmingham, and elsewhere say that astronomers’ best chance of finding liquid water, and even life on other planets, is to look for the absence, rather than the presence, of a chemical feature in their atmospheres.

The researchers propose that if a terrestrial planet has substantially less carbon dioxide in its atmosphere compared to other planets in the same system, it could be a sign of liquid water — and possibly life — on that planet’s surface.

What’s more, this new signature is within the sights of NASA’s James Webb Space Telescope (JWST). While scientists have proposed other signs of habitability, those features are challenging if not impossible to measure with current technologies. The team says this new signature, of relatively depleted carbon dioxide, is the only sign of habitability that is detectable now.

“The Holy Grail in exoplanet science is to look for habitable worlds, and the presence of life, but all the features that have been talked about so far have been beyond the reach of the newest observatories,” says Julien de Wit, assistant professor of planetary sciences at MIT. “Now we have a way to find out if there’s liquid water on another planet. And it’s something we can get to in the next few years.”

The team’s findings appear today in Nature Astronomy. De Wit co-led the study with Amaury Triaud of the University of Birmingham in the UK. Their MIT co-authors include Benjamin Rackham, Prajwal Niraula, Ana Glidden, Oliver Jagoutz, Matej Peč, Janusz Petkowski, and Sara Seager, along with Frieder Klein at the Woods Hole Oceanographic Institution (WHOI), Martin Turbet of École Polytechnique in France, and Franck Selsis of the Laboratoire d’astrophysique de Bordeaux.

Beyond a glimmer

Astronomers have so far detected more than 5,200 worlds beyond our solar system. With current telescopes, astronomers can directly measure a planet’s distance to its star and the time it takes it to complete an orbit. Those measurements can help scientists infer whether a planet is within a habitable zone. But there’s been no way to directly confirm whether a planet is indeed habitable, meaning that liquid water exists on its surface.

Across our own solar system, scientists can detect the presence of liquid oceans by observing “glints” — flashes of sunlight that reflect off liquid surfaces. These glints, or specular reflections, have been observed, for instance, on Saturn’s largest moon, Titan, which helped to confirm the moon’s large lakes.

Detecting a similar glimmer in far-off planets, however, is out of reach with current technologies. But de Wit and his colleagues realized there’s another habitable feature close to home that could be detectable in distant worlds.

“An idea came to us, by looking at what’s going on with the terrestrial planets in our own system,” Triaud says.

Venus, Earth, and Mars share similarities, in that all three are rocky and inhabit a relatively temperate region with respect to the sun. Earth is the only planet among the trio that currently hosts liquid water. And the team noted another obvious distinction: Earth has significantly less carbon dioxide in its atmosphere.

“We assume that these planets were created in a similar fashion, and if we see one planet with much less carbon now, it must have gone somewhere,” Triaud says. “The only process that could remove that much carbon from an atmosphere is a strong water cycle involving oceans of liquid water.”

Indeed, the Earth’s oceans have played a major and sustained role in absorbing carbon dioxide. Over hundreds of millions of years, the oceans have taken up a huge amount of carbon dioxide, nearly equal to the amount that persists in Venus’ atmosphere today. This planetary-scale effect has left Earth’s atmosphere significantly depleted of carbon dioxide compared to its planetary neighbors.

“On Earth, much of the atmospheric carbon dioxide has been sequestered in seawater and solid rock over geological timescales, which has helped to regulate climate and habitability for billions of years,” says study co-author Frieder Klein.

The team reasoned that if a similar depletion of carbon dioxide were detected in a far-off planet, relative to its neighbors, this would be a reliable signal of liquid oceans and life on its surface.

“After reviewing extensively the literature of many fields from biology, to chemistry, and even carbon sequestration in the context of climate change, we believe that indeed if we detect carbon depletion, it has a good chance of being a strong sign of liquid water and/or life,” de Wit says.

A roadmap to life

In their study, the team lays out a strategy for detecting habitable planets by searching for a signature of depleted carbon dioxide. Such a search would work best for “peas-in-a-pod” systems, in which multiple terrestrial planets, all about the same size, orbit relatively close to each other, similar to our own solar system. The first step the team proposes is to confirm that the planets have atmospheres, by simply looking for the presence of carbon dioxide, which is expected to dominate most planetary atmospheres.

“Carbon dioxide is a very strong absorber in the infrared, and can be easily detected in the atmospheres of exoplanets,” de Wit explains. “A signal of carbon dioxide can then reveal the presence of exoplanet atmospheres.”

Once astronomers determine that multiple planets in a system host atmospheres, they can move on to measure their carbon dioxide content, to see whether one planet has significantly less than the others. If so, the planet is likely habitable, meaning that it hosts significant bodies of liquid water on its surface.

But habitable conditions don’t necessarily mean that a planet is inhabited. To see whether life might actually exist, the team proposes that astronomers look for another feature in a planet’s atmosphere: ozone.

On Earth, the researchers note that plants and some microbes contribute to drawing down carbon dioxide, although not nearly as much as the oceans. Nevertheless, as part of this process, the lifeforms emit oxygen, which reacts with the sun’s photons to transform into ozone — a molecule that is far easier to detect than oxygen itself.

The researchers say that if a planet’s atmosphere shows signs of both ozone and depleted carbon dioxide, it likely is a habitable, and inhabited world.
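The three-step strategy described above (confirm an atmosphere via carbon dioxide, compare carbon dioxide across sibling planets, then check for ozone) can be sketched as a simple triage routine. This is only an illustration, not the team's method: the `Planet` fields, the arbitrary `co2_signal` units, the 10 percent `DEPLETION_FACTOR` cutoff, and the example values are all hypothetical assumptions.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Planet:
    name: str
    co2_signal: float     # relative CO2 signal, arbitrary units (hypothetical)
    ozone_detected: bool


# Assumed cutoff: "substantially less" means under 10% of the siblings' median.
DEPLETION_FACTOR = 0.1


def classify(planets):
    """Label each planet following the three steps described in the text."""
    labels = {}
    with_atmosphere = [p for p in planets if p.co2_signal > 0]
    for p in planets:
        # Step 1: a CO2 signal is taken as evidence of an atmosphere.
        if p.co2_signal <= 0:
            labels[p.name] = "no atmosphere detected"
            continue
        # Step 2: compare against the other planets that have atmospheres.
        siblings = [q.co2_signal for q in with_atmosphere if q is not p]
        if not siblings:
            labels[p.name] = "atmosphere, nothing to compare against"
        elif p.co2_signal < DEPLETION_FACTOR * median(siblings):
            # Step 3: ozone alongside depleted CO2 hints at life.
            labels[p.name] = (
                "possibly inhabited" if p.ozone_detected else "possibly habitable"
            )
        else:
            labels[p.name] = "atmosphere, no CO2 depletion"
    return labels


# Made-up system for illustration only.
system = [
    Planet("b", 100.0, False),
    Planet("c", 2.0, True),
    Planet("d", 80.0, False),
    Planet("e", 0.0, False),
]
labels = classify(system)
for name, label in labels.items():
    print(name, "->", label)
```

The comparison is relative by design: a planet is flagged only when its carbon dioxide stands out against its neighbors, which mirrors the article's point that the signature is depletion compared to other planets in the same system rather than any absolute level.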

“If we see ozone, chances are pretty high that it’s connected to carbon dioxide being consumed by life,” Triaud says. “And if it’s life, it’s glorious life. It would not be just a few bacteria. It would be a planetary-scale biomass that’s able to process a huge amount of carbon, and interact with it.”

The team estimates that NASA’s James Webb Space Telescope would be able to measure carbon dioxide, and possibly ozone, in nearby, multiplanet systems such as TRAPPIST-1 — a seven-planet system that orbits a bright star, just 40 light years from Earth.

“TRAPPIST-1 is one of only a handful of systems where we could do terrestrial atmospheric studies with JWST,” de Wit says. “Now we have a roadmap for finding habitable planets. If we all work together, paradigm-shifting discoveries could be done within the next few years.”

© Image: Christine Daniloff, MIT; iStock

Powering the green economy: How NUS is advancing sustainability education

In this series, NUS News explores how NUS is accelerating sustainability research and education in response to climate change challenges, and harnessing the knowledge and creativity of our people to pave the way to a greener future for all.

Amid the record-high levels of greenhouse gases, pollution and deforestation, the topic of sustainability has never been more pressing. In tandem with shaping the future of sustainability and contributing to climate action, NUS offers a plethora of sustainability-related postgraduate and executive programmes in both STEM (science, technology, engineering and mathematics) and non-STEM fields. These courses are also updated regularly to ensure they remain relevant in our fast-changing world.

NUS Vice Provost for Masters’ Programmes & Lifelong Education, and Dean of the School of Continuing and Lifelong Education (NUS SCALE) Professor Susanna Leong said, “As Singapore, Asia, and the world work towards a more sustainable future, the courses that the University offers can be applied to solving immediate problems, and those of the future.” The diverse learning opportunities include short executive training courses, professional and graduate certificates, as well as credentialled postgraduate programmes, she added.

NUS offers 11 master’s degrees in various specialised fields of sustainability, from the sciences and engineering to business and climate change. The University also introduces new specialisations in sustainability for postgraduate programmes as part of its regular curriculum review. With a wide range of offerings, students can deep-dive into topics they are passionate about, with many going on to become thought leaders and experts in their respective fields.

Here are highlights of some of our sustainability-related master’s programmes.

MSc in Biodiversity Conservation and Nature-based Climate Solutions

This programme explores problems and strategies related to conservation, environmental sustainability, and climate change. Due to its geographical location in Southeast Asia, Singapore is in a unique position to explore issues where countries may prioritise economic development over conservation concerns.

Students can choose from a range of modules covering in-demand topics and skillsets, such as the impact of biological invasions and the integration of spatial and social modelling skills in environmental sustainability.

These are taught by a stellar team of faculty members from the Department of Biological Sciences with expertise in environmental sustainability, biodiversity conservation, and freshwater, marine and terrestrial ecology.

“For many of our modules, guest speakers from different environmental backgrounds and organisations are invited to talk about their work,” said Ms Kayla Lindsey, a 25-year-old student in the programme. “They also share what real-life opportunities are available to us as we look for full-time jobs after graduation.”

MSc in Energy Systems

With the global shift away from fossil fuels to more sustainable energy sources, this uniquely multidisciplinary programme by the NUS College of Design and Engineering (CDE) combines engineering and technology to address the gap in the current energy education landscape, which tends to be single-disciplinary in nature.

Beyond learning about the principles of energy technologies, the impact of policies and market-based mechanisms, as well as cost analysis, students will also acquire skills that prepare them for the global transition to greener sources of energy such as solar energy and hydrogen.

Energy Systems Modelling and Market Mechanisms, Biomass and Energy, and Management of Technological Innovation are just some of the modules they can choose from.

Graduates have gone on to pursue careers in energy analysis and operation management, consulting and policy advisory, as well as technology and innovation management in the energy sector.

MSc in Sustainable and Green Finance

The first of its kind in Asia, this course incorporates social and environmental considerations into conventional financial models. Through partnerships with industry players, students take their learning beyond the classroom and are prepared for a rapidly-evolving industry.

Launched in 2021 by NUS Business School in collaboration with the Sustainable and Green Finance Institute (SGFIN), it was set up with support from the Monetary Authority of Singapore.

By equipping students with the ability to take ESG considerations into account when making investment decisions, the course opens up a variety of career options to graduates. These range from roles in the corporate or financial sector to positions in government agencies and non-governmental organisations (NGOs).

MSc in Environmental Management

Following a recent curriculum revamp, this long-running flagship programme is now multidisciplinary as well as interdisciplinary – jointly offered by six NUS faculties and schools, including the science and law faculties.

Local as well as global in scope, it grooms graduates for key managerial roles in the private and public sectors. Students gain insights in policymaking, data analysis and other fields.

Mr Nihal Jayantha Mallikaratne, 52, an operations manager at a manufacturing firm who is enrolled in this programme part-time, wants to gain “a sound understanding of the complex environmental challenges we face today, and the strategies needed to address them.” He hopes that at the end of the programme, he will possess the knowledge and skills to make a real impact in the field of environment and sustainability.

Short-term Continuing Education and Training (CET) courses

Aside from undergraduate and master’s degrees, NUS offers short-term courses to professionals and executives directly, as well as through organisations for their employees. Currently, these CET courses, which provide a broad view of sustainability and climate change issues, fall into four categories – business, policy, engineering, and science.

With more businesses placing greater emphasis on shaping policies and practices in ESG to better manage the risks and opportunities related to sustainable development, the University is seeing a strong interest in the take-up of such courses. There has also been strong interest from government agencies to upskill policy officers and administrators on sustainability.

Social and Sustainable Investing

With a focus on the Asia-Pacific region, this two-day course covers topics such as sustainable investing, the latest developments in corporate and social responsibility, as well as ways to invest in social impact bonds and green bonds.

It is offered by the NUS Business School. Business leaders and investment managers will find it particularly relevant, although the course is open to anyone with an interest in socially and environmentally related investments.

Senior Management Programme: Policy & Leadership for Innovation and Sustainability

Corporate leaders, leaders of non-profit organisations, and senior policy professionals have taken part in this programme run by the Lee Kuan Yew School of Public Policy.

Over three weeks, participants study how different institutions, economies and societies operate as complex and adaptive systems – and learn how they can create better policies.

The Senior Management Programme (SMP) includes a week-long study trip to Zurich, Switzerland, which offers first-hand insights into the country’s policies and business practices.

One recent graduate was Mr Tan Tok Seng, Senior Deputy Director of the School Campus Department at the Ministry of Education, who shared that he was impressed by the “thoughtfully curated and professionally conducted” discussions led by experts in the field. The programme also had a “good variety of topics that benefited participants from different agencies and backgrounds”, he added.

Deep Decarbonisation: Principles and Analysis Tools

This course, offered by CDE, looks at the challenges and opportunities posed by the energy transition, as well as the analytical tools used to study the underlying issues and manage trade-offs.

Energy System Transformation, Decision-Making Under Uncertainties, and Energy System Modelling and Analysis are among the topics covered in this 14-hour programme.

One of the participants, Mr Adrian Chan, a production line trainer from Shell Jurong Island, noted that understanding decarbonisation is essential in his line of work.

“As the implementation of decarbonisation initiatives brings forth novel technologies and processes, it becomes imperative for operation technicians to receive appropriate training to proficiently operate and maintain these systems,” he said.

Sustainability 101 Course for Policy Officers

Sustainability 101 is symbolic of a “whole-of-nation” sustainability movement to create a robust green talent pipeline as enshrined in the Singapore Green Plan 2030. The programme was launched in November 2022 by the NUS Centre for Nature-based Climate Solutions in collaboration with the National Climate Change Secretariat and NUS SCALE. More than 153 government officers from over 35 agencies have since taken the programme, which covers topics such as international climate negotiations and domestic environmental policies.

To help them develop well-balanced and science-based policies in their respective fields, participants were exposed to seminars, panels, case studies and discussions led by academics, government officials and industry leaders.

Keen to find out more about the university’s wide range of sustainability programmes? View the full list here, or email sustainability@nus.edu.sg for more information.

This is the first in a two-part series on NUS’ sustainable education offerings.

What are neutron stars? The cosmic gold mines, explained

It isn’t a secret that humanity and everything around us is made of star stuff. But not all stars create elements equally. Sure, regular stars can create the basic elements: helium, carbon, neon, oxygen, silicon, and iron. But it takes the collision of two neutron stars — incredibly dense stellar corpses — to create the… Continue reading "What are neutron stars? The cosmic gold mines, explained"

The post What are neutron stars? The cosmic gold mines, explained appeared first on Astronomy Magazine.

Does “food as medicine” make a big dent in diabetes?

How much can healthy eating improve a case of diabetes? A new health care program attempting to treat diabetes by means of improved nutrition shows a very modest impact, according to the first fully randomized clinical trial on the subject.

The study, co-authored by MIT health care economist Joseph Doyle of the MIT Sloan School of Management, tracks participants in an innovative program that provides healthy meals in order to address diabetes and food insecurity at the same time. The experiment focused on Type 2 diabetes, the most common form.

The program involved people with high blood sugar levels, in this case an HbA1c hemoglobin level of 8.0 or more. Participants in the clinical trial who were given food to make 10 nutritious meals per week saw their hemoglobin A1c levels fall by 1.5 percentage points over six months. However, trial participants who were not given any food had their HbA1c levels fall by 1.3 percentage points over the same time. This suggests the program’s relative effects were limited and that providers need to keep refining such interventions.
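The gap between the two groups can be made explicit with a couple of lines of arithmetic. This is a minimal sketch using only the two point estimates quoted above; the variable names are illustrative, and the calculation says nothing about statistical uncertainty, which the article does not report.

```python
# Point estimates quoted in the article (HbA1c drop over six months).
treatment_drop = 1.5  # percentage points, participants who received food
control_drop = 1.3    # percentage points, participants who did not

# The program's relative effect is the extra drop beyond the control group.
relative_effect = treatment_drop - control_drop

# Share of the treatment group's improvement not matched by the control group.
share_attributable = relative_effect / treatment_drop

print(f"extra drop: {relative_effect:.1f} percentage points")
print(f"share of the treatment-group drop: {share_attributable:.0%}")
```

Read this way, roughly 0.2 of the 1.5-point improvement is specific to receiving food; the rest also appeared in the control group.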

“We found that when people gained access to [got food from] the program, their blood sugar did fall, but the control group had an almost identical drop,” says Doyle, the Erwin H. Schell Professor of Management at MIT Sloan.

Given that these kinds of efforts have barely been studied through clinical trials, Doyle adds, he does not want one study to be the last word, and hopes it spurs more research to find methods that will have a large impact. Additionally, programs like this also help people who lack access to healthy food in the first place by dealing with their food insecurity.

“We do know that food insecurity is problematic for people, so addressing that by itself has its own benefits, but we still need to figure out how best to improve health at the same time if it is going to be addressed through the health care system,” Doyle adds.

The paper, “The Effect of an Intensive Food-as-Medicine Program on Health and Health Care Use: A Randomized Clinical Trial,” is published today in JAMA Internal Medicine.

The authors are Doyle; Marcella Alsan, a professor of public policy at Harvard Kennedy School; Nicholas Skelley, a predoctoral research associate at MIT Sloan Health Systems Initiative; Yutong Lu, a predoctoral technical associate at MIT Sloan Health Systems Initiative; and John Cawley, a professor in the Department of Economics and the Department of Policy Analysis and Management at Cornell University and co-director of Cornell's Institute on Health Economics, Health Behaviors and Disparities.

To conduct the study, the researchers partnered with a large health care provider in the Mid-Atlantic region of the U.S., which has developed food-as-medicine programs. Such programs have become increasingly popular in health care, and could apply to treating diabetes, which involves elevated blood sugar levels and can create serious or even fatal complications. Diabetes affects about 10 percent of the adult population.

The study consisted of a randomized clinical trial of 465 adults with Type 2 diabetes, centered in two locations within the network of the health care provider. One location was part of an urban area, and the other was rural. The study took place from 2019 through 2022, with a year of follow-up testing beyond that. People in the study’s treatment group were given food for 10 healthy meals per week for their families over a six-month period, and had opportunities to consult with a nutritionist and nurses as well. Participants from both the treatment and control groups underwent periodic blood testing.

Adherence to the program was very high. Ultimately, however, the reduction in blood sugar levels experienced by people in the treatment group was only marginally bigger than that of people in the control group.

Those results leave Doyle and his co-authors seeking to explain why the food intervention didn’t have a bigger relative impact. In the first place, he notes, there could be some basic reversion to the mean in play — some people in the control group with high blood sugar levels were likely to improve that even without being enrolled in the program.

“If you examine people on a bad health trajectory, many will naturally improve as they take steps to move away from this danger zone, such as moderate changes in diet and exercise,” Doyle says.
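The statistical side of this effect, reversion to the mean, can be illustrated with a small simulation. It is a sketch under stated assumptions, not a model of the trial: the population mean, spread, and measurement noise below are hypothetical, only the 8.0 enrollment cutoff comes from the article, and no treatment or behavior change is applied to anyone.

```python
import random
from statistics import mean

rng = random.Random(0)  # fixed seed so the run is repeatable

TRUE_MEAN, TRUE_SD = 7.5, 1.0  # assumed stable underlying HbA1c (hypothetical)
NOISE_SD = 0.7                 # assumed visit-to-visit fluctuation (hypothetical)
THRESHOLD = 8.0                # enrollment cutoff quoted in the article


def measure(underlying: float) -> float:
    """One noisy reading of a person's stable underlying level."""
    return underlying + rng.gauss(0, NOISE_SD)


# Each person has a fixed underlying level that never changes in this sketch.
population = [rng.gauss(TRUE_MEAN, TRUE_SD) for _ in range(20_000)]

# Enroll only people whose first reading is at or above the cutoff.
first_readings = [(u, measure(u)) for u in population]
enrolled = [(u, m) for u, m in first_readings if m >= THRESHOLD]

baseline = mean(m for _, m in enrolled)
follow_up = mean(measure(u) for u, _ in enrolled)  # second reading, no intervention

print(f"enrolled: {len(enrolled)}")
print(f"baseline mean: {baseline:.2f}, follow-up mean: {follow_up:.2f}")
```

Because enrollment selects on a high first reading, some of that reading is unlucky noise that does not repeat, so the follow-up average is lower even though nobody's underlying level changed. Doyle's quote also points to real behavior changes, which this sketch deliberately leaves out.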

Moreover, because the healthy eating program was developed by a health care provider staying engaged with all the participants, people in the control group may have still benefitted from medical engagement and thus fared better than a control group without such health care access.

It is also possible the Covid-19 pandemic, unfolding during the experiment’s time frame, affected the outcomes in some way, although results were similar when they examined outcomes prior to the pandemic. Or it could be that the intervention’s effects might appear over a still-longer time frame.

And while the program provided food, it left it to participants to prepare meals, which might be a hurdle for program compliance. Potentially, premade meals might have a bigger impact.

“Experimenting with providing those premade meals seems like a natural next step,” says Doyle, who emphasizes that he would like to see more research about food-as-medicine programs aiming at diabetes, especially if such programs evolve and try out some different formats and features.

“When you find a particular intervention doesn’t improve blood sugar, we don’t just say, we shouldn’t try at all,” Doyle says. “Our study definitely raises questions, and gives us some new answers we haven’t seen before.”

Support for the study came from the Robert Wood Johnson Foundation; the Abdul Latif Jameel Poverty Action Lab (J-PAL); and the MIT Sloan Health Systems Initiative. Outside the submitted work, Cawley has reported receiving personal fees from Novo Nordisk, Inc, a pharmaceutical company that manufactures diabetes medication and other treatments.

© Credit: iStock

Hubble Telescope sees a bright 'snowball' of stars in the Milky Way's neighbor (image)

© ESA/Hubble & NASA, A. Sarajedini, F. Niederhofer

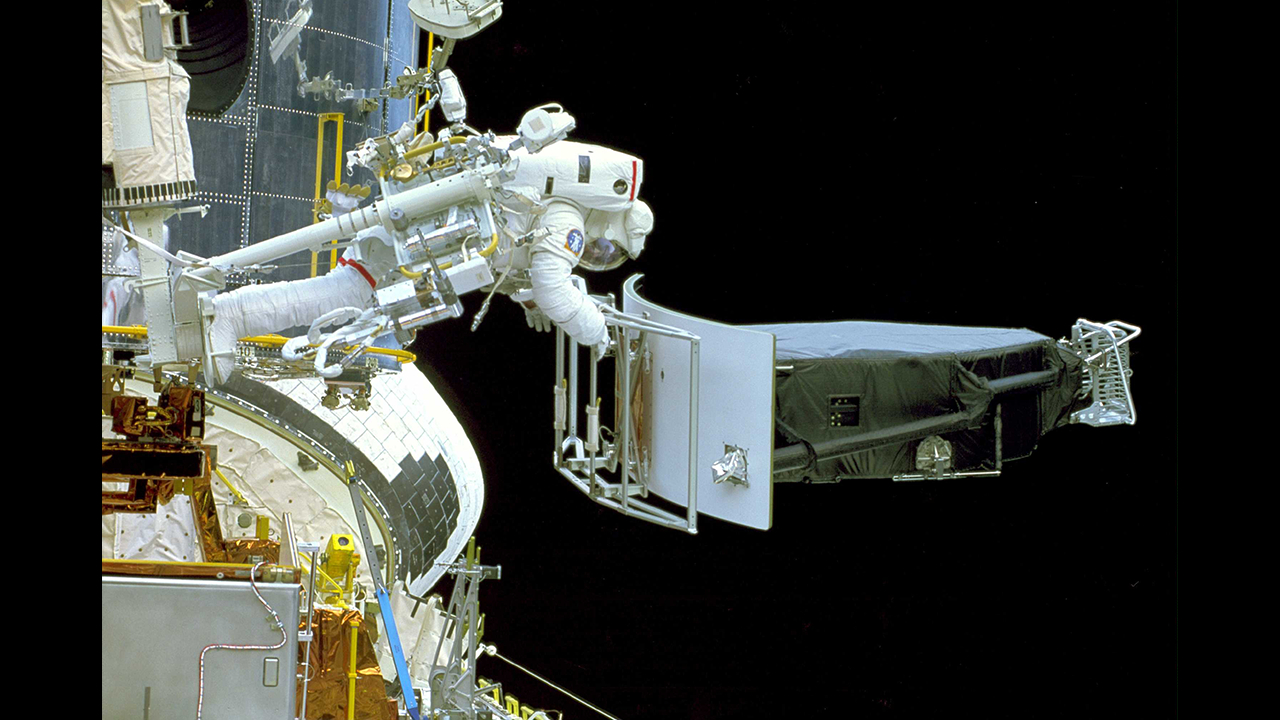

30 years ago, astronauts saved the Hubble Space Telescope

© NASA

12 out-of-this-world exoplanet discoveries in 2023

© Zayna Sheikh

10 times the night sky amazed us in 2023

© Josh Dinner

12 James Webb Space Telescope findings that changed our understanding of the universe in 2023

© Northrop Grumman

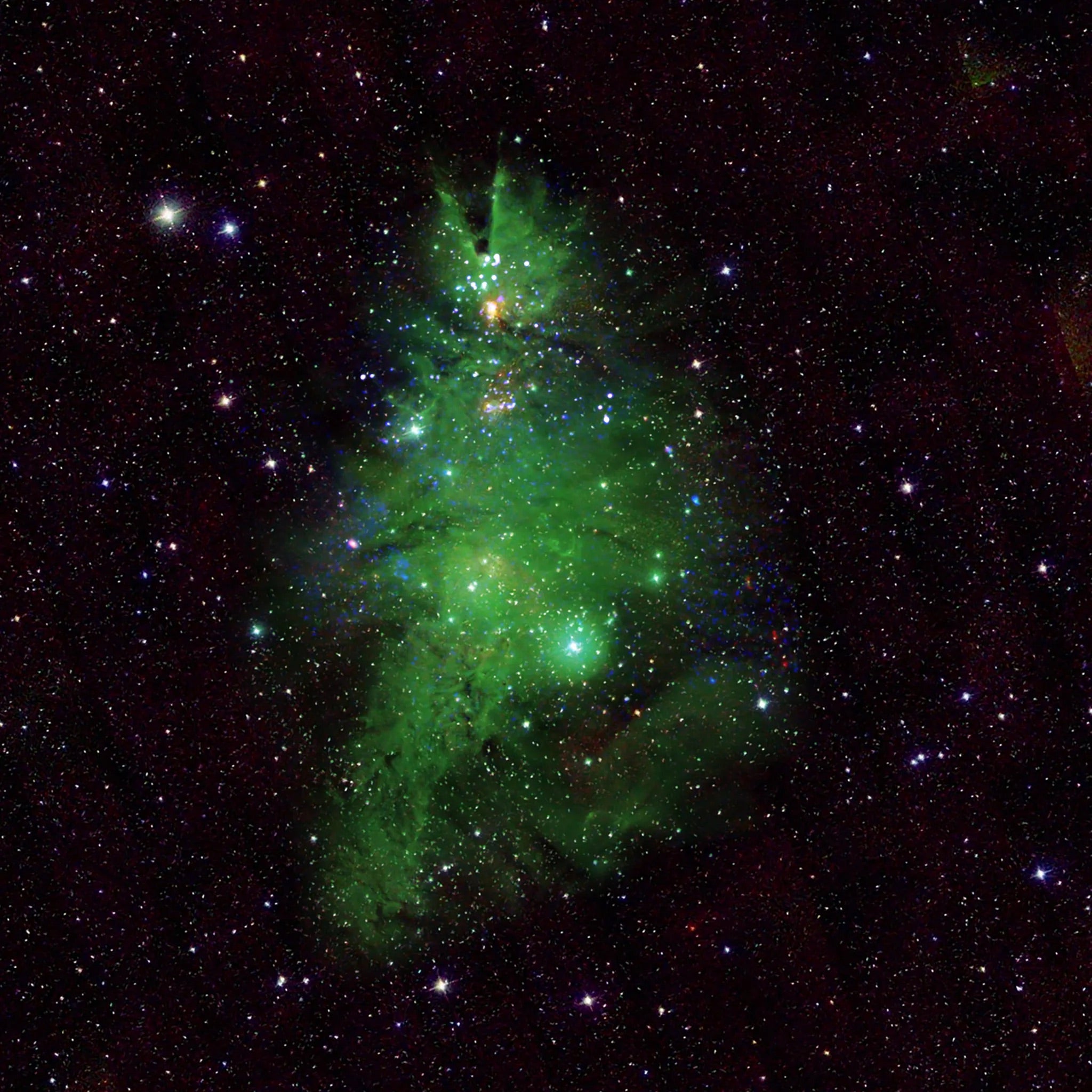

Merry Christmas from the cosmos

Have you ever seen comparison photos of objects on Earth versus objects in space, and found that there are some mind-boggling similarities? I’m talking about ones that look almost identical. A fresh image of an open star cluster, cataloged as NGC 2264 and nicknamed the Christmas Tree Cluster, offers an appearance that helps us perfectly… Continue reading "Merry Christmas from the cosmos"

The post Merry Christmas from the cosmos appeared first on Astronomy Magazine.

Nuking an incoming asteroid will spew out X-rays. This new model shows what happens

© European Space Agency

Engineers develop a vibrating, ingestible capsule that might help treat obesity

When you eat a large meal, your stomach sends signals to your brain that create a feeling of fullness, which helps you realize it’s time to stop eating. A stomach full of liquid can also send these messages, which is why dieters are often advised to drink a glass of water before eating.

MIT engineers have now come up with a new way to take advantage of that phenomenon, using an ingestible capsule that vibrates within the stomach. These vibrations activate the same stretch receptors that sense when the stomach is distended, creating an illusory sense of fullness.

In animals who were given this pill 20 minutes before eating, the researchers found that this treatment not only stimulated the release of hormones that signal satiety, but also reduced the animals’ food intake by about 40 percent. Scientists have much more to learn about the mechanisms that influence human body weight, but if further research suggests this technology could be safely used in humans, such a pill might offer a minimally invasive way to treat obesity, the researchers say.

“For somebody who wants to lose weight or control their appetite, it could be taken before each meal,” says Shriya Srinivasan PhD ’20, a former MIT graduate student and postdoc who is now an assistant professor of bioengineering at Harvard University. “This could be really interesting in that it would provide an option that could minimize the side effects that we see with the other pharmacological treatments out there.”

Srinivasan is the lead author of the new study, which appears today in Science Advances. Giovanni Traverso, an associate professor of mechanical engineering at MIT and a gastroenterologist at Brigham and Women’s Hospital, is the senior author of the paper.

A sense of fullness

When the stomach becomes distended, specialized cells called mechanoreceptors sense that stretching and send signals to the brain via the vagus nerve. As a result, the brain stimulates production of insulin, as well as hormones such as C-peptide, PYY, and GLP-1. All of these hormones work together to help people digest their food, feel full, and stop eating. At the same time, levels of ghrelin, a hunger-promoting hormone, go down.

While a graduate student at MIT, Srinivasan became interested in the idea of controlling this process by artificially stretching the mechanoreceptors that line the stomach, through vibration. Previous research had shown that vibration applied to a muscle can induce a sense that the muscle has stretched farther than it actually has.

“I wondered if we could activate stretch receptors in the stomach by vibrating them and having them perceive that the entire stomach has been expanded, to create an illusory sense of distension that could modulate hormones and eating patterns,” Srinivasan says.

As a postdoc in MIT’s Koch Institute for Integrative Cancer Research, Srinivasan worked closely with Traverso’s lab, which has developed many novel approaches to oral delivery of drugs and electronic devices. For this study, Srinivasan, Traverso, and a team of researchers designed a capsule about the size of a multivitamin that includes a vibrating element. When the pill, which is powered by a small silver oxide battery, reaches the stomach, acidic gastric fluids dissolve a gelatinous membrane that covers the capsule, completing the electronic circuit that activates the vibrating motor.

In a study in animals, the researchers showed that once the pill begins vibrating, it activates mechanoreceptors, which send signals to the brain through stimulation of the vagus nerve. The researchers tracked hormone levels during the periods when the device was vibrating and found that they mirrored the hormone release patterns seen following a meal, even when the animals had fasted.

The researchers then tested the effects of this stimulation on the animals’ appetite. They found that when the pill was activated for about 20 minutes, before the animals were offered food, they consumed 40 percent less, on average, than they did when the pill was not activated. The animals also gained weight more slowly during periods when they were treated with the vibrating pill.

“The behavioral change is profound, and that’s using the endogenous system rather than any exogenous therapeutic. We have the potential to overcome some of the challenges and costs associated with delivery of biologic drugs by modulating the enteric nervous system,” Traverso says.

The current version of the pill is designed to vibrate for about 30 minutes after arriving in the stomach, but the researchers plan to explore the possibility of adapting it to remain in the stomach for longer periods of time, where it could be turned on and off wirelessly as needed. In the animal studies, the pills passed through the digestive tract within four or five days.

The study also found that the animals did not show any signs of obstruction, perforation, or other negative impacts while the pill was in their digestive tract.

An alternative approach

This type of pill could offer an alternative to the current approaches to treating obesity, the researchers say. Nonmedical interventions such as diet and exercise don’t always work, and many of the existing medical interventions are fairly invasive. These include gastric bypass surgery, as well as gastric balloons, which are no longer used widely in the United States due to safety concerns.

Drugs such as GLP-1 agonists can also aid weight loss, but most of them have to be injected, and they are unaffordable for many people. According to Srinivasan, the MIT capsules could be manufactured at a cost that would make them available to people who don’t have access to more expensive treatment options.

“For a lot of populations, some of the more effective therapies for obesity are very costly. At scale, our device could be manufactured at a pretty cost-effective price point,” she says. “I’d love to see how this would transform care and therapy for people in global health settings who may not have access to some of the more sophisticated or expensive options that are available today.”

The researchers now plan to explore ways to scale up the manufacturing of the capsules, which could enable clinical trials in humans. Such studies would be important to learn more about the devices’ safety, as well as determine the best time to swallow the capsule before a meal and how often it would need to be administered.

Other authors of the paper include Amro Alshareef, Alexandria Hwang, Ceara Byrne, Johannes Kuosmann, Keiko Ishida, Joshua Jenkins, Sabrina Liu, Wiam Abdalla Mohammed Madani, Alison Hayward, and Niora Fabian.

The research was funded by the National Institutes of Health, Novo Nordisk, the Department of Mechanical Engineering at MIT, a Schmidt Science Fellowship, and the National Science Foundation.

© Credit: Courtesy of the researchers, MIT News

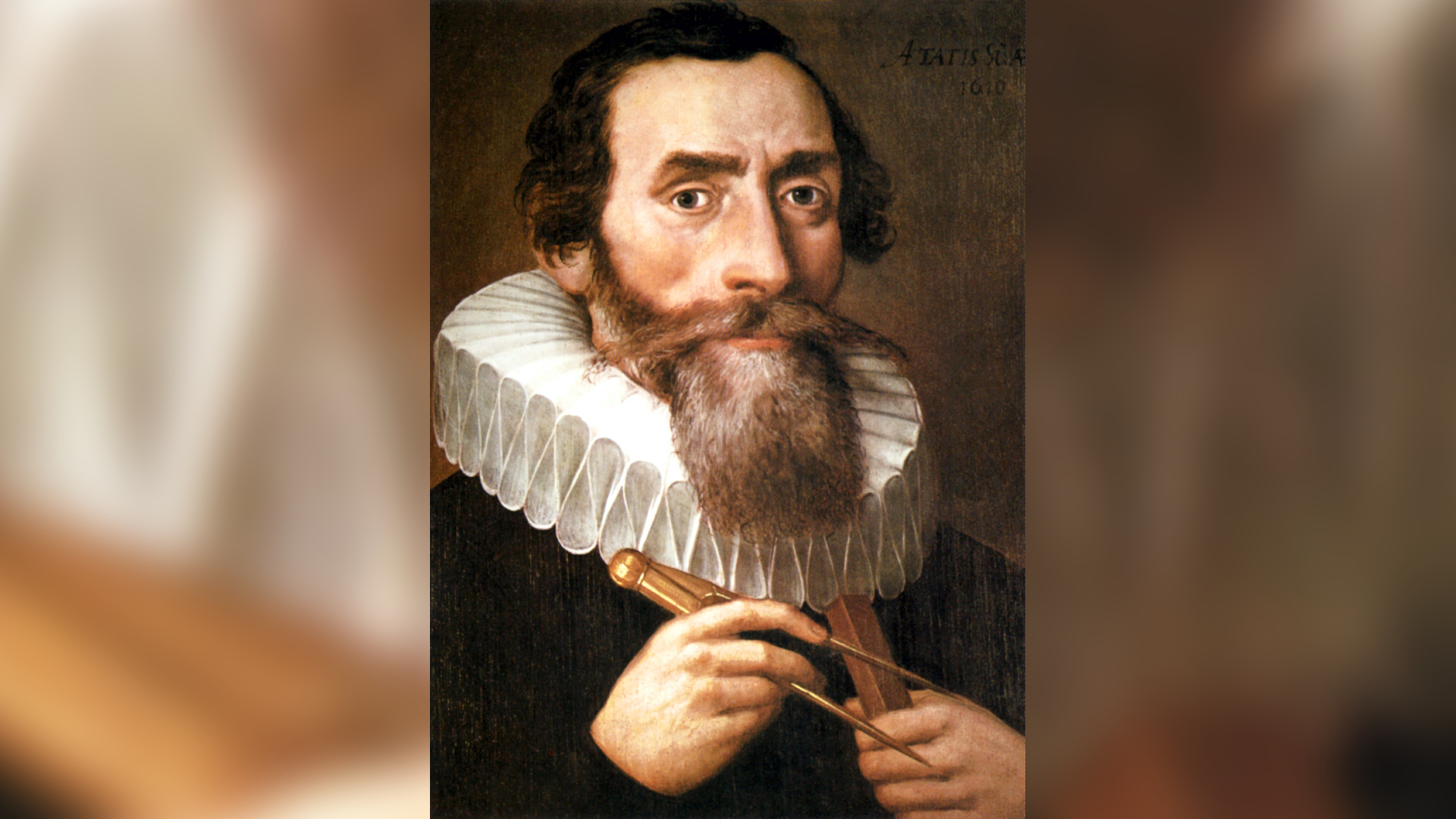

Johannes Kepler: Everything you need to know

© Pictures from History/Universal Images Group via Getty Images

The Sky This Week from December 22 to 29: A Christmastime Cold Moon

Friday, December 22: The Moon passes 3° north of Jupiter at 9 A.M. EST. Shortly after sunset, you can find both high in the east, in Aries. The Moon is now nearly 7° northeast (to the left) of Jupiter, hanging directly below the Ram’s brightest star, magnitude 2 Hamal. Jupiter is a blazing magnitude –2.7, the… Continue reading "The Sky This Week from December 22 to 29: A Christmastime Cold Moon"

The post The Sky This Week from December 22 to 29: A Christmastime Cold Moon appeared first on Astronomy Magazine.

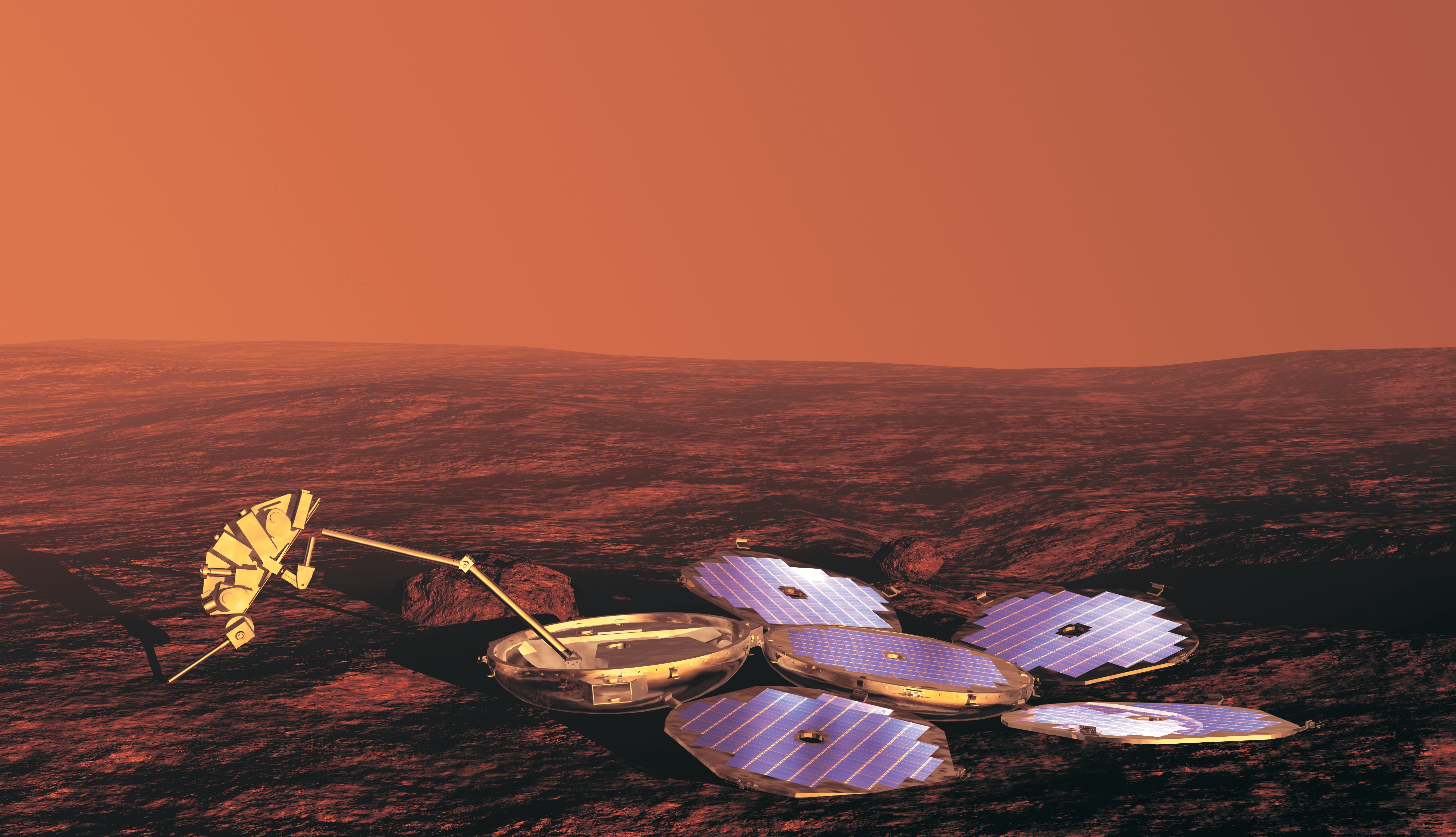

How the Beagle 2 was lost, then found, on Mars

Just south of Mars’ equator, abutting the Red Planet’s crater-studded highlands and smooth rolling lowlands, lies a broad plain wider than Texas, likely carved by a colossal impact more than 3.9 billion years ago. The blasted terrain of Isidis Planitia, a vast landscape of pitted ridges, light-colored ripples, and low dunes, today provides a forever… Continue reading "How the Beagle 2 was lost, then found, on Mars"

The post How the Beagle 2 was lost, then found, on Mars appeared first on Astronomy Magazine.

Hubble Telescope gifts us a dazzling starry 'snow globe' just in time for the holidays

© ESA/Hubble, NASA, ESA, Yumi Choi (NSF's NOIRLab), Karoline Gilbert (STScI), Julianne Dalcanton (Center for Computational Astrophysics/Flatiron Inst., UWashington)

Mirrors for the world’s largest optical telescope are on their way to Chile

The first 18 pieces for one of the European Southern Observatory’s Extremely Large Telescope (ELT) mirrors have started their 10,000-kilometer journey from France to Chile. It’s a key step on the way to completing the ELT, according to the European Southern Observatory (ESO). Each section is part of the telescope’s primary mirror, named the M1.

The post Mirrors for the world’s largest optical telescope are on their way to Chile appeared first on Astronomy Magazine.

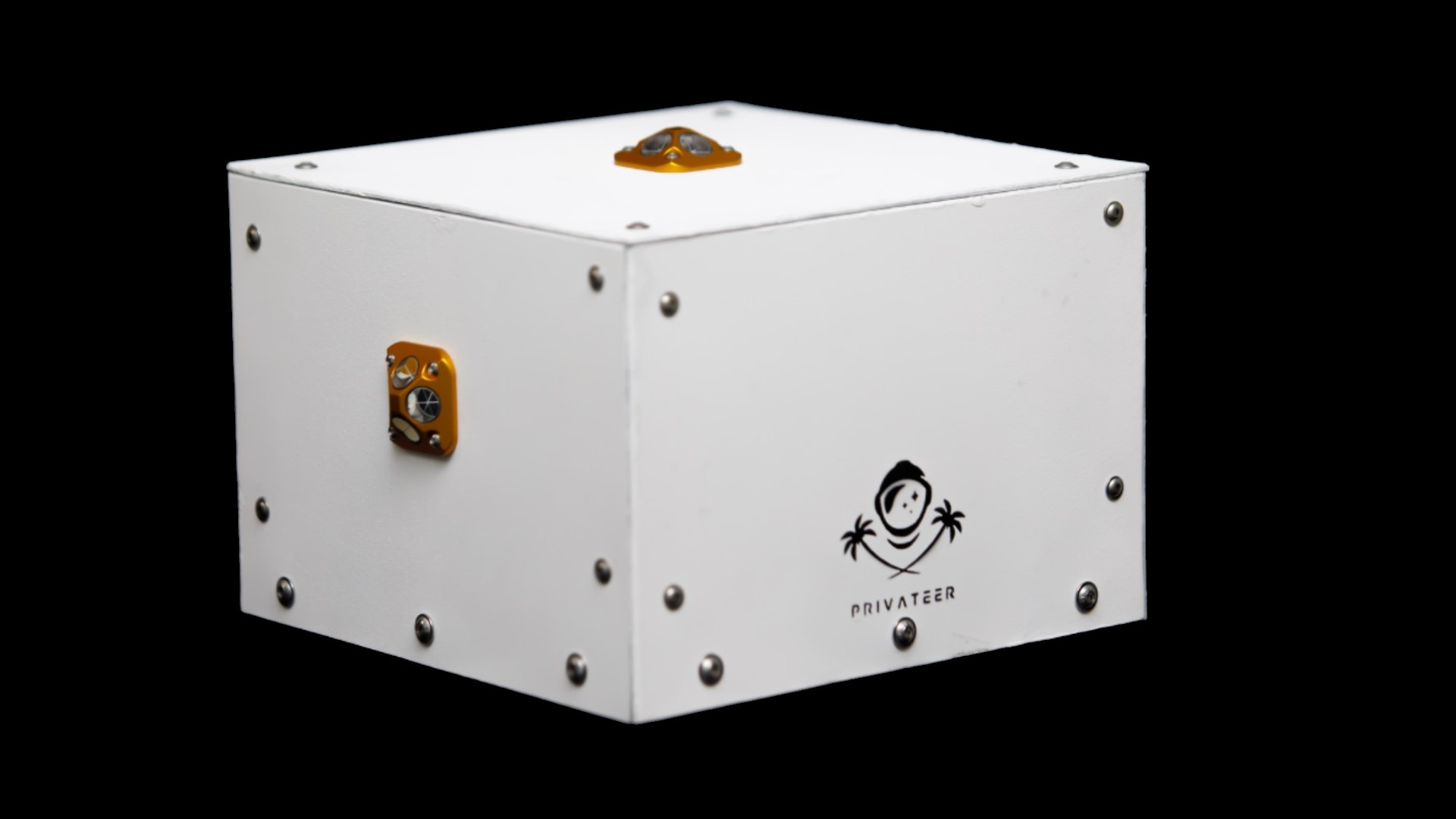

Steve Wozniak's start-up Privateer develops ride-sharing spacecraft to reduce orbital clutter

© Privateer

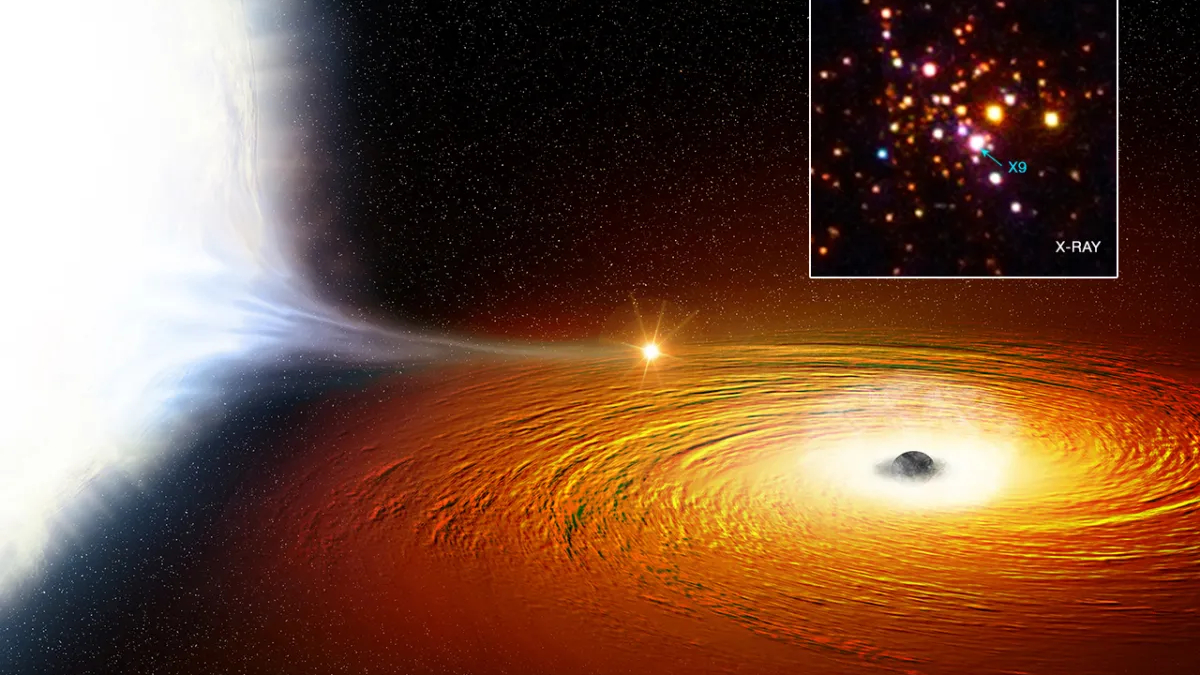

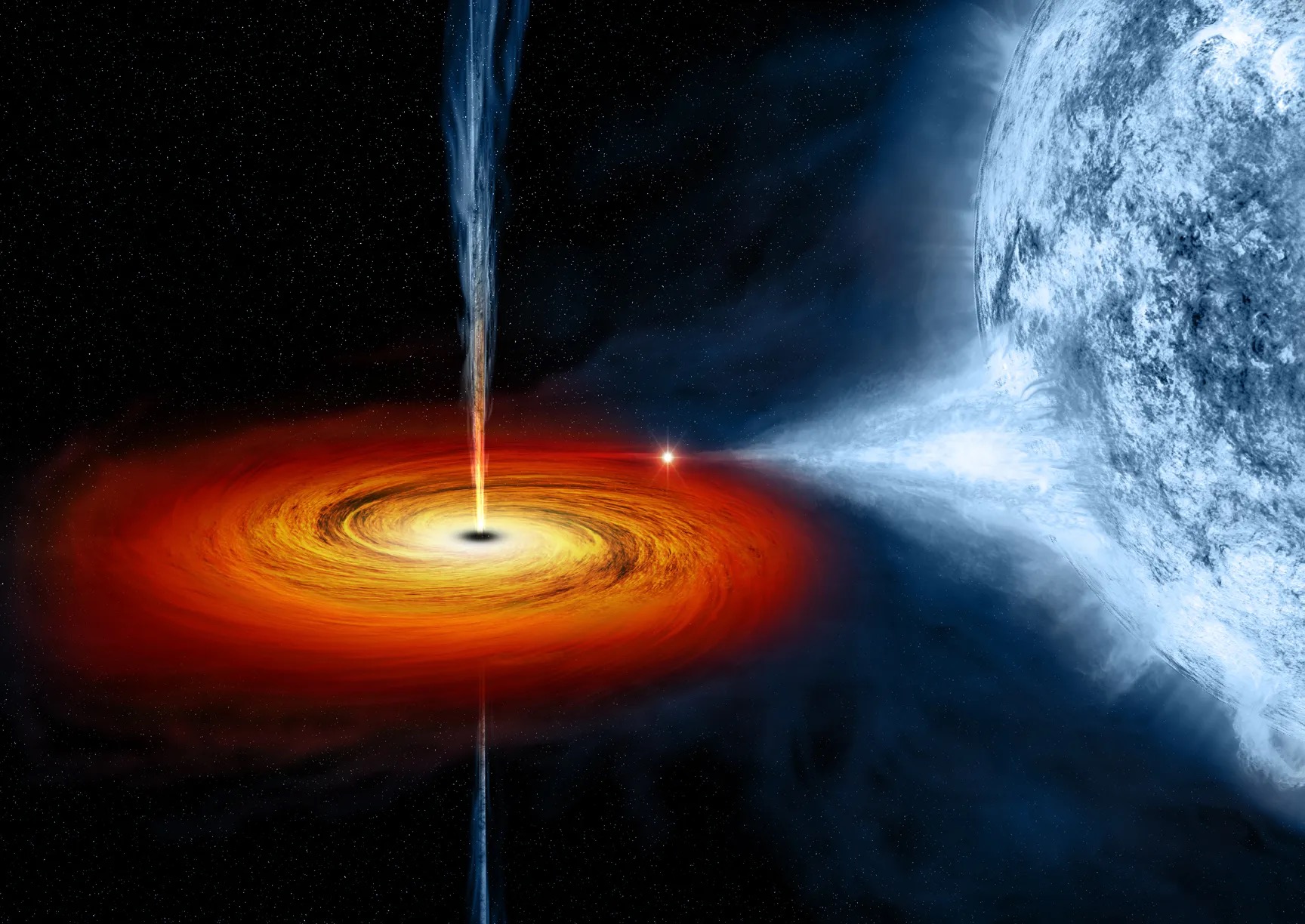

Can stars form around black holes?

© X-ray: NASA/CXC/University of Alberta/A.Bahramian et al.; Illustration: NASA/CXC/M.Weiss

Why are Americans so sick? Researchers point to middle grocery aisles.

Anna Lamb

Harvard Staff Writer

Obesity and disease rising with consumption of ultra-processed foods, say Chan School panelists

According to the Centers for Disease Control, more than 40 percent of Americans are obese, and many struggle with comorbidities such as Type 2 diabetes, heart disease, and cancer.

What is making us so sick? The ultra-processed foods that make up the bulk of the American diet are among the major culprits, according to an online panel hosted by Harvard’s T.H. Chan School of Public Health last week.

Experts from Harvard and the National Institutes of Health joined journalist Larissa Zimberoff, author of “Technically Food: Inside Silicon Valley’s Mission to Change What We Eat,” to discuss why the processing of cereals, breads, and other items typically found in the middle aisles of the grocery store may be driving American weight gain.

Kevin Hall, senior investigator at the National Institute of Diabetes and Digestive and Kidney Diseases at the NIH, said initial research into diets high in ultra-processed foods shows strong links to overconsumption of calories.

Participants in a study conducted by Hall and his team published in 2019 were randomized to receive either ultra-processed or unprocessed diets for two weeks, immediately followed by the alternate diet for two weeks.

“But despite our diets being matched for various nutrients of concern, what we found was that people consuming the ultra-processed foods ate about 500 calories per day more over the two weeks that they were on that diet as compared to the minimally processed diet,” Hall said. “They gained weight and gained body fat. And when they were on the minimally processed diet, they spontaneously lost weight and lost body fat.”

According to Hall, “ultra-processed foods are one of the four categories of something called the NOVA classification system” developed by the School of Public Health at the University of São Paulo, Brazil.

NOVA food classification system

- Natural, packaged, cut, chilled, or frozen vegetables; fruits, potatoes, and other roots and tubers

- Nuts, peanuts, and other seeds without salt or sugar

- Bulk or packaged grains such as brown, white, parboiled, and wholegrain rice, corn kernel, or wheat berry

- Fresh and dried herbs and spices (e.g., oregano, pepper, thyme, cinnamon)

- Fresh or pasteurized vegetable or fruit juices with no added sugar or other substances

- Fresh and dried mushrooms and other fungi or algae

- Grains of wheat, oats and other cereals

- Grits, flakes and flours made from corn, wheat or oats, including those fortified with iron, folic acid or other nutrients lost during processing

- Fresh, chilled or frozen meat, poultry, fish, and seafood, whole or in the form of steaks, fillets and other cuts

- Dried or fresh pasta, couscous, and polenta made from water and the grits/flakes/flours described above

- Fresh or pasteurized milk; yogurt without sugar

- Eggs

- Tea, herbal infusions

- Lentils, chickpeas, beans, and other legumes

- Coffee

- Dried fruits

- Oils made from seeds, nuts and fruits, to include soybeans, corn, oil palm, sunflower, or olives

- Butter

- White, brown, and other types of sugar, and molasses obtained from cane or beet

- Lard

- Honey extracted from honeycombs

- Coconut fat

- Syrup extracted from maple trees

- Refined or coarse salt, mined or from seawater

- Starches extracted from corn and other plants

- Any food combining two of these, such as “salted butter”

- Canned or bottled legumes or vegetables preserved in salt (brine) or vinegar, or by pickling

- Canned fish, such as sardine and tuna, with or without added preservatives

- Tomato extract, pastes or concentrates (with salt and/or sugar)

- Salted, dried, smoked, or cured meat, or fish

- Fruits in sugar syrup (with or without added antioxidants)

- Coconut fat

- Beef jerky

- Freshly made cheeses

- Bacon

- Freshly-made (unpackaged) breads made of wheat flour, yeast, water, and salt

- Salted or sugared nuts and seeds

- Fermented alcoholic beverages such as beer, alcoholic cider, and wine

- Fatty, sweet, savory or salty packaged snacks

- Pre-prepared (packaged) meat, fish, and vegetables

- Biscuits (cookies)

- Pre-prepared pizza and pasta dishes

- Ice creams and frozen desserts

- Pre-prepared burgers, hot dogs, sausages

- Chocolates, candies, and confectionery in general

- Pre-prepared poultry and fish “nuggets” and “sticks”

- Cola, soda and other carbonated soft drinks

- Other animal products made from remnants

- “Energy” and sports drinks

- Packaged breads, hamburger and hot dog buns

- Canned, packaged, dehydrated (powdered) and other “instant” soups, noodles, sauces, desserts, drink mixes and seasonings

- Baked products made with ingredients such as hydrogenated vegetable fat, sugar, yeast, whey, emulsifiers, and other additives

- Sweetened and flavored yogurts, including fruit yogurts

- Breakfast cereals and bars

- Dairy drinks, including chocolate milk

- Infant formulas and drinks, and meal replacement shakes (e.g., “slim fast”)

- Sweetened juices

- Pastries, cakes, and cake mixes

- Margarines and spreads

- Distilled alcoholic beverages such as whisky, gin, rum, vodka, etc.

Manufacturing techniques to create ultra-processed foods include extrusion, molding, and preprocessing by frying. Panelist Jerold Mande, CEO of Nourish Science and an adjunct professor of nutrition at the Chan School who has previously held positions with the FDA and USDA, pointed out that foods like shelf-stable breads found at the grocery store are often no more than “very sophisticated emulsified foams.”

But Hall noted that not all ultra-processed foods are necessarily equally bad for you. His team is conducting a follow-up study that aims to look at different qualities of ultra-processed versus whole foods, including energy density, palatability, and portions.

“Those are only two potential mechanisms, the calories per gram of food — that’s the energy density of food — and the proportion of foods that have pairs of nutrients that cross certain thresholds, foods that are high in both sugar and fat, salt and fat, and salt and carbohydrates,” he said.

“We’re starting to see a little bit of that evidence that some ultra-processed foods might have a higher risk of disease and chronic disease than others,” said Josiemer Mattei, the Donald and Sue Pritzker Associate Professor of Nutrition at the Chan School.

Still, Mattei argued for lowering consumption across the board.

“Higher consumption and higher intake of ultra-processed foods overall was associated with higher risk of eventually developing Type 2 diabetes, and more emerging evidence coming with cardiovascular disease, especially for coronary heart disease,” she said.

All the panelists agreed that obesity and negative health outcomes have risen alongside consumption of ultra-processed foods.

“We need to invest more in the science,” Mande said. “We need to make sure our regulatory agencies work, and we need to leverage the biggest programs.”

Study finds one fifth of Australian smokers plan to keep smoking

A new study has revealed that one in five Australian smokers (21%) would prefer to still be smoking in the next 1-2 years, only 59% would prefer to quit smoking altogether, and the remainder would either prefer to switch to a lower-harm alternative (12%) or are uncertain.

From high-speed electric cars to ETH in space

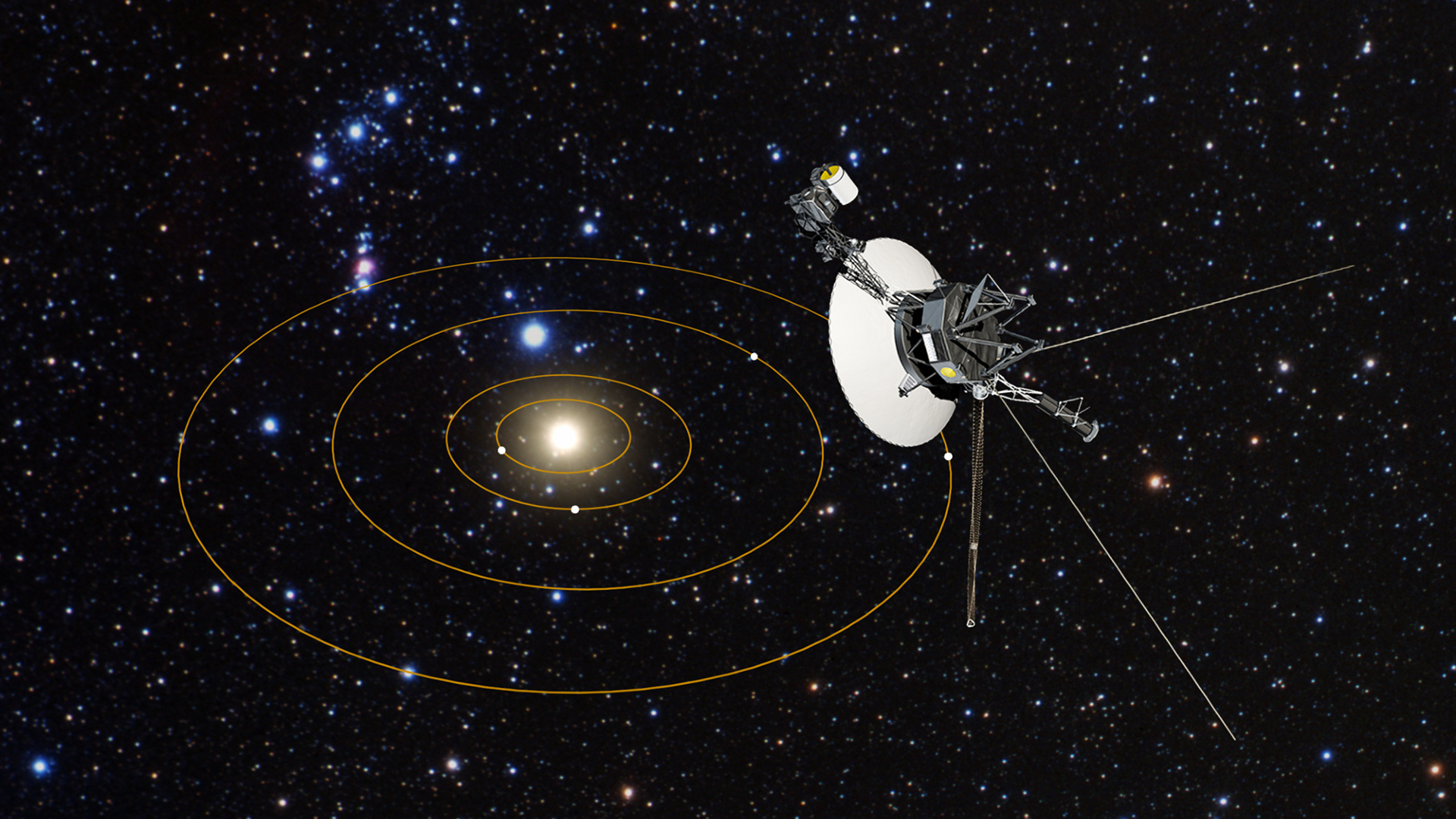

Voyager 1 is sending binary gibberish to Earth from 15.1 billion miles away

Update: According to NASA’s Jet Propulsion Laboratory spokesperson Calla Coffield on March 8, the problem is still unresolved. However, the NASA team is fairly confident it is the flight data system’s (FDS) memory that is causing the problems. The fixes have been minimal, such as setting the object; however, there are more ambitious fixes yet to…

The post Voyager 1 is sending binary gibberish to Earth from 15.1 billion miles away appeared first on Astronomy Magazine.

Saving lives in the ICU: Clean teeth

‘Striking’ study suggests daily use of a toothbrush lowers risk of hospital-acquired pneumonia, intensive-care mortality

BWH Communications

Researchers have found an inexpensive tool that may save lives in the hospital — and it comes with bristles on one end.

Investigators from Harvard-affiliated Brigham and Women’s Hospital worked with colleagues from Harvard Pilgrim Health Care Institute to examine whether daily toothbrushing among hospitalized patients is associated with lower rates of hospital-acquired pneumonia and other outcomes. The team combined the results of 15 randomized clinical trials that included more than 2,700 patients and found that hospital-acquired pneumonia rates were lower among patients who brushed daily than among those who did not. The results were especially compelling among patients on mechanical ventilation. The findings are published in JAMA Internal Medicine.

“The signal that we see here toward lower mortality is striking — it suggests that regular toothbrushing in the hospital may save lives,” said corresponding author Michael Klompas, an infectious disease physician at the Brigham, professor at Harvard Medical School, and professor of population medicine at Harvard Pilgrim Health Care Institute. “It’s rare in the world of hospital preventative medicine to find something like this that is both effective and cheap. Instead of a new device or drug, our study indicates that something as simple as brushing teeth can make a big difference.”

The team conducted a systematic review and meta-analysis to determine the association between daily toothbrushing and pneumonia. Using a variety of databases, the researchers collected and analyzed randomized clinical trials from around the world that compared the effect of regular oral care with toothbrushing versus oral care without toothbrushing on the occurrence of hospital-acquired pneumonia and other outcomes.

The analysis found that daily toothbrushing was associated with a significantly lower risk for hospital-acquired pneumonia and mortality in the intensive care unit. In addition, the investigators identified that toothbrushing for patients in the ICU was associated with fewer days of mechanical ventilation and a shorter length of stay in intensive care.

Most of the research in the team’s review explored the role of teeth-cleaning in adults in the ICU. Only two of the 15 studies included in the authors’ analysis evaluated the impact of toothbrushing on non-ventilated patients. The team is hopeful that the protective effect of toothbrushing extends to non-ICU patients but noted the need for further research.

“The findings from our study emphasize the importance of implementing an oral health routine that includes toothbrushing for hospitalized patients,” Klompas said. “Our hope is that our study will help catalyze policies and programs to assure that hospitalized patients regularly brush their teeth. If a patient cannot perform the task themselves, we recommend a member of the patient’s care team assist.”

NASA laser-beams adorable cat video to Earth from 19 million miles away (video)

© NASA/JPL-Caltech

The year in photos

Part of the Photography series

Harvard’s campus and community through the lens of our photographers.

Academic and athletic highs, dramatic scenes on- and offstage, quiet moments, a changing of the presidential guard. The year 2023 added its imprint to the long Crimson line.

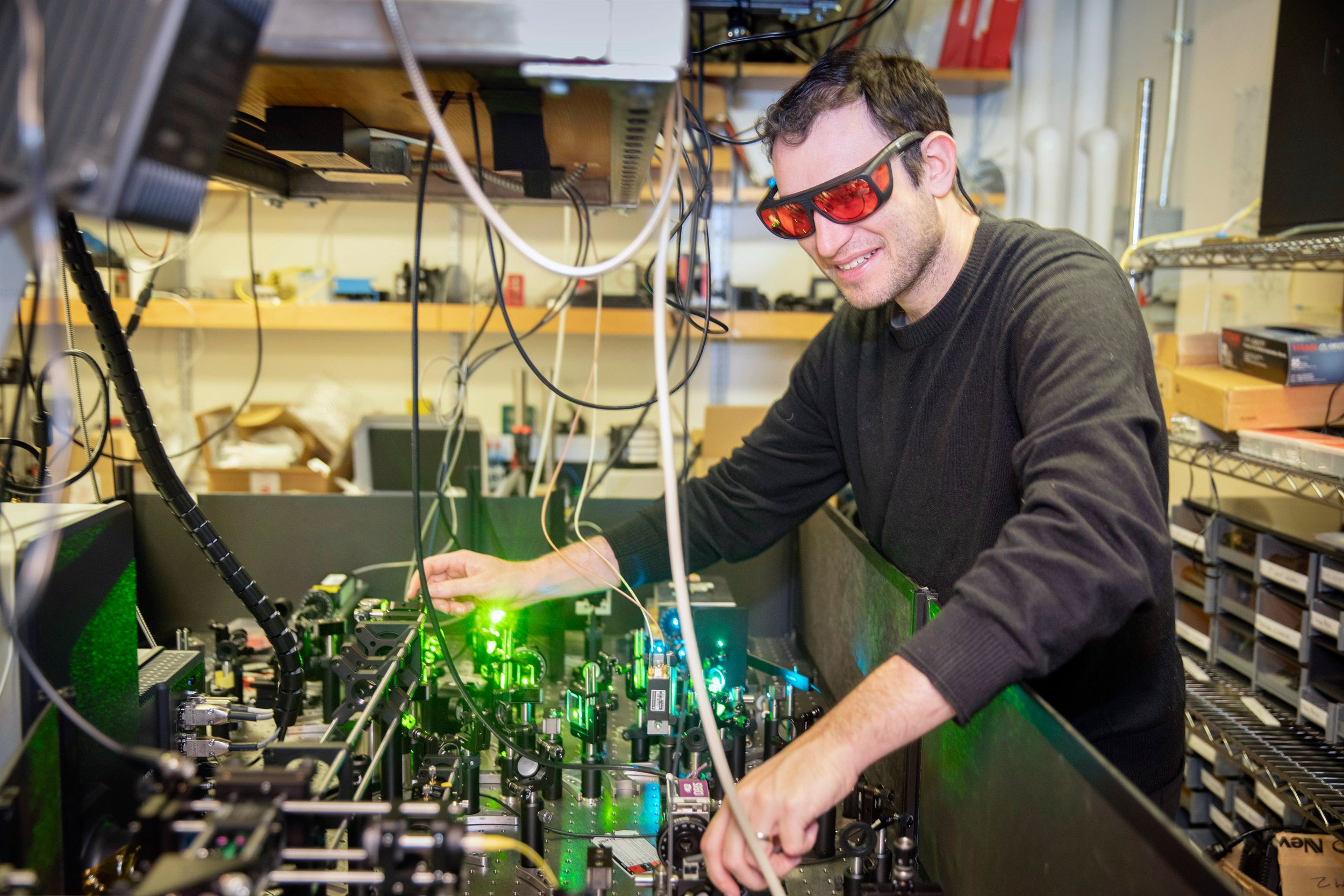

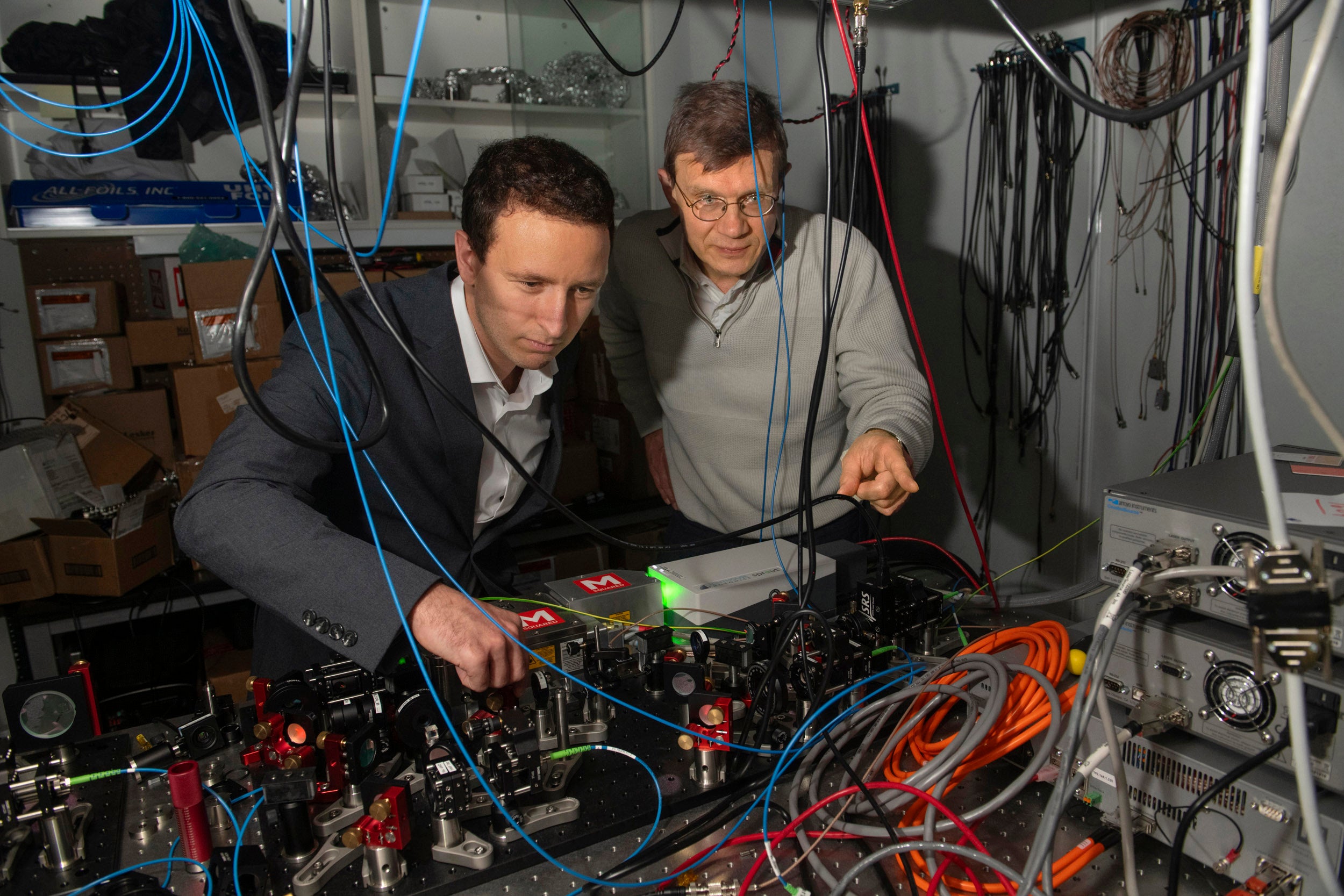

Postdoc William Allen performs research with lasers in the lab of Xiaowei Zhuang.

Kris Snibbe/Harvard Staff Photographer

During the 2023 Wintersession, 12 students build a Japanese river skiff in an apprenticeship-style “silent” workshop. Sachiko Kirby ’26 closely examines the boat edge for straightness as she uses the plane tool.

Stephanie Mitchell/Harvard Staff Photographer

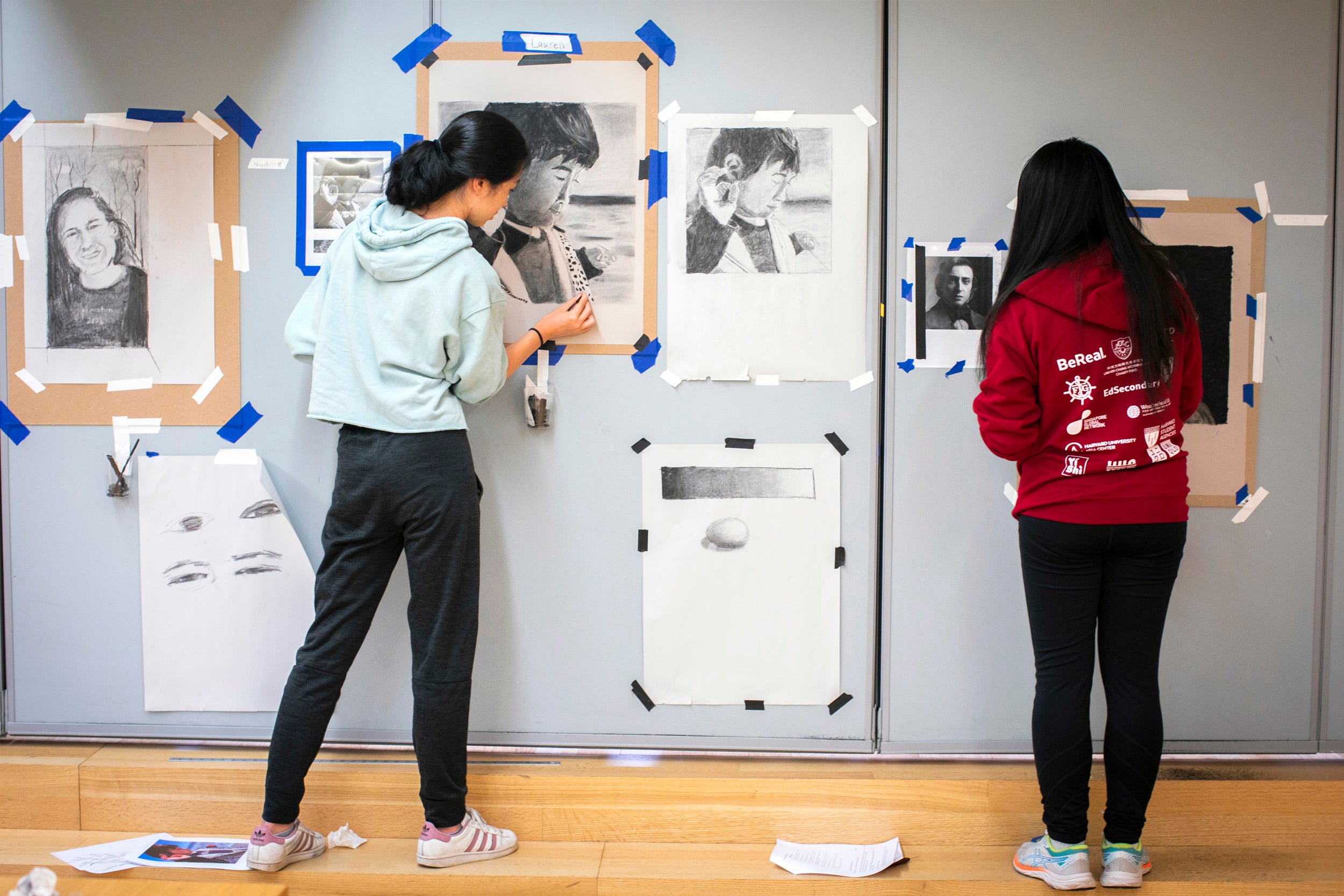

Lauren Chen ’24 (left) and Minjue Wu ’22 create charcoal drawings during a portraiture class.

Stephanie Mitchell/Harvard Staff Photographer

Chilly crowds join the Parade for Hasty Pudding 2023 Woman of the Year Jennifer Coolidge.

Jon Chase/Harvard Staff Photographer

Alexander Yang (from left), Katherine Marguerite, and Leen Al Kassab receive their residency assignments during Match Day at Harvard Medical School.

Kris Snibbe/Harvard Staff Photographer

Harvard Climbing Club members socialize before getting in a workout.

Jon Chase/Harvard Staff Photographer

The Harvard Foundation hosts the 37th Annual Cultural Rhythms celebrating 2023 Artist of the Year Issa Rae, flanked by Alta Mauro (left) and Sade Abraham (right).

Jon Chase/Harvard Staff Photographer

Devon Gates performs during the show.

Jon Chase/Harvard Staff Photographer

Students strut during Eleganza, an annual fashion and talent show put on at the Bright-Landry Center.

Stephanie Mitchell/Harvard Staff Photographer

Poetry of the past and present intermingle. Harvard student poet Mia Word ’24 stands for a portrait outside Longfellow House on Brattle Street. She selected the location because of its connection to Phillis Wheatley, the first African American published poet.

Stephanie Mitchell/Harvard Staff Photographer